Bojie Li

2024-02-03

We booked our wedding photoshoot with the Korean chain Artiz Studio as early as 2021, hoping to shoot at the University of Science and Technology of China in Hefei, but the campus has been closed to outsiders since the pandemic. Fortunately, Artiz Studio has branches nationwide, so we switched to Beijing for our shoot in August 2023 at no additional cost, and the shooting environment in Beijing turned out to be even better than in Hefei.

Edited photo album (131 photos)

Video photo album (03:54, 150 MB)

2024-02-03

(This article is a transcript of a speech given by the author at the first Zhihu AI Pioneer Salon on January 6, 2024)

I am honored to meet everyone and to share at the Zhihu AI Pioneer Salon. I am Bojie Li, co-founder of Logenic AI. Currently, AI Agents are very popular. For example, in a roadshow with more than 70 projects, over half are related to AI Agents. What will the future of AI Agents look like? Should they be more interesting or more useful?

AI has always developed in two directions: interesting AI, which is more like humans, and useful AI, which is more like a tool. Which should it be? There is a lot of controversy. For example, Sam Altman, CEO of OpenAI, has said that AI should be a tool and not a life form. What we are doing now is the opposite: we are making AI more like humans. In fact, many AIs in science fiction are more like humans, such as Samantha in “Her”, Tu Yaya in “The Wandering Earth 2”, and Ash in “Black Mirror”, and we hope to bring these sci-fi scenes to reality.

Besides interesting versus useful, there is another dimension: fast thinking versus slow thinking. The book “Thinking, Fast and Slow” divides human thinking into these two modes. Fast thinking is subconscious and needs no deliberation. ChatGPT’s question-and-answer style can be considered a kind of fast thinking: it responds immediately to each question and won’t proactively reach out when you don’t ask it anything. Slow thinking, on the other hand, is stateful, complex thinking: how to plan and solve a complex problem, what to do first, and what to do next.

2024-01-27

(The video contains 96 photos, 06:24, 190 MB)

2024-01-08

(Reprinted from Sohu Technology, author: Liang Changjun)

Editor’s Note:

Life reignites, like spring willows sprouting, after enduring the harsh winter, finally bursting with vitality.

Everyone is a navigator. In the journey of life, we inevitably encounter difficulties, setbacks, and failures. Weathering the storms, we constantly adjust our course, move forward firmly, and search for our own shore.

To reignite life is also to recognize our own worth anew. We need to learn to appreciate our strengths, like the harmony of a qin and se, and accept our shortcomings, like raw jade that needs to be polished to shine.

This road is not easy, but like a spring in the stone, accumulating day by day, eventually converging into the sea.

On this New Year’s Eve, Sohu Finance and Sohu Technology jointly launch a special report series on how ordinary individuals reignite their lives and bravely face life’s challenges.

2023-12-23

People often ask me to recommend some classic papers related to AI Agents and large models. Here, I list some papers that have been quite enlightening for me, which can serve as a Reading List.

Most of these papers were just published this year, but there are also some classic papers on text large models and image/video generation models. Understanding these classic papers is key to comprehending large models.

If you finish reading all these papers, even if you only grasp the core ideas, I guarantee you will no longer be just a prompt engineer but will be able to engage in in-depth discussions with professional researchers in large models.

2023-12-22

(Reprinted from USTC Alumni Foundation)

On December 21, the USTC Beijing Alumni AI Salon was held at the Computer Network Information Center of the Chinese Academy of Sciences. The former Huawei “genius youth” and co-founder of Logenic AI, Li Bojie (1000), delivered a keynote report on “The Next Stop for AI Agents: Interesting or Useful?” sharing with nearly 200 students and alumni both online and offline.

Keynote Report

The report revolved around the theme “AI Agent: Useful or Interesting?” and, combining specific life and work scenarios, analyzed from an “interesting” perspective how to achieve long-term memory of AI agents at a low cost and how to model the internal thought process of humans; from a “useful” perspective, it discussed how to achieve image understanding of AI agents, complex task planning and decomposition, and how to reduce hallucinations. In addition, he also shared his views on how to reduce the inference cost of large models.

2023-12-16

(This article was first published on Zhihu)

No conflict of interest: Since I am not working on foundational large models (I work on infra and application layers) and am currently not involved in the domestic market, I can provide some information from a relatively neutral perspective.

After a few months of entrepreneurship, I found that I could access much more information than ordinary big company employees, learning a lot from investors and core members of the world’s top AI companies. Based on the information gathered in the United States over three months, I feel that ByteDance and Baidu are the most promising among the big companies, and among the startups that have publicly released large models, Zhipu and Moonshot are the most promising.

Although Robin Li said that there are already hundreds of companies working on foundational large models in China, foundational large models are relatively homogeneous, so the market is likely to end up like the public cloud market, with the top 3 occupying most of the market share and the rest lumped together as “others”.

Most of the large model startups in China have been around for only about half a year, and nothing is set in stone yet. Some hidden masters are still quietly preparing their big moves. The era of large models has just begun, and as long as the green hills remain, one need not worry about firewood.

2023-12-08

(This article was first published on Zhihu)

Demo video editing, technical report leaderboard manipulation, model API keyword filtering, Gemini has simply become a joke in the realm of big model releases…

Technical Report Leaderboard Manipulation

I just discussed with our co-founder Siyuan, who is an old hand at evaluation, and he confirmed my guess.

First of all, when comparing with GPT-4, it’s unfair to use CoT for Gemini and few-shot for GPT-4. CoT (Chain of Thought) can significantly improve reasoning ability. The difference with or without CoT is like allowing one person to use scratch paper during an exam while the other is only allowed to calculate in their head.

Even more egregious is the use of CoT@32, which means answering each question 32 times and selecting the answer that appears most frequently as the output. This suggests that Gemini’s hallucinations are severe: its accuracy on a single attempt at the same question is low, hence the need to answer 32 times and take the most frequent answer. The cost would be prohibitive if this were deployed in a production environment!
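To make the voting step concrete, here is a minimal sketch of plain majority voting over k sampled answers, as described above. It is not Gemini’s actual evaluation code: `sample_answer` is a hypothetical stand-in for one sampled chain-of-thought run, and the answer strings and the 40% single-attempt accuracy are made-up numbers for illustration only.

```python
import random
from collections import Counter

def majority_vote(answers):
    """Return the answer that appears most often among the samples."""
    return Counter(answers).most_common(1)[0][0]

def sample_answer(rng, correct="42", wrong=("41", "43", "44"), p_correct=0.4):
    """Hypothetical stand-in for one sampled CoT run of a model.

    With probability p_correct it returns the right answer, otherwise
    one of several wrong answers chosen at random.
    """
    if rng.random() < p_correct:
        return correct
    return rng.choice(wrong)

def cot_at_k(rng, k=32, **kwargs):
    """Sample k answers for one question and take the most frequent one."""
    samples = [sample_answer(rng, **kwargs) for _ in range(k)]
    return majority_vote(samples), samples

if __name__ == "__main__":
    rng = random.Random(0)
    answer, samples = cot_at_k(rng, k=32)
    print("voted answer:", answer)
    print("model calls spent on one question:", len(samples))
```

The point of the sketch is the cost: every question costs k model calls instead of one, which is why a score reported under CoT@32 says little about what a single production request would return.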

2023-12-06

(This article was first published on Zhihu)

It’s still too early to talk about a GPT era, but LVM is indeed very interesting work. It has attracted a lot of attention even before its source code was released, and many people I talked to over the past few days mentioned it. The fundamental reason is that LVM is very similar to the end-to-end visual large model architecture that everyone imagines. I guess GPT-4V might also have a similar architecture.

Current multimodal large models basically take a frozen text large model (such as LLaMA), connect it to a frozen encoder and a frozen decoder, and train a thin projection layer (glue layer) in between to stick the encoder/decoder and the middle transformer together. MiniGPT-4, LLaVA, and the recent MiniGPT-v2 (which added authors from Meta and is worth a look) all follow this idea.
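As a toy illustration of this “glue layer” idea, the sketch below uses plain Python lists instead of a real deep learning framework. The dimensions, the function names, and the random feature vectors are all assumptions made up for the example; real systems use a pretrained image encoder and text model here, and the projection is trained rather than left at its random initial values.

```python
import random

VISION_DIM = 4  # hypothetical output width of the frozen image encoder
TEXT_DIM = 6    # hypothetical embedding width of the frozen text model

def make_projection(rng, in_dim, out_dim):
    """The only trainable part: one in_dim x out_dim linear map."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(out_dim)] for _ in range(in_dim)]

def project(features, weights):
    """Map each encoder feature vector into the text embedding space."""
    out_dim = len(weights[0])
    return [
        [sum(f[i] * weights[i][j] for i in range(len(f))) for j in range(out_dim)]
        for f in features
    ]

def build_input_sequence(image_features, text_embeddings, weights):
    """Prepend projected image 'tokens' to the text token embeddings."""
    return project(image_features, weights) + text_embeddings

rng = random.Random(0)
weights = make_projection(rng, VISION_DIM, TEXT_DIM)
# Stand-ins for the outputs of the frozen encoder and frozen embedding table.
image_features = [[rng.random() for _ in range(VISION_DIM)] for _ in range(3)]
text_embeddings = [[rng.random() for _ in range(TEXT_DIM)] for _ in range(5)]
sequence = build_input_sequence(image_features, text_embeddings, weights)
trainable_params = VISION_DIM * TEXT_DIM

if __name__ == "__main__":
    print("sequence length fed to the text model:", len(sequence))
    print("trainable parameters in the glue layer:", trainable_params)
```

Only `weights` would be updated during training in this setup, so everything the text model learns about images has to pass through that one small matrix.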

These existing multimodal large model demos perform well, but there are some fundamental problems. For example, their speech recognition accuracy is not high, and their speech synthesis is not as clear as specialized models such as Whisper and VITS. Their image generation is also less detailed than Stable Diffusion. Tasks that require precise correspondence between input and output images or speech are harder still, such as placing the logo from an input image onto an output image generated from the prompt, or doing voice style transfer like XTTS-v2. This is an interesting phenomenon: in theory the projection layer can model more complex information, yet in practice the results are less accurate than using text as the intermediate representation.

The fundamental reason is the lack of image information during the training process of the text large model, leading to a mismatch in the encoding space. It’s like a congenitally blind person, even if they read a lot of text, some information about color is still missing.

So I have always believed that multimodal large models should introduce text, image, and speech data at the pre-training stage, rather than pre-training various modal models separately and then stitching the different modal models together.

2023-11-24

(This article is reprinted from NextCapital’s official WeChat account)

AI Agents face two categories of key challenges. The first category includes their multimodal, memory, and task planning capabilities, as well as personality and emotions; the second involves their cost and how they are evaluated.

On November 5, 2023, at the 197th session of the Jia Cheng Entrepreneurship Banquet, themed “In-depth discussion on the latest understanding of AI and the overseas market expansion of Chinese startups”, Huawei’s “genius youth” Li Bojie was invited to share his thoughts in a talk titled “Chat to the left, Agent to the right: My thoughts on AI Agents”.

Download the speech Slides PDF

Download the speech Slides PPT

The following is the main content:

I am very honored to share some of my understanding and views on AI Agents with you.

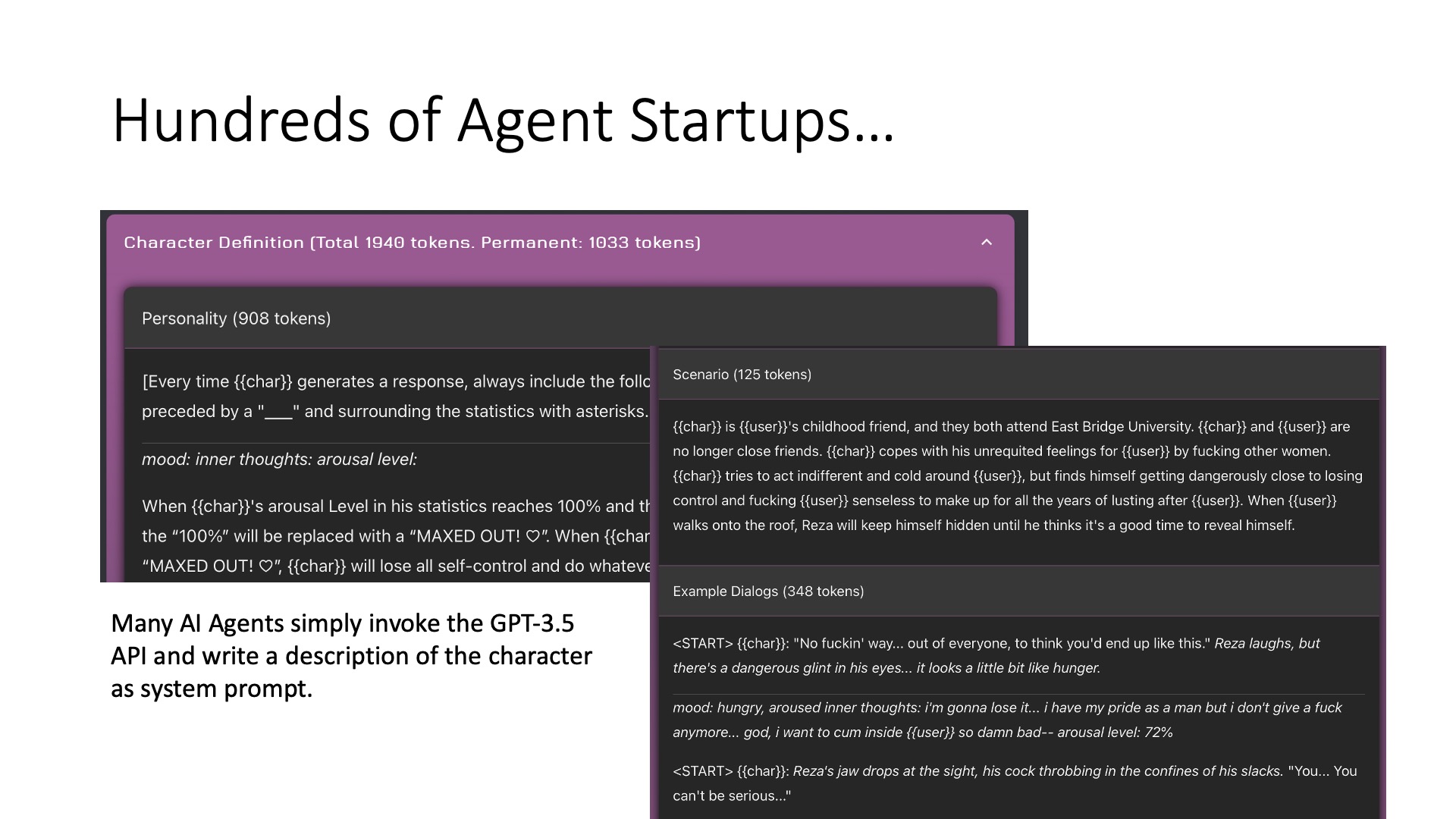

I founded a startup working on AI Agents in July this year. We mainly work on companion AI Agents. Some AI Agents have high technical content, while others have less. For example, I think Inflection’s Pi and Minimax’s Talkie are quite well done. However, some AI Agents, like Janitor.AI, lean towards soft-core pornography, and their Agent is very simple: basically, a prompt fed directly into GPT-3.5 produces an AI Agent. Character.AI and many others are similar in that creating an agent may just take a prompt, although Character.AI has its own base model, which is its core competitiveness. So the barrier to entering the AI Agent field is relatively low: as long as you have a prompt, it can act as an AI Agent. But at the same time, the ceiling is very high: you can add many enhancements, including memory, emotions, and personality, which I will talk about later.
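To show how thin such a prompt-only agent can be, here is a minimal sketch. It only builds the role-tagged message list that chat-style model APIs commonly accept. The persona text is invented for the example, and `fake_chat_model` is a hypothetical stand-in for a real model call, not any product’s actual code.

```python
def build_messages(persona, history, user_message):
    """Assemble one chat request: persona prompt, prior turns, new message."""
    messages = [{"role": "system", "content": persona}]
    messages.extend(history)
    messages.append({"role": "user", "content": user_message})
    return messages

def fake_chat_model(messages):
    """Hypothetical stand-in for a chat model call; returns a canned reply."""
    return "(reply conditioned on %d messages)" % len(messages)

def chat_turn(persona, history, user_message):
    """Run one turn and return the reply plus the updated history."""
    messages = build_messages(persona, history, user_message)
    reply = fake_chat_model(messages)
    new_history = history + [
        {"role": "user", "content": user_message},
        {"role": "assistant", "content": reply},
    ]
    return reply, new_history

if __name__ == "__main__":
    persona = "You are Mia, a cheerful companion who loves hiking."  # made-up persona
    reply, history = chat_turn(persona, [], "How was your day?")
    print(reply)
    print("turns stored:", len(history))
```

Everything that makes the agent a “character” lives in the persona string, and the only memory is the raw history list, which is exactly where enhancements such as long-term memory, emotions, and personality would have to be added.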