Bojie Li

2026-01-16

(The following content was organized by AI based on the recording, with no modifications made)

2026-01-11

This document presents a series of hands-on AI Agent projects at three difficulty levels, from easy to hard. The projects are intended to help students understand the core technologies and design patterns of AI Agents, including tool use, multi-agent collaboration, long-term memory management, externalized learning, and other frontier topics. Each project includes clear objectives, a detailed description of the work, and specific acceptance criteria, so that students can master the key skills needed to build advanced AI Agent systems through practice.

The projects are divided into three levels by difficulty. Students are advised to choose appropriate projects according to their own background and improve their abilities step by step.

Project Index

Difficulty: Easy

- Enhancing mathematical and logical reasoning ability using code generation tools

- Natural language interactive ERP Agent

- Werewolf Agent

Difficulty: Medium

- Personal photo search engine

- Intelligent video editing

- PPT generation Agent

- Book translation Agent

- Agent that collects information from multiple websites simultaneously

Difficulty: Hard

- A user memory that understands you better

- Agent that uses a computer while talking on the phone

- Computer operation Agent that gets more proficient the more you use it

- Agent that can create Agents

2026-01-04

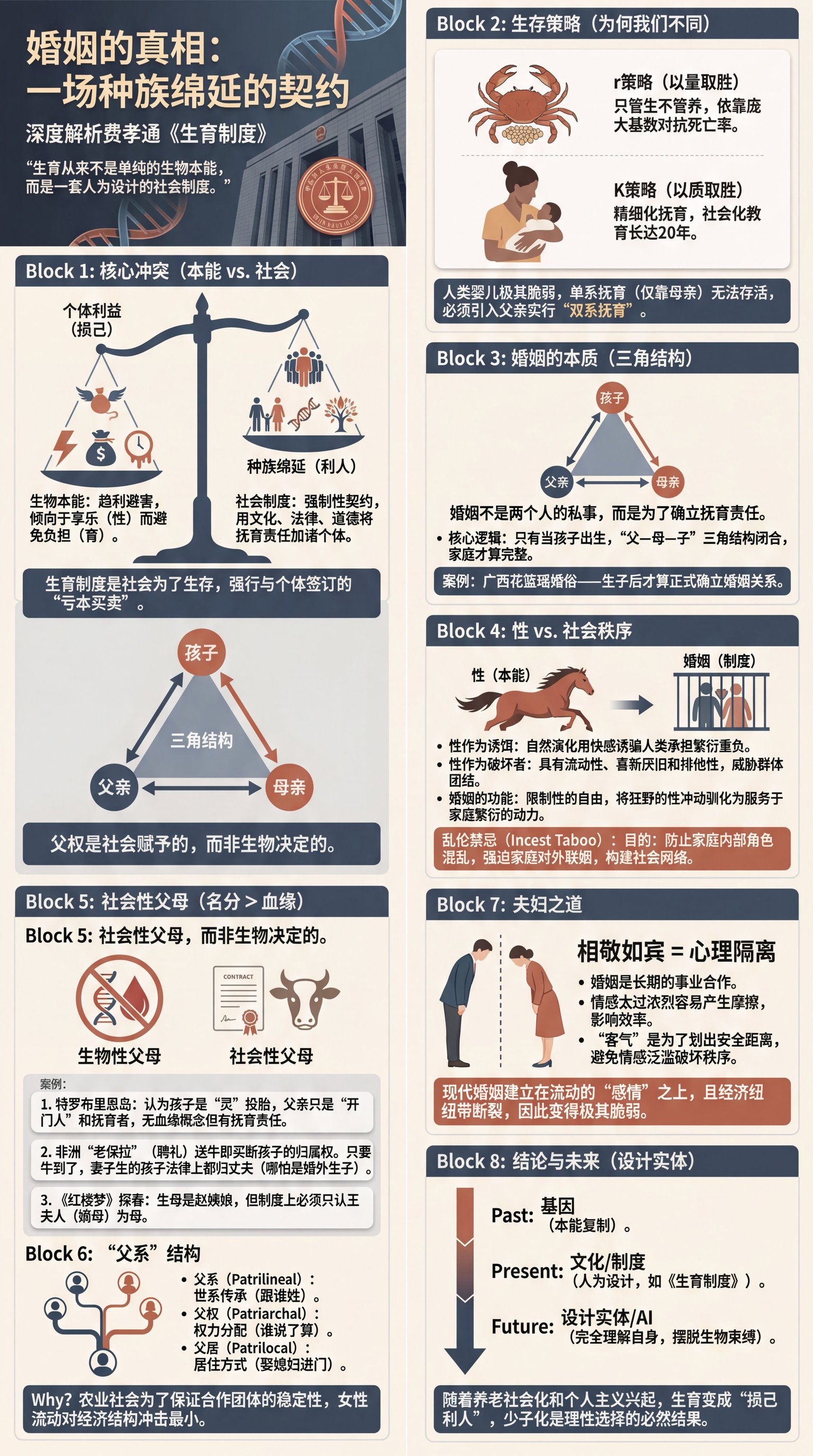

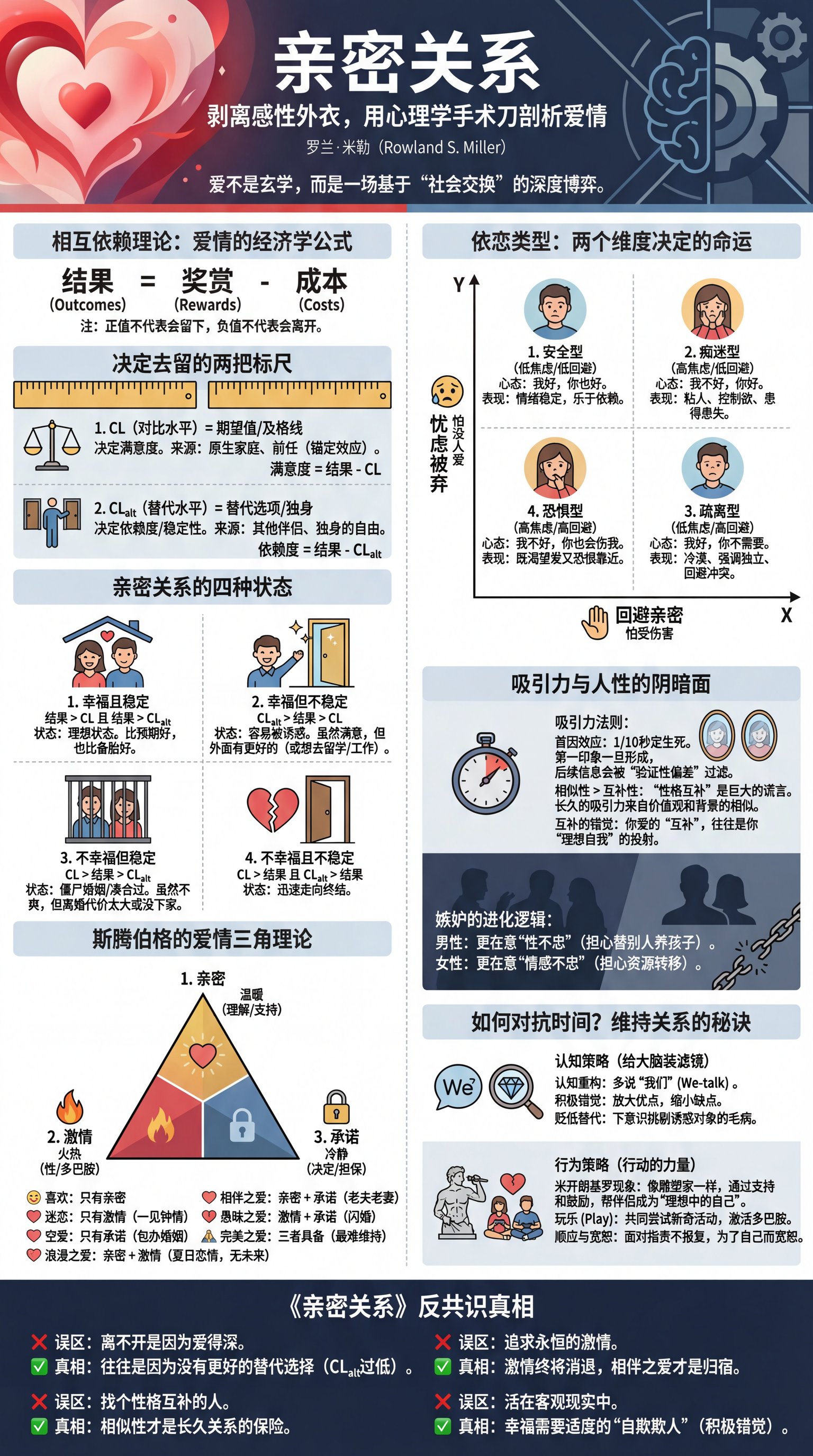

I chatted with an AI for three hours and wrote two sets of reading notes (to test the AI’s capabilities, I deliberately did not make any edits to the AI-generated content).

2025-12-21

This month, the Course Review Community encountered a storage performance issue that lasted nearly two weeks, causing slow service responses and degraded user experience. This post documents how the issue was discovered, investigated, and resolved, covering NFS performance, ZFS logs, Proxmox VE virtualization storage configuration, and more.

2025-12-20

(This article is organized from Anthropic team talks and in-depth discussions during AWS re:Invent 2025)

View Slides (HTML), Download PDF Version (note these slides are not official Anthropic material; I reconstructed them from photos and recordings)

Contents

Claude is already smart enough—intelligence is not the bottleneck, context is. Every organization has unique workflows, standards, and knowledge systems, and Claude does not inherently know any of these. This post compiles Anthropic’s best practices for Context Engineering, covering Skills, Agent SDK, MCP, evaluation systems and other core topics to help you build more efficient AI applications.

- 01 | Skills system - Let Claude master organization-specific knowledge

- 02 | Context Engineering framework - Four pillars for optimizing token utility

- 03 | Context Window & Context Rot - Understand context limits and degradation

- 04 | Tool design best practices - Elements of powerful tools

- 05 | Claude Agent SDK - A framework for production-ready agents

- 06 | Sub-agent configuration best practices - Automatic invocation and permissions

- 07 | MCP (Model Context Protocol) - A standardized protocol for tool integration

- 08 | Evaluations - Why evaluation matters and best practices

- 09 | Lessons from building Coding Agents - What we learned from Claude Code

- 10 | Ecosystem collaboration - How Prompts, MCP, Skills, and Subagents work together

2025-12-20

(This article is the invited talk I gave at the first Intelligent Agent Networks and Application Innovation Conference on December 20, 2025)

View Slides (HTML), Download PDF Version

Abstract

Today’s agent–human interaction is centered on text, but that deviates from natural human cognition. From first principles, humans are best at producing output through speech (speaking is three times faster than typing) and best at taking in input through vision. Vision here means intuitive UI, not text.

The first step is achieving real‑time voice interaction. The traditional serial VAD–ASR–LLM–TTS architecture must wait for the user to finish speaking before it can start “thinking,” and it cannot output anything until the thinking is done. With an Interactive ReAct continuous‑thinking mechanism, the agent can listen, think, and speak at the same time: it starts thinking while the user is talking and keeps deepening its reasoning while it is speaking, making full use of every idle gap.
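As a rough illustration of why the overlap matters, the toy latency model below compares the delay between the end of the user's speech and the first audio of the reply under the two designs. The function names and all timings are hypothetical, and the overlapped case optimistically assumes that thinking done on partial transcripts stays valid. It is a back-of-envelope sketch, not a description of any real system.

```python
def serial_delay_ms(asr_ms: int, think_ms: int, tts_ms: int) -> int:
    """Serial VAD-ASR-LLM-TTS: every stage starts only after the user stops."""
    return asr_ms + think_ms + tts_ms


def overlapped_delay_ms(speech_ms: int, asr_ms: int, think_ms: int, tts_ms: int) -> int:
    """Thinking starts while the user is still talking.

    Assumption (optimistic): thinking done on partial transcripts is fully
    reusable, so only the part that did not fit into the speech remains.
    """
    remaining_think_ms = max(0, think_ms - speech_ms)
    return asr_ms + remaining_think_ms + tts_ms


if __name__ == "__main__":
    # Hypothetical timings, not measurements.
    speech, asr, think, tts = 4000, 300, 2000, 200
    print("serial:    ", serial_delay_ms(asr, think, tts), "ms")
    print("overlapped:", overlapped_delay_ms(speech, asr, think, tts), "ms")
```

With these made-up numbers the serial pipeline answers 2500 ms after the user stops, while the overlapped one answers after 500 ms, because all of the thinking fits inside the time the user spent talking.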

The second step is to expand the observation space and action space on top of real‑time voice. By extending the Observation Space (from voice input to Computer Use–style visual perception) and the Action Space (from voice output to UI generation and computer control), the agent can operate existing computer/phone GUIs while on a call, and generate dynamic UI to interact with the user. One implementation path for generative UI is generating front‑end code; Claude Sonnet 4.5 has already reached the threshold for this. Another path is generating images; Nano Banana Pro is also close to this threshold.

This is exactly the path to realizing Samantha from the movie Her. As an operating system, Samantha needs six core capabilities: real‑time voice conversation with the user, making phone calls and handling tasks on the user’s behalf, operating traditional computers and phones for the user, bridging data across the user’s existing devices and online services, having her own generative UI, and possessing powerful long‑term user memory for personalized proactive services.

2025-12-19

(This article is the invited talk I gave at AWS re:Invent 2025 Beijing Meetup)

View Slides (HTML), Download PDF Version

Thanks to AWS for the invitation, which gave me the opportunity to attend AWS re:Invent 2025. During this trip to the US, I not only attended this world-class tech conference, but was also fortunate enough to have in-depth conversations with frontline practitioners from top Silicon Valley AI companies such as OpenAI, Anthropic, and Google DeepMind. Most of the viewpoints were cross-validated by experts from different companies.

From the re:Invent venue in Las Vegas, to NeurIPS in San Diego, and then to AI companies in the Bay Area, more than ten days of intensive exchanges taught me a great deal, mainly in the following areas:

- Practical experience of AI-assisted programming (Vibe Coding): An analysis of how efficiency gains differ across scenarios, from 3–5x gains in startups to why the effect is limited in big tech and research institutions.

- Organization and resource allocation in foundation model companies: An analysis of the strengths and weaknesses of companies like Google, OpenAI, xAI, and Anthropic, including compute resources, compensation structure, and the current state of collaboration between model teams and application teams.

- A frontline perspective on Scaling Law: Frontline researchers generally believe that Scaling Law is far from over, which diverges from the public statements of top scientists such as Ilya Sutskever and Richard Sutton. Engineering approaches can address sample efficiency and generalization issues, and there is still substantial room for improvement in foundation models.

- Scientific methodology for application development: An introduction to the rubric-based evaluation systems that top AI application companies widely adopt.

- Core techniques of Context Engineering: A discussion of three major techniques for coping with context rot: dynamic system prompts, dynamic loading of prompts (skills), and sub-agents plus context summarization. It also covers the design pattern of using the file system as the agent interaction bus.

- Strategic choices for startups: Based on real-world constraints of resources and talent, an analysis of the areas startups should avoid (general benchmarks) and the directions they should focus on (vertical domains + context engineering).
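To make the "dynamic loading of prompts (skills)" idea in the list above concrete, here is a minimal sketch of one way it could work: only a one-line description of each skill stays in the system prompt, and the full instructions enter the context when a skill is requested. The `Skill` class, the `build_system_prompt` and `load_skill` helpers, and the two example skills are all hypothetical and are not taken from the talk or from any specific SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Skill:
    name: str
    description: str  # one line that always stays in the system prompt
    body: str         # full instructions, loaded only when requested


def build_system_prompt(base: str, skills: dict[str, Skill]) -> str:
    """List only skill names and descriptions; bodies stay out of context."""
    lines = [base, "Available skills (request one by name to load it):"]
    lines += [f"- {s.name}: {s.description}" for s in skills.values()]
    return "\n".join(lines)


def load_skill(name: str, skills: dict[str, Skill]) -> str:
    """Return the full body of one skill so it can be appended to the context."""
    return skills[name].body


# Hypothetical skills for illustration only.
SKILLS = {
    "release-notes": Skill(
        "release-notes",
        "Write release notes in the house style.",
        "Step 1: group changes by component.\nStep 2: lead with breaking changes.",
    ),
    "sql-review": Skill(
        "sql-review",
        "Review SQL migrations for safety.",
        "Check for table locks, missing indexes, and irreversible drops.",
    ),
}

if __name__ == "__main__":
    prompt = build_system_prompt("You are a helpful engineering assistant.", SKILLS)
    print(prompt)
    print(load_skill("sql-review", SKILLS))
```

The point of the design is that the prompt grows with the number of skills by one line each, while the long instructions cost tokens only in the turns that actually need them.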

2025-12-18

In the previous article, “Set Up an Install-Free IKEv2 Layer-3 Tunnel to Bypass Cursor Region Restrictions”, we showed how to use an IKEv2 layer-3 tunnel to bypass the geo-restrictions of software such as Cursor. Although the IKEv2 solution requires no client installation, layer-3 tunnels have some inherent performance issues.

This article introduces a more efficient alternative: Clash Verge’s TUN mode together with the VLESS protocol, which stays transparent to applications while avoiding the performance overhead of layer-3 tunnels.

Performance Pitfalls of Layer-3 Tunnels

The IKEv2 + VLESS/WebSocket architecture from the previous article has three main performance issues:

- TCP over TCP: application-layer TCP is encapsulated and transported inside the tunnel’s TCP (WebSocket), so two layers of TCP state machines interfere with each other

- Head-of-Line Blocking: multiple application connections are multiplexed over the same tunnel; packet loss on one connection blocks all connections

- QoS Limits on Long Connections: a single long-lived connection is easily throttled by middleboxes on the network
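The head-of-line blocking item above can be illustrated with a small simulation. It is a toy model under stated assumptions: the `delivery_times` function and the packet trace are invented for illustration, and the only thing modeled is in-order delivery, either across one shared tunnel or separately per connection.

```python
def delivery_times(packets, shared_tunnel):
    """Return the time each packet is handed to its application.

    packets: list of (connection, arrival_ms) in the order they were sent.
    shared_tunnel: True  -> one ordered stream carries every connection, so a
                            packet waits for all packets sent before it;
                   False -> each connection is ordered independently.
    """
    latest_arrival = {}  # ordered stream -> latest arrival time seen so far
    delivered = []
    for conn, arrival_ms in packets:
        stream = "tunnel" if shared_tunnel else conn
        latest_arrival[stream] = max(latest_arrival.get(stream, 0), arrival_ms)
        delivered.append((conn, latest_arrival[stream]))
    return delivered


if __name__ == "__main__":
    # Hypothetical trace: connection A's second packet is lost and its
    # retransmission arrives at 250 ms; connection B loses nothing.
    trace = [("A", 10), ("B", 11), ("A", 250), ("B", 13), ("B", 14)]
    print("shared tunnel:  ", delivery_times(trace, shared_tunnel=True))
    print("per-connection: ", delivery_times(trace, shared_tunnel=False))
```

In the shared-tunnel case, B's last two packets are held until 250 ms even though they arrived at 13 ms and 14 ms, because they sit behind A's retransmitted packet in the single ordered stream. With per-connection ordering they are delivered as soon as they arrive.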

2025-10-24

Reinforcement learning pioneer Richard Sutton says that today’s large language models are a dead end.

This sounds shocking. As the author of “The Bitter Lesson” and the 2024 Turing Award winner, Sutton is the one who believes most strongly that “more compute + general methods will always win,” so in theory he should be full of praise for large models like GPT-5, Claude, and Gemini. But in a recent interview, Sutton bluntly pointed out: LLMs merely imitate what humans say; they don’t understand how the world works.

The interview, hosted by podcaster Dwarkesh Patel, sparked intense discussion. Andrej Karpathy later responded in writing and further expanded on the topic in another interview. Their debate reveals three fundamental, often overlooked problems in current AI development:

First, the myth of the small-world assumption: Do we really believe that a sufficiently large model can master all important knowledge in the world and thus no longer needs to learn? Or does the real world follow the large-world assumption—no matter how big the model is, it still needs to keep learning in concrete situations?

Second, the lack of continuous learning: Current model-free RL methods (PPO, GRPO, etc.) only learn from sparse rewards and cannot leverage the rich feedback the environment provides. This leads to extremely low sample efficiency for Agents in real-world tasks and makes rapid adaptation difficult.

Third, the gap between Reasoner and Agent: OpenAI divides AI capabilities into five levels, from Chatbot to Reasoner to Agent. But many people mistakenly think that turning a single-step Reasoner into a multi-step one makes it an Agent. The core difference between a true Agent and a Reasoner is the ability to learn continuously.

This article systematically reviews the core viewpoints from those two interviews and, combined with our practical experience developing real-time Agents at Pine AI, explores how to bridge this gap.

2025-10-16

View Slides (HTML), Download PDF Version

Contents

- 01 | The Importance and Challenges of Memory - Personalization Value · Three Capability Levels

- 02 | Representation of Memory - Notes · JSON Cards

- 03 | Retrieval of Memory - RAG · Context Awareness

- 04 | Evaluation of Memory - Rubric · LLM Judge

- 05 | Frontier Research - ReasoningBank

Starting from personalization needs → Understanding memory challenges → Designing storage schemes → Implementing intelligent retrieval → Scientific evaluation and iteration