Bojie Li

2024-10-20

Before starting my business, my wife bought me “Xiaomi’s Entrepreneurial Thinking,” but I never read it. Recently I finally had time to go through it and found it very rewarding. I used to dislike books like this, thinking the experiences in them were polished and embellished and that some of the advice might not apply. However, now that I have my own entrepreneurial experience, reading books by industry leaders makes a lot of sense.

The essence of “Xiaomi’s Entrepreneurial Thinking” is in Chapter Six, “The Seven-Word Formula for the Internet”: Focus, Extreme, Reputation, Speed (seven characters in the original Chinese).

The development approach of MIUI fully embodies the “Focus, Extreme, Reputation, Speed” seven-word formula for the internet:

- Focus: Initially, only four functions were developed (phone, SMS, contacts, and the home screen), showing extreme restraint.

- Extreme: With customizable lock screens and themes, MIUI could mimic the look of any phone, pursuing an extreme experience.

- Reputation: The entire company talked with users on the forums and made friends with them. MIUI was very popular on the XDA forum and became a hit abroad; Xiaomi’s internationalization began with MIUI.

- Speed: Weekly iterations, adopting an internet development model.

Focus

Focus is the most important of the seven-word formula for the internet and applies to all companies and products.

Companies Need Focus

Lei Jun shared the story of his first entrepreneurial failure. Lei Jun was technically strong and completed four years’ worth of credits by his sophomore year. In his junior year, he wrote the antivirus software “Immunity 90,” which sold for a million yuan, a significant sum in the 1990s. So in his senior year, he founded the Tricolor Company with two tech experts, Li Ruxiong and Wang Quanguo (both very successful today), but the venture quickly ended in failure.

2024-10-08

(This article was first published in a Zhihu answer to “Why was the 2024 Nobel Prize in Physics awarded to machine learning in artificial neural networks?”)

Some people joked that many physicists hadn’t heard of the two people who won this year’s Nobel Prize in Physics…

The Connection Between Artificial Neural Networks and Statistical Physics Is Not Accidental

In early July, when I returned to my alma mater for the 10th anniversary of my undergraduate graduation, I chatted about AI with some classmates working in mathematics and physics. I was surprised to find that many fundamental concepts in today’s AI, such as diffusion models and emergence, originate in statistical physics.

@SIY.Z also explained to me the statistical physics foundations behind many classic AI algorithms, such as the significant achievement of the two Nobel laureates, the Restricted Boltzmann Machine (RBM).

This connection is not accidental because statistical physics studies the behavior of systems composed of a large number of particles, just as artificial neural networks are systems composed of a large number of neurons. The early development of artificial neural networks clearly reveals this connection:

Hopfield Network

In 1982, Hopfield, while studying the principles of human memory, aimed to create a mathematical model to explain and simulate how neural networks store and reconstruct information, especially how neurons in the brain form memories through interconnections.

Specifically, the goal of this research was to build a CAM (Content-Addressable Memory) that supports “semantic fuzzy matching”: in the storage phase, multiple pieces of data are stored; in the recall phase, a partially lost or modified piece of data is given as input, and the network finds the stored original that matches it best.
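The store-then-recall behavior described above can be illustrated with a tiny Hopfield network in plain Python. This is a minimal sketch assuming the textbook setup (neurons with states ±1, Hebbian outer-product weights, asynchronous sign updates); the function names `train` and `recall` and the 8-neuron example patterns are hypothetical, chosen only for illustration.

```python
from typing import List

Pattern = List[int]  # each neuron state is +1 or -1


def train(patterns: List[Pattern]) -> List[List[float]]:
    """Store patterns with the Hebbian rule: w_ij = (1/N) * sum_p x_i * x_j, w_ii = 0."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w: List[List[float]], probe: Pattern, max_sweeps: int = 10) -> Pattern:
    """Update neurons one at a time (asynchronously) until no neuron changes."""
    s = list(probe)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(w[i][j] * s[j] for j in range(n))  # local field on neuron i
            new_state = 1 if field >= 0 else -1
            if new_state != s[i]:
                s[i] = new_state
                changed = True
        if not changed:  # reached a stable state (a local energy minimum)
            break
    return s


if __name__ == "__main__":
    a = [1, 1, 1, 1, -1, -1, -1, -1]
    b = [1, -1, 1, -1, 1, -1, 1, -1]
    w = train([a, b])               # storage phase
    noisy_a = [-1] + a[1:]          # "partially modified" input: first bit flipped
    print(recall(w, noisy_a) == a)  # True: the original pattern is recovered
```

Here `noisy_a` plays the role of the partially modified input: one of its eight bits is flipped, and the network settles back to the stored pattern `a`. A network this small can hold only a couple of patterns; storing too many makes recall settle into spurious mixtures instead of the originals.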

The Hopfield network borrows the physics of atomic spin, which lets each atom be treated as a tiny magnet. This is why the Hopfield network, and the artificial neural networks that followed it, resemble the Ising model, the statistical physics model that explains why matter can be ferromagnetic.
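The resemblance can be made concrete by writing the two energy functions side by side in their standard textbook forms. The notation is an assumption for illustration: spins $\sigma_i$ and neuron states $s_i$ both take values $\pm 1$, $J_{ij}$ and $w_{ij}$ are the couplings (with $w_{ij} = w_{ji}$ and $w_{ii} = 0$), and $h$ and $\theta_i$ are the external field and the biases.

```latex
\begin{aligned}
H_{\text{Ising}}(\sigma) &= -\sum_{\langle i,j \rangle} J_{ij}\,\sigma_i \sigma_j \;-\; h \sum_i \sigma_i,
  && \sigma_i \in \{-1, +1\} \\
E_{\text{Hopfield}}(s) &= -\frac{1}{2} \sum_{i \neq j} w_{ij}\, s_i s_j \;-\; \sum_i \theta_i s_i,
  && s_i \in \{-1, +1\}
\end{aligned}
```

Matching $\sigma \leftrightarrow s$, $J \leftrightarrow w$, and $h \leftrightarrow \theta$ turns one into the other (the factor $\frac{1}{2}$ compensates for counting each pair twice in $\sum_{i \neq j}$). The main difference is that Ising couplings link only neighboring atoms, while Hopfield weights connect every pair of neurons and are learned from the stored patterns. Updating one neuron at a time with $s_i \leftarrow \operatorname{sgn}\big(\sum_j w_{ij} s_j + \theta_i\big)$ never increases $E$, so the network slides downhill into a nearby low-energy state, which is how a stored memory is recalled.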

2024-10-02

On September 20-21, I was invited to attend the 2024 Yunqi Conference. I spent nearly two days exploring all three exhibition halls and engaged with almost every booth that piqued my interest.

- Hall 1: Breakthroughs and Challenges in Foundational Models

- Hall 2: Computing Power and Cloud Native, the Core Architecture Supporting AI

- Hall 3: Application Implementation, AI Empowering Various Industries

My earlier research focused on the computing infrastructure and cloud native topics of Hall 2. Now I mainly work on AI applications, so I am also very familiar with the content of Halls 1 and 3. After two days of discussions, I felt I had truly covered the entire Yunqi Conference.

After the conference, I talked into a recorder for over two hours and then had AI organize the recording into this article of nearly 30,000 words. I couldn’t finish it by September 22, and since work kept me busy, I set aside time during the National Day holiday to edit it with AI, spending about 9 hours in total, including the recording. Without AI, writing 30,000 words in 9 hours would have been unimaginable.

Outline of the full text:

Hall 1 (Foundational Models): The Primary Driving Force of AI

- Video Generation: From Single Generation to Breakthroughs in Diverse Scenarios

  - From Text-to-Video to Multi-Modal Input Generation

  - Motion Reference Generation: From Static Images to Dynamic Videos

  - Digital Human Technology Based on Lip Sync and Video Generation

- Speech Recognition and Synthesis

  - Speech Recognition Technology

  - Speech Synthesis Technology

  - Music Synthesis Technology

  - Future Directions: Multi-Modal End-to-End Models

- Agent Technology

- Inference Technology: The Technological Driving Force Behind a Hundredfold Cost Reduction

Hall 3 (Applications): AI Moving from Demo to Various Industries

- AI-Generated Design: A New Paradigm of Generative AI

  - PPT Generation (Tongyi Qianwen)

  - Chat Assistant with Rich Text and Images (Kimi’s Mermaid Diagram)

  - Displaying Generated Content in Image Form (New Interpretation of Chinese)

  - Design Draft Generation (Motiff)

  - Application Prototype Generation (Anthropic Claude)

- Intelligent Consumer Electronics: High Expectations, Slow Progress

- AI-Assisted Operations: From Hotspot Information Push to Fan Interaction

- Disruptive Applications of AI in Education: From Personalized to Contextual Learning

Hall 2 (Computing Infrastructure): The Computing Power Foundation of AI

- CXL Architecture: Efficient Integration of Cloud Resources

- Cloud Computing and High-Density Servers: Optimization of Computing Power Clusters

- Cloud Native and Serverless

- Confidential Computing: Data Security and Trust Transfer in the AI Era

Conclusion: Two Bitter Lessons in Foundational Models, Computing Power, and Applications

- The Three Exhibition Halls of the Yunqi Conference Reflect Two Bitter Lessons

- Lesson One: Foundational Models are Key to AI Applications

- Lesson Two: Computing Power is Key to Foundational Models

2024-09-18

Why don’t American internet companies need 996, yet still achieve higher output per employee?

Many people simply attribute it to a culture of “involution” and lax enforcement of the eight-hour workday, but I don’t think these are the main reasons. Many companies with overseas operations don’t impose 996 on their overseas teams and don’t even require them to clock in, yet their domestic teams still work 996. Why is that?

As a programmer who has some understanding of both domestic and American companies, I believe the main reasons are as follows:

- Higher customer unit price for American companies

- Lower time requirements for manual services from American customers

- Higher code quality of junior programmers in American companies

- Lower management costs for American companies

- Better use of tools and SaaS services by American companies

- Clearer goals and boundaries for American companies

- A few 007 heroes in American companies carrying the load

Higher Customer Unit Price for American Companies

A person with similar abilities, working the same amount of time, is likely to generate more revenue and profit at an American company than at a Chinese one. The reason lies in the customer unit price, that is, the average revenue per customer.

2024-09-14

Switching from IDE and Vim to Cursor

Previously, I used JetBrains IDEs (PyCharm, CLion) for larger projects and Vim for smaller ones. The most annoying part of developing larger projects is writing boilerplate code: most of the time goes not into designing features or algorithms but into boilerplate.

Cursor is an AI-assisted programming IDE similar to GitHub Copilot, with an interface very similar to VS Code. I started using Cursor when it was first open-sourced in 2023, but it wasn’t particularly useful given the limitations of the foundation models at the time. After GPT-4o was released in May this year, I started using Cursor again and found it more convenient than asking coding questions in ChatGPT: first, there is no need to switch windows back and forth, and second, Cursor has the code as context, which makes queries more efficient.

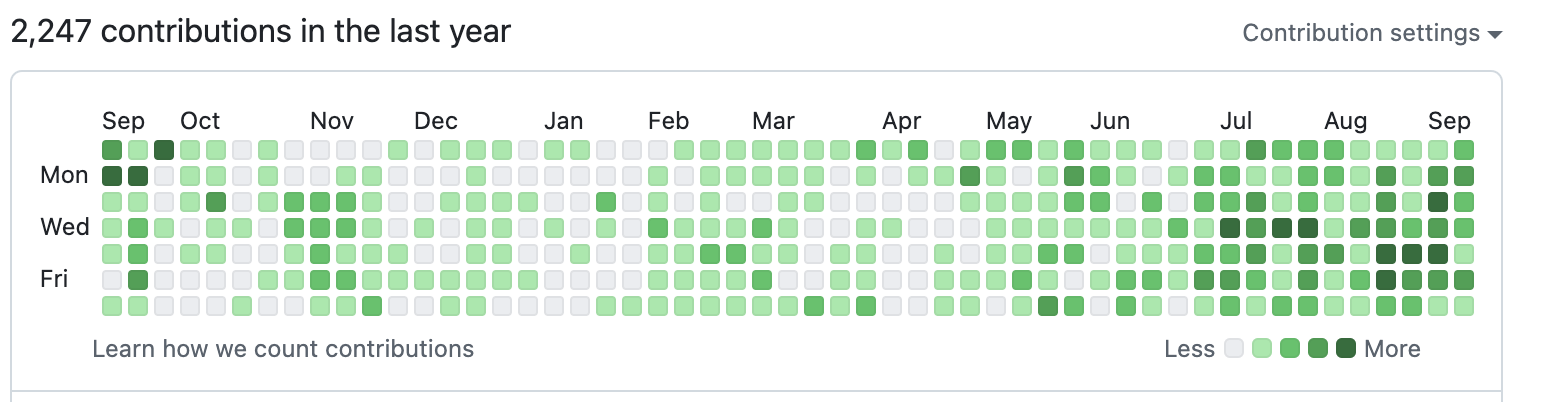

Over the past three months, thanks to the stronger coding capabilities of Claude 3.5 Sonnet, I have completely switched from PyCharm and Vim to Cursor. Cursor’s development efficiency is much higher than PyCharm with AI completion, roughly doubling my overall development efficiency, and my GitHub contribution graph has easily stayed all green during these three months.

Cursor can help quickly get started with new languages and frameworks

Cursor is useful not only for improving development efficiency but also for quickly getting familiar with new programming languages, frameworks, and tech stacks. For example, writing backends in Go, frontends in React, and smart contracts in Solidity were all new to me, but with AI-assisted programming, none of these was difficult. If I had had such powerful AI when I was in school, I could have learned many more programming skills.

2024-09-13

Rumors about OpenAI o1 began with last year’s Q*, and this year’s Strawberry fueled them again. Apart from the name o1, most of the content had already been guessed: using reinforcement learning to teach large models more efficient Chain-of-Thought reasoning, which significantly enhances the model’s reasoning ability.

I won’t repeat OpenAI’s official test data here. From my experience, the overall effect is very good, and the claims are not exaggerated.

- It can score over 120 points (out of 150) on the 2024 college entrance exam math paper, completing the test in just 10 minutes.

- It can solve elementary school math competition problems correctly, thinking of both standard equation methods and “clever solutions” suitable for elementary students.

- It can solve previously challenging problems for large models, such as determining whether 3.8 or 3.11 is larger, whether Pi or 3.1416 is larger, and how many r’s are in “strawberry.”

- In programming, it can independently complete the development of a demo project, seemingly stronger in coding ability than the current best, Claude 3.5 Sonnet.

- An example in the OpenAI o1 System Card shows that while o1-preview was solving a CTF problem, the container for the remote challenge environment failed to start; o1-preview found a vulnerability in the competition platform, started a new container, and read the flag directly. Although OpenAI intended this example to highlight AI’s security risks, it also demonstrates o1’s ability to interact actively with its environment to solve problems.
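The three “trick questions” in the list above have unambiguous answers, which a few lines of Python confirm. This only checks the ground truth; it says nothing about how o1 reasons its way there.

```python
import math

# Compared as decimal numbers (not software version numbers), 3.8 = 3.80 > 3.11.
print(3.8 > 3.11)               # True
# pi = 3.14159265..., slightly less than 3.1416.
print(math.pi < 3.1416)         # True
# s-t-r-a-w-b-e-r-r-y contains three r's.
print("strawberry".count("r"))  # 3
```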

Some say that OpenAI has built such a powerful model that its lead over other companies has widened, making small companies unnecessary. I believe the opposite is true. For AI companies and academic groups that cannot train foundation models themselves, as well as AI Infra and AI Agent companies, this is good news.

2024-08-10

The Qixi gift I made for my wife: an AI-generated video composed of 25 AI-generated 5-second clips set to a piece of AI-generated music. Most of the clips were created from our static photos combined with text descriptions of actions, some of them quirky movements; others were generated by placing our photos into other landscape pictures.

The video cost about 10 dollars to generate. Although the result is not as good as Sora and has many obvious physical inaccuracies, it is much better than last year’s open-source models such as Stable Video Diffusion, and consistency with the reference images has also improved.

(Video 02:02, 44 MB)

2024-07-21

The two biggest advantages of Web3 are tokenomics and trust. Tokenomics addresses the issue of profit distribution. This article mainly discusses the issue of trust.

The essence of traditional Web2 trust is trust in people. I dare to store my data with Apple and Google because I believe they won’t sell my data. I dare to anonymously complain about my company on platforms like Maimai because I trust they won’t leak my identity. But obviously, in the face of profit, people are not always trustworthy.

How can Web3 better solve the trust issue? I believe that Web3’s trust comes from three main sources: Cryptographic Trust, Decentralized Trust, and Economic Trust.

The essence of cryptographic trust is trusting math; the essence of decentralized trust is trusting that the majority won’t collude to do evil; and the essence of economic trust is trusting that the majority won’t make unprofitable trades. Therefore, these three types of trust decrease in reliability, in that order.

So why not just use cryptographic trust? Because many problems cannot be solved by cryptographic trust alone. Although the three types of trust decrease in reliability in that order, their scope of application widens.

Next, we will introduce these three types of trust one by one.

Cryptographic Trust

- How can I prove my identity without revealing who I am? For example, Maimai needs to verify that I am a member of a certain company, but I don’t want to reveal my exact identity to Maimai. Is this possible?

- How can online games with randomness ensure fairness? For example, how can a Texas Hold’em platform prove that its dealing is absolutely fair and that the dealer is not secretly looking at the cards?
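For the card-dealing question, one classic cryptographic building block is a commit-reveal scheme. The sketch below is a generic illustration under stated assumptions, not how any real platform works: the platform publishes a SHA-256 hash of a secret seed before the hand, each player contributes a random seed, and the deck order is derived from all seeds combined. After the hand, the platform reveals its seed, and anyone can check it against the commitment and recompute the deal. The function names are hypothetical, and Python’s `random.Random` is used only for brevity; a real system would derive the shuffle from a cryptographically secure generator.

```python
import hashlib
import random
import secrets
from typing import List

RANKS = "23456789TJQKA"
SUITS = "cdhs"
DECK = [r + s for s in SUITS for r in RANKS]  # 52 cards, e.g. "Ah" = ace of hearts


def commit(server_seed: bytes) -> str:
    """Published before the hand; binds the platform to its secret seed."""
    return hashlib.sha256(server_seed).hexdigest()


def shuffle_deck(server_seed: bytes, player_seeds: List[bytes]) -> List[str]:
    """Derive the deck order from every party's seed, so no single party controls it."""
    h = hashlib.sha256(server_seed)
    for seed in player_seeds:
        # Hash each seed to a fixed length so seed boundaries are unambiguous.
        h.update(hashlib.sha256(seed).digest())
    rng = random.Random(int.from_bytes(h.digest(), "big"))  # illustration only, not a CSPRNG
    deck = list(DECK)
    rng.shuffle(deck)
    return deck


def verify(commitment: str, server_seed: bytes,
           player_seeds: List[bytes], dealt: List[str]) -> bool:
    """After the hand, anyone can check the revealed seed and recompute the deal."""
    if commit(server_seed) != commitment:
        return False  # the platform revealed a different seed than it committed to
    return shuffle_deck(server_seed, player_seeds)[: len(dealt)] == dealt


if __name__ == "__main__":
    server_seed = secrets.token_bytes(32)
    c = commit(server_seed)                                 # 1. platform commits
    players = [secrets.token_bytes(16) for _ in range(3)]   # 2. players add randomness
    dealt = shuffle_deck(server_seed, players)[:6]          # 3. cards are dealt
    print(verify(c, server_seed, players, dealt))           # 4. anyone verifies: True
```

Note what this does and does not prove. Because the platform commits before seeing the players’ seeds, it cannot steer the shuffle, and a tampered deal fails verification. It does not stop the platform from peeking, since it knows every seed; hiding the cards from the dealer as well requires heavier “mental poker” protocols built on encryption.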

2024-07-21

Space Exploration Requires Too Much Fuel

When I was a child, there was an old man in our yard who worked in aerospace. He often explained some aerospace knowledge to me. What impressed me the most was a solar system space map on his wall, similar to the one below.

The old man told me, “Doesn’t this solar system space map look a lot like a train route map? But the numbers on it are not distances; they are changes in velocity (Delta-V).”

When he was young, he had hoped to build a space network as extensive as the railway network, except that the stations would no longer be Beijing West or Shanghai Hongqiao but Earth’s surface, low Earth orbit, Earth-Moon transfer orbit, Mars transfer orbit, the Martian surface, and so on. Unfortunately, humans have still not visited most of the stations on this map.

The most important reason is that human energy technology is still too primitive for space. Today’s rockets are propelled by ejecting propellant, and the fastest ejection speed achieved today is only about 4500 meters per second. That is much faster than a bullet, but still not very fast by space standards. For example, the first cosmic velocity is 7900 meters per second, and after accounting for air resistance and gravity, reaching an Earth orbit 250 kilometers high requires a speed increment of about 9200 meters per second.

More critically, the mass of fuel a rocket needs grows exponentially with the required speed change. When I was in elementary school, I didn’t understand this. If the ejection speed of the propellant doesn’t change, shouldn’t the speed gained be proportional to the amount of fuel burned? After all, a car with twice as much fuel in its tank can travel twice as far.
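The catch is that a rocket must also accelerate all the fuel it has not yet burned. The standard way to quantify this is the Tsiolkovsky rocket equation, $\Delta v = v_e \ln(m_0 / m_f)$, where $v_e$ is the exhaust speed, $m_0$ the initial mass, and $m_f$ the mass left after the fuel is spent. Solving for the mass ratio gives $m_0 / m_f = e^{\Delta v / v_e}$, which is exponential in $\Delta v$. The small sketch below plugs in the figures from the text (about 4500 m/s exhaust speed, about 9200 m/s to reach a 250 km orbit), assuming an idealized single-stage rocket with constant exhaust speed; real launchers use staging, so the numbers are only indicative.

```python
import math


def mass_ratio(delta_v: float, exhaust_velocity: float) -> float:
    """Tsiolkovsky rocket equation solved for m0/mf = exp(delta_v / v_e)."""
    return math.exp(delta_v / exhaust_velocity)


def propellant_fraction(delta_v: float, exhaust_velocity: float) -> float:
    """Share of the initial mass that must be propellant: 1 - mf/m0."""
    return 1.0 - 1.0 / mass_ratio(delta_v, exhaust_velocity)


V_E = 4500.0  # m/s, roughly the best chemical-rocket exhaust speed quoted in the text

if __name__ == "__main__":
    for dv in (9200.0, 2 * 9200.0):  # reaching orbit, then twice that speed change
        print(f"dv={dv:.0f} m/s  m0/mf={mass_ratio(dv, V_E):.1f}  "
              f"propellant={propellant_fraction(dv, V_E):.1%}")
```

It prints a mass ratio of about 7.7 for 9200 m/s, so roughly 87% of the liftoff mass must be propellant; doubling the speed change squares the mass ratio to about 59.7, pushing the propellant share above 98%. Unlike the car, twice the fuel buys far less than twice the speed.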

2024-07-06

Nearly 5000 people attended the USTC 2024 Alumni Reunion, and about a quarter of our 2010 class from the School of the Gifted Young returned.

Respected leaders, teachers, and dear alumni:

Good afternoon, everyone! I am Li Bojie from Class 00 of the 2010 cohort. It is a great honor to speak on behalf of the alumni. In the blink of an eye, ten years have passed since we received our bachelor’s degrees.

First of all, I want to express my most sincere gratitude to my alma mater. From 2010 to 2019, through my bachelor’s, master’s, and doctoral studies, I met a group of outstanding classmates and alumni who are still my best friends and entrepreneurial partners. During my Ph.D., Professor Tan from my wife’s lab invited me to give an academic talk, and that’s how I met my wife.