Networking

2014-09-29

The Trouble with DHCP

The story begins with the update of the network access management device at USTC.

Public internet access areas now get their IP addresses from a central pool because the address segments previously assigned building by building were no longer enough. A few years ago, most devices on the network were desktops and laptops, which were not powered on all the time; now everyone carries smart devices, often more than one, and connects to Wi-Fi wherever they go. Many locations where a /24 address segment (256 IPs) used to be more than enough began running out of addresses during peak periods. USTC’s IP address pool is limited in size, and centralized allocation solved the address shortage.
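To make the address arithmetic concrete, here is a minimal sketch using Python's standard `ipaddress` module. The prefixes `192.0.2.0/24` and `10.0.0.0/22` are placeholder examples, not USTC's actual ranges, and the helper name `usable_hosts` is made up for illustration.

```python
import ipaddress

def usable_hosts(cidr: str) -> int:
    """Addresses left for clients after removing the network and
    broadcast addresses (only meaningful for prefixes of /30 or shorter)."""
    net = ipaddress.ip_network(cidr)
    return max(net.num_addresses - 2, 0)

# A /24 holds 256 addresses, 254 of which can go to clients.
print(ipaddress.ip_network("192.0.2.0/24").num_addresses)
print(usable_hosts("192.0.2.0/24"))

# A shared /22 pool is four times larger, so a peak in one building
# can borrow addresses that another building is not using.
print(usable_hosts("10.0.0.0/22"))
```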

This should have been good news for everyone, but the new equipment brought new problems. The library's query machines, which boot over the network, freeze after running for a while. The cause is that the IP address allocated during the startup phase of the parent system differs from the one allocated during the startup phase of the subsystem, and that difference comes from a bug in the BRAS network access management device.

2014-04-09

Yesterday, a major security vulnerability named Heartbleed (CVE-2014-0160) was disclosed in OpenSSL. Through the TLS heartbeat extension, an attacker can read up to 64 KB of memory at a time from servers running HTTPS services and may obtain sensitive information held in that memory. Because the vulnerability has existed for two years, popular distributions such as Debian stable (wheezy) and Ubuntu 12.04 LTS, 13.04, and 13.10 are affected, and countless websites that deploy TLS (HTTPS) are exposed.
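The over-read can be illustrated with a toy model. This is not OpenSSL's code: `handle_heartbeat` and the flat `memory` buffer are hypothetical stand-ins for a server that echoes back a heartbeat payload. The only real details assumed are the message layout from RFC 6520 (a 1-byte type, a 2-byte payload length, the payload, then at least 16 bytes of padding) and the fact that a 16-bit length field is what caps the leak at about 64 KB.

```python
import struct

PADDING = 16  # minimum padding length required by RFC 6520

def handle_heartbeat(memory: bytes, record_len: int, check_length: bool):
    """Echo a heartbeat payload from a simulated memory region.

    memory[:record_len] is the received record; whatever follows
    stands in for unrelated data that happens to sit next to it.
    """
    _msg_type, claimed_len = struct.unpack("!BH", memory[:3])
    # The fix: drop the message if the claimed payload length does not
    # fit inside the record that was actually received.
    if check_length and 1 + 2 + claimed_len + PADDING > record_len:
        return None
    # Without the check, this trusts the attacker-supplied length.
    return memory[3:3 + claimed_len]

# The attacker sends a 2-byte payload but claims it is 40 bytes long.
record = struct.pack("!BH", 1, 40) + b"hi" + bytes(PADDING)
memory = record + b"SECRET-KEY-MATERIAL"

leaked = handle_heartbeat(memory, len(record), check_length=False)
print(b"SECRET" in leaked)
print(handle_heartbeat(memory, len(record), check_length=True))
```

With the check disabled, the reply runs past the end of the record into the neighboring bytes; with it enabled, the malformed request is dropped.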

What is SSL heartbeat

2014-03-31

I write this article with mixed feelings. Our SIGCOMM paper, which we rushed to finish by New Year’s Eve, was judged “nothing new” by the reviewers because its architecture was too similar to this lecture published on March 5, and it had to be withdrawn (in fact, our paper contains many technical details not mentioned in the lecture). How great it would have been if Google had published its network virtualization technology two months later!

This lecture was given by Amin Vahdat, Google’s Director of Network Technology, at the Open Networking Summit 2014 (video link), introducing the concept of Google’s network virtualization solution, codenamed Andromeda.

2014-03-03

A few days ago, I gave an internal technical sharing session, and my colleagues' opinions were so varied that I decided to open the discussion to everyone. This article covers Google’s network infrastructure projects, Google Fiber and Google Loon, as well as QUIC, its exploration in network protocols, all of which reflect its ambition to turn the Internet into its own data center.

Wired Network Infrastructure—Google Fiber

The goal of Google Fiber is to bring gigabit internet to ordinary households. At gigabit speed, downloading a 7 GB movie takes only about one minute (if you are still using a mechanical hard drive, it probably cannot write the data that fast). Currently, the project is piloted in only two U.S. cities, Kansas City and Provo. Google Fiber offers three plans in these two cities; taking Kansas City as an example: [1]

- Gigabit network + Google TV: $120/month

- Gigabit network: $70/month

- Free internet: 5 Mbps download, 1 Mbps upload, no monthly fee, but a one-time $300 installation fee is required.

The third plan is slower than the services offered by most telecom operators in the United States, and most households already buy TV service from cable operators. For families that can afford it, comparing the three plans therefore highlights the “value for money” of the second one (gigabit network at $70/month).
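The speed gap between the plans is easy to check with a quick calculation. The sketch below assumes an ideal link with no protocol overhead and decimal units (1 GB = 8 × 10⁹ bits); `download_seconds` is an illustrative helper name.

```python
def download_seconds(size_gb: float, rate_mbps: float) -> float:
    """Ideal transfer time, ignoring protocol overhead and disk speed."""
    bits = size_gb * 8e9              # decimal gigabytes to bits
    return bits / (rate_mbps * 1e6)   # megabits per second to bits per second

gigabit = download_seconds(7, 1000)   # gigabit plan
free_tier = download_seconds(7, 5)    # free plan, 5 Mbps download

print(f"gigabit:   {gigabit:.0f} s")
print(f"free tier: {free_tier / 3600:.1f} h")
```

Under these assumptions, the same 7 GB movie that takes just under a minute on the gigabit plan takes about three hours on the free plan.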

Two questions are worth debating here:

- Can everyone have such a fast gigabit network technically?

- Can the $70/month fee recover the cost of Google building a gigabit network?

2014-02-24

[Note: This article is outdated because many authoritative DNS servers have multiple IP addresses for both domestic and international users. They resolve based on the user’s public IP, so simply distinguishing between domestic and international websites using authoritative DNS server IPs is no longer practical. It is recommended to read the new solution in “Setting Up a Local Anti-Pollution DNS for Intelligent Domestic and International Website Traffic”.]

DNS is a crucial foundational service of the internet, but its importance is often underestimated. For example, in August 2013, the .cn top-level domain servers were hit by a DDoS attack, making .cn domains inaccessible. On January 21, 2014, the root domain servers were polluted by a well-known firewall, making all international domains inaccessible. Many internationally renowned websites are inaccessible in mainland China partly because of DNS pollution, which returns incorrect IP addresses for their domain names.

Setting up an anti-pollution DNS is not as simple as using a VPN to resolve all domain names. There are mainly two issues:

2014-02-23

I helped a friend set up port mapping and ran into two pitfalls because I hadn’t touched iptables for a few months. I’d like to share them here.

2014-02-15

Network virtualization is the creation of a virtual network whose topology differs from that of the physical network. For example, a company with multiple offices around the world may want its internal network to behave as a single whole, which requires network virtualization technology.

Starting from NAT

2013-09-04

Is IP Enough?

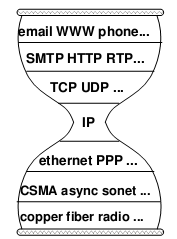

Starting from middle school computer classes, we have been learning about the so-called “OSI seven-layer model” of computer networks, and I remember memorizing a lot of concepts back then. Those rotten textbooks have ruined many computer geniuses. In fact, this model is not difficult to understand: (those who have studied computer networks can skip this)

- Physical Layer: This is the medium for signal transmission, such as optical fiber, twisted pair (the network cable we commonly use), or air (Wi-Fi). Each medium requires its own encoding and modulation methods to convert data into signals for transmission.

- Data Link Layer: Let’s use an analogy. When speaking, you might say something wrong or mishear something, so you need a mechanism to detect errors and ask the other party to repeat (checksum, retransmission); when several people want to speak, you need a way to arbitrate who speaks first and who speaks later (channel allocation, carrier sensing); a speaker needs to signal before and after speaking so that others know when they have finished (framing).

- Network Layer: This was the most controversial layer in the early days of computer networks. Traditional telecom giants believed that a portion of the bandwidth should be reserved along the path between the two endpoints, establishing a “virtual circuit” for their communication. However, during the Cold War, the U.S. Department of Defense required that the network keep working even if several links in the middle were destroyed. Therefore, the “packet switching” scheme was finally adopted, dividing the data into small pieces that are packaged and delivered separately. Just like mailing a letter, to deliver a packet to a distant machine you need to write an address on the envelope, and the address should let the postman know which way to go to reach the next post office (using an ID number as the address would be a bad idea, since it says nothing about location). The IP protocol is the de facto standard network layer protocol, and everyone is familiar with IP addresses.

- Transport Layer: The most important application of computer networks in the early days was to establish a “connection” between two computers: remote login, remote printing, remote file access… The transport layer abstracts the concept of connection based on network layer data packets. The main difference between this “connection” and “virtual circuit” is that the “virtual circuit” reserves a certain bandwidth, while the “connection” is best-effort delivery, without any guarantee of bandwidth. Since most of the traffic on the Internet is bursty, packet switching improves resource utilization compared to virtual circuits. In fact, history often repeats itself. Nowadays, in data centers, due to predictable and controllable traffic, we are returning to the centrally controlled bandwidth reservation scheme.

- Application Layer: Not much needs to be said here. HTTP (which the Web is built on), FTP, and BitTorrent are all application layer protocols.
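The "checksum, retransmission" idea from the data link layer can be sketched in a few lines. This is a toy frame format made up for illustration, not a real link-layer protocol: the sender appends a CRC-32 of the payload (via the standard library's `zlib.crc32`), and the receiver rejects any frame whose checksum does not match, which is the point where a real protocol would request a retransmission.

```python
import zlib

def make_frame(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 trailer to the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")

def check_frame(frame: bytes):
    """Return the payload if the checksum matches, else None."""
    if len(frame) < 4:
        return None
    payload, trailer = frame[:-4], frame[-4:]
    if zlib.crc32(payload).to_bytes(4, "big") != trailer:
        return None  # corrupted: the receiver would ask for a resend
    return payload

frame = make_frame(b"hello")
# Flip one bit in the first byte to simulate noise on the wire.
corrupted = bytes([frame[0] ^ 0x01]) + frame[1:]

print(check_frame(frame))
print(check_frame(corrupted))
```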

2013-08-03

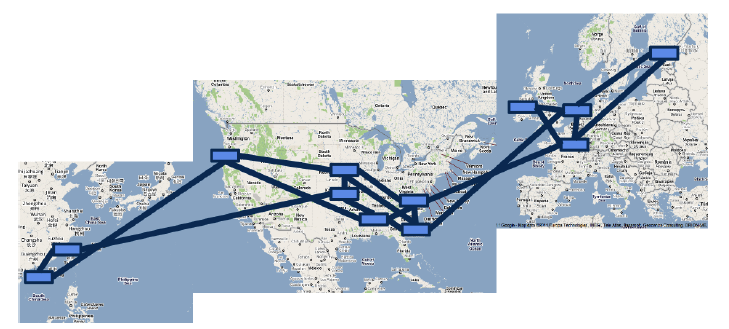

Introduction: This is the second article in the “Entering SIGCOMM 2013” series. For the first time, Google has fully disclosed the design of its inter-data-center wide area network (WAN) and three years of deployment experience, in a paper that may well be rated Best Paper. Why does Google use Software Defined Networking (SDN)? How can SDN be deployed gradually in existing data centers? Through this paper, we can glimpse the tip of the iceberg of Google’s global data center network.

Huge Waste of Bandwidth

As we all know, network traffic always has peaks and troughs, with peak traffic reaching 2 to 3 times the average. To meet bandwidth demand during peak periods, a large amount of bandwidth and expensive high-end routing equipment must be purchased in advance, while average utilization is only 30% to 40%. This greatly increases the cost of the data center.
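The link between peak provisioning and low average utilization is simple arithmetic: if capacity is sized for the peak, average utilization is roughly the inverse of the peak-to-average ratio. The sketch below uses illustrative ratios and an optional `headroom` parameter that is an assumption of this example, not a figure from the paper.

```python
def average_utilization(peak_to_average: float, headroom: float = 0.0) -> float:
    """Average utilization when capacity = peak traffic * (1 + headroom)."""
    return 1.0 / (peak_to_average * (1.0 + headroom))

# Hypothetical peak-to-average ratios, chosen only for illustration.
for ratio in (2.5, 3.0):
    print(f"peak = {ratio}x average -> {average_utilization(ratio):.0%} utilized")
```

A link sized for a peak of 2.5 to 3 times the average sits at roughly 33% to 40% utilization on average, and any extra safety margin pushes that lower.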