My Translation “Illustrated Large Models - Principles and Practice of Generative AI” Is Now Available

[Thank you to all the readers who have sent in more than 50 corrections! Your meticulous reading caught many errors, and I am deeply grateful.]

My translation, “Illustrated Large Models - Principles and Practice of Generative AI” (the Chinese edition of Hands-On Large Language Models), was released in May 2025. You can find it by searching for “Illustrated Large Models” on platforms such as JD.com and Taobao.

Praise for the Book (Chinese Edition)

Many thanks to Yuan Jinhui, founder of Silicon Flow; Zhou Lidong, director of Microsoft Research Asia; Lin Junyang, algorithm lead of Alibaba's Qwen; Li Guohao, founder of the CAMEL-AI.org community; and Zhong Tai, founder of AgentUniverse, for their warm recommendations!

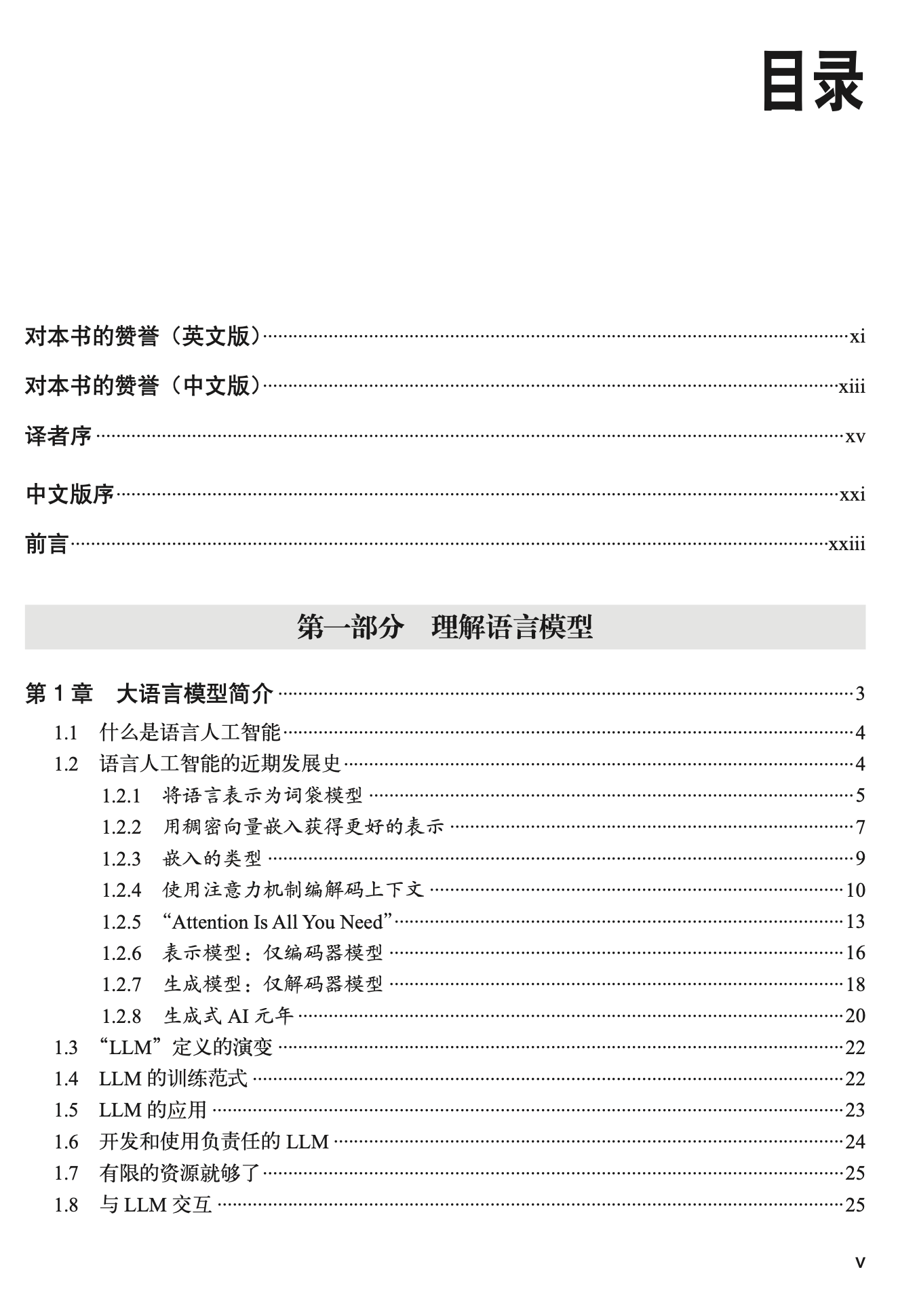

Translator’s Preface

Large models are developing so quickly that, as the saying goes, “a day in AI is a year in the world outside.” Many people feel lost in this flourishing garden of models: unsure which model to use for their application scenarios and unable to predict where models will go in the coming year, they often feel anxious. Yet almost all of today's large models are built on the Transformer architecture, so for all their variety, they share a common root.

This book, “Illustrated Large Models,” is an excellent resource for systematically understanding the basic principles and capability boundaries of Transformers and large models. When the publisher Turing approached me about translating it, I agreed as soon as I saw the author's name: it was Jay Alammar's blog post “The Illustrated Transformer” that first truly helped me understand Transformers (Chapter 3 of this book is an expanded version of that post). Although countless books and articles on large models are available today, few match this book's exquisite illustrations and depth of explanation. The book starts with tokens and embeddings and does not stop at generative models; it also covers representation models, which many overlook. In addition, it covers practical topics such as text classification, text clustering, prompt engineering, retrieval-augmented generation (RAG), and model fine-tuning.

I am honored to have translated this book and to have worked with editor Liu Meiying to bring it to Chinese readers.

Taking the time to read this book and systematically understand the basic principles and capability boundaries of Transformers and large models is like carrying a map and compass on an adventure through the world of large models. With them, we need not worry that a newly released model will make our accumulated engineering work obsolete overnight, and we can build products for the models of the future: once model capabilities catch up, the product can scale immediately.

I hope this book can serve as a sightseeing bus through the garden of large models, giving more people a view of the whole landscape. Then the ever-expanding capabilities of large models become a visual feast rather than a monster devouring everything, and we have the chance to stand at the forefront of AI, realize more of our dreams, and gain more freedom.

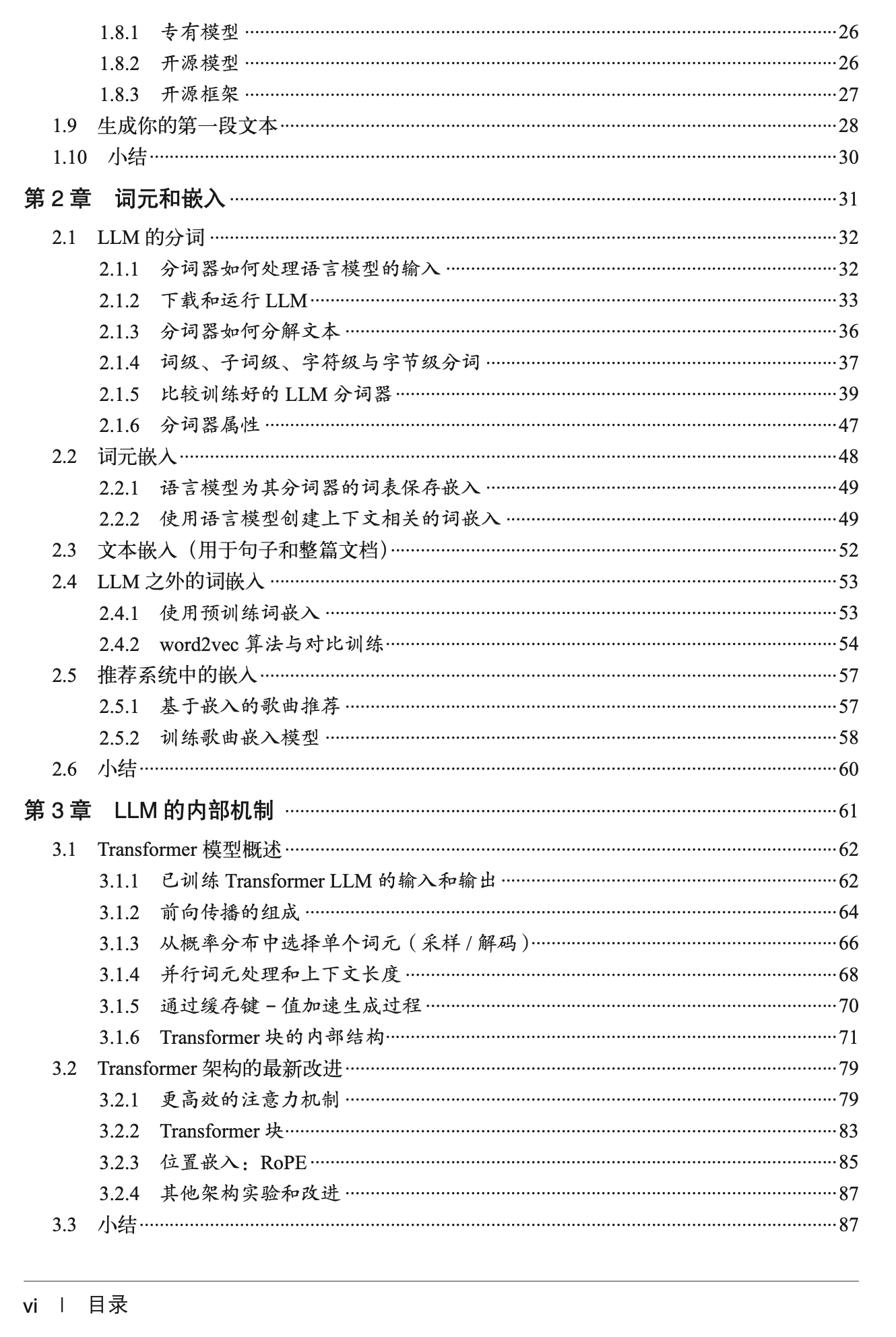

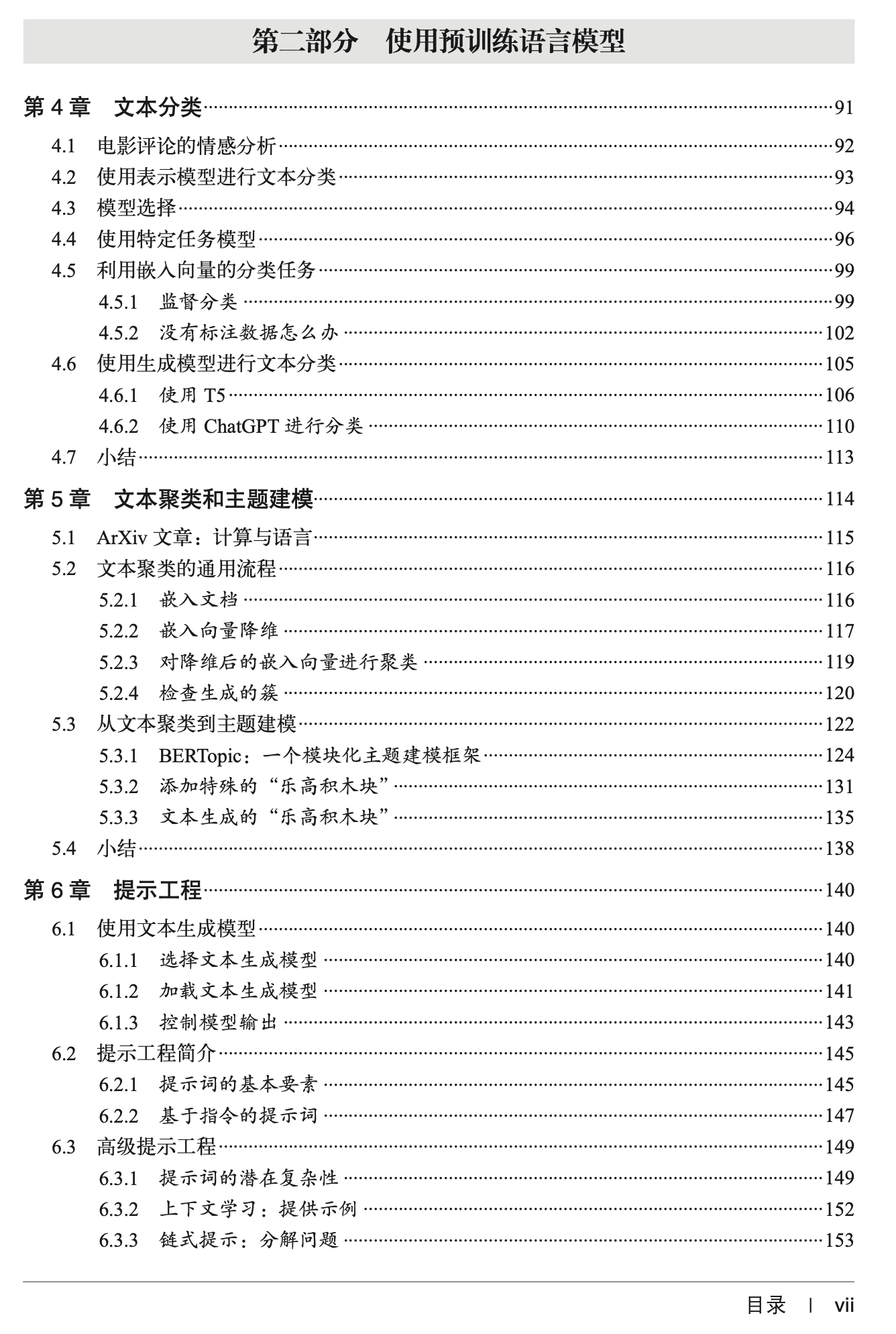

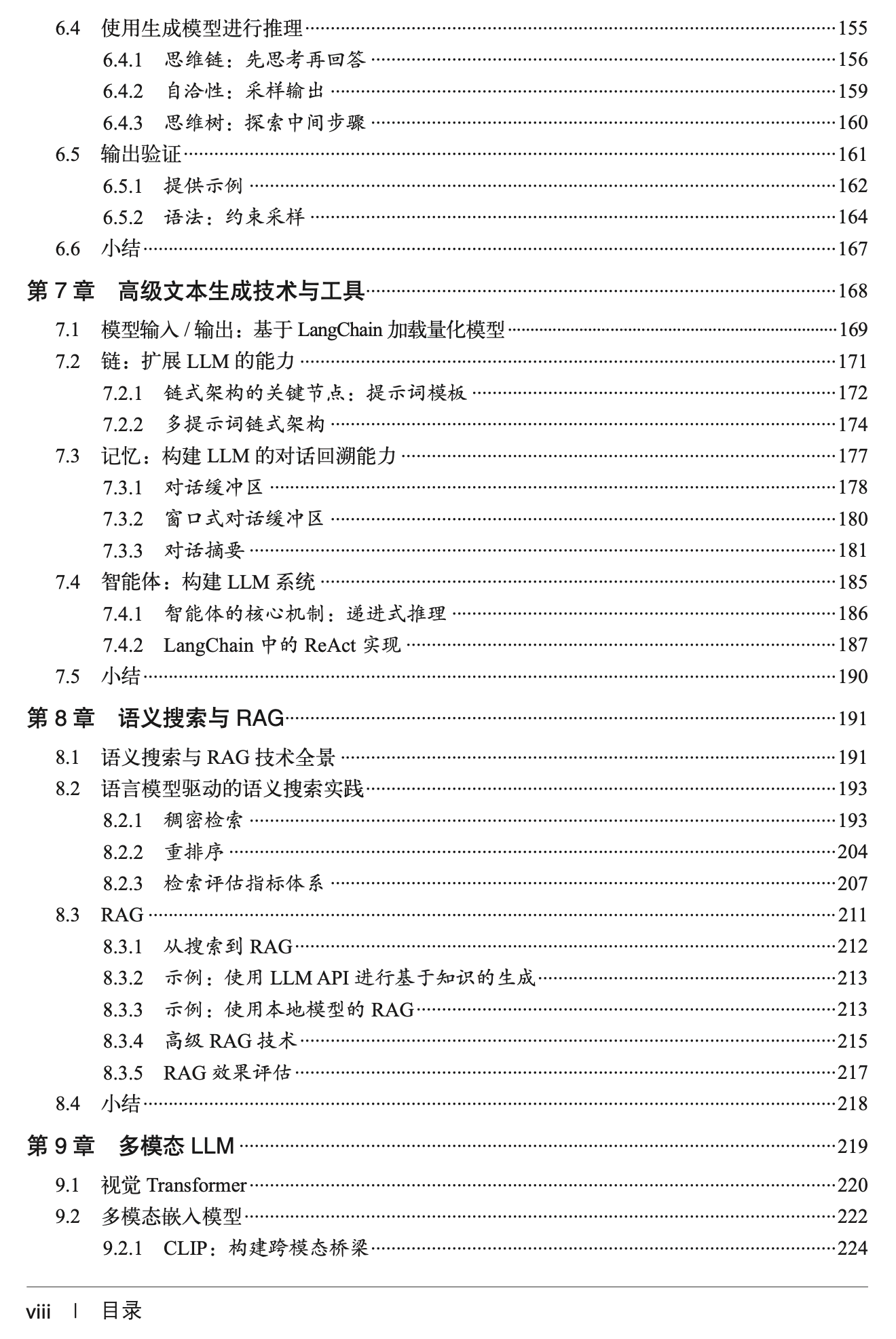

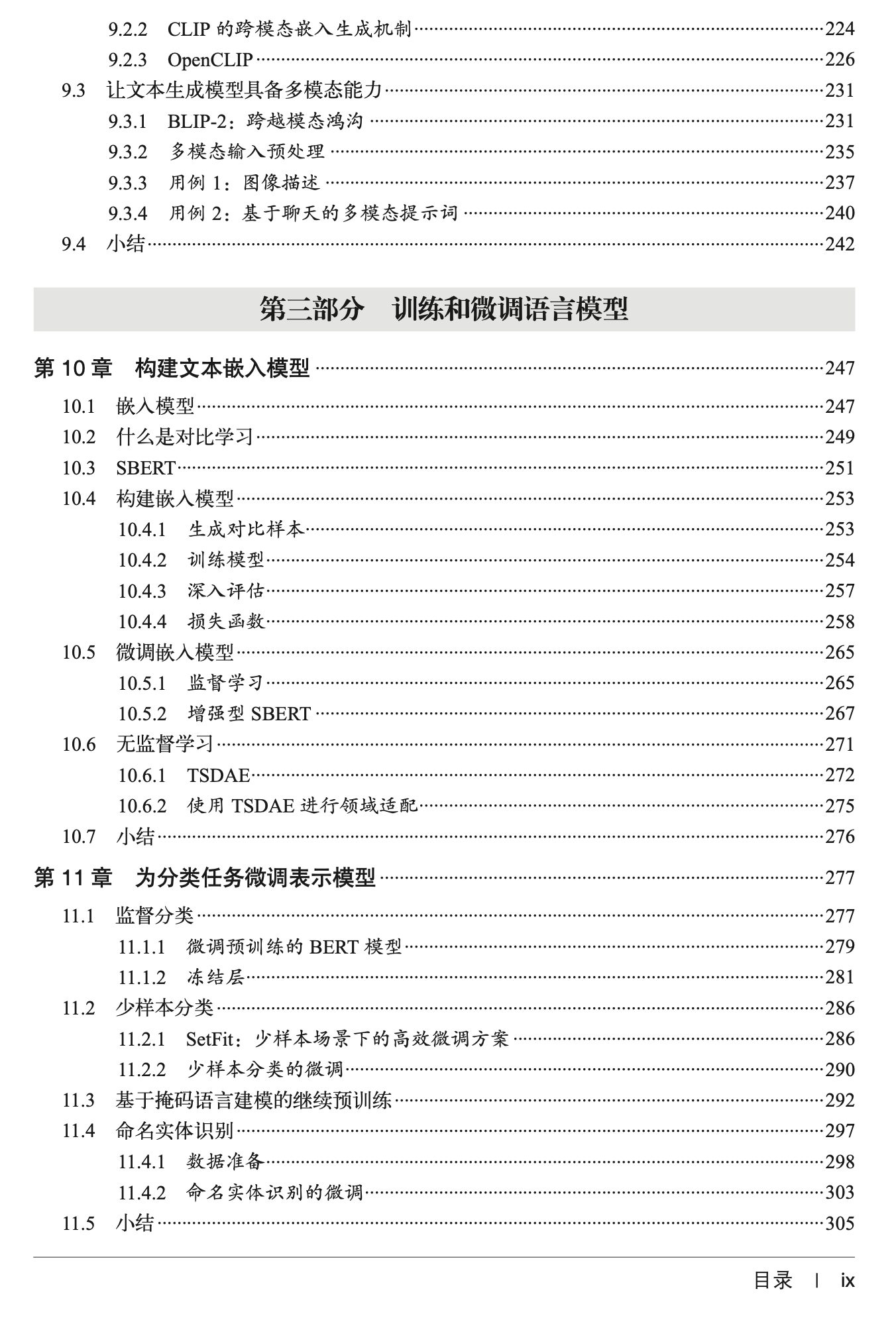

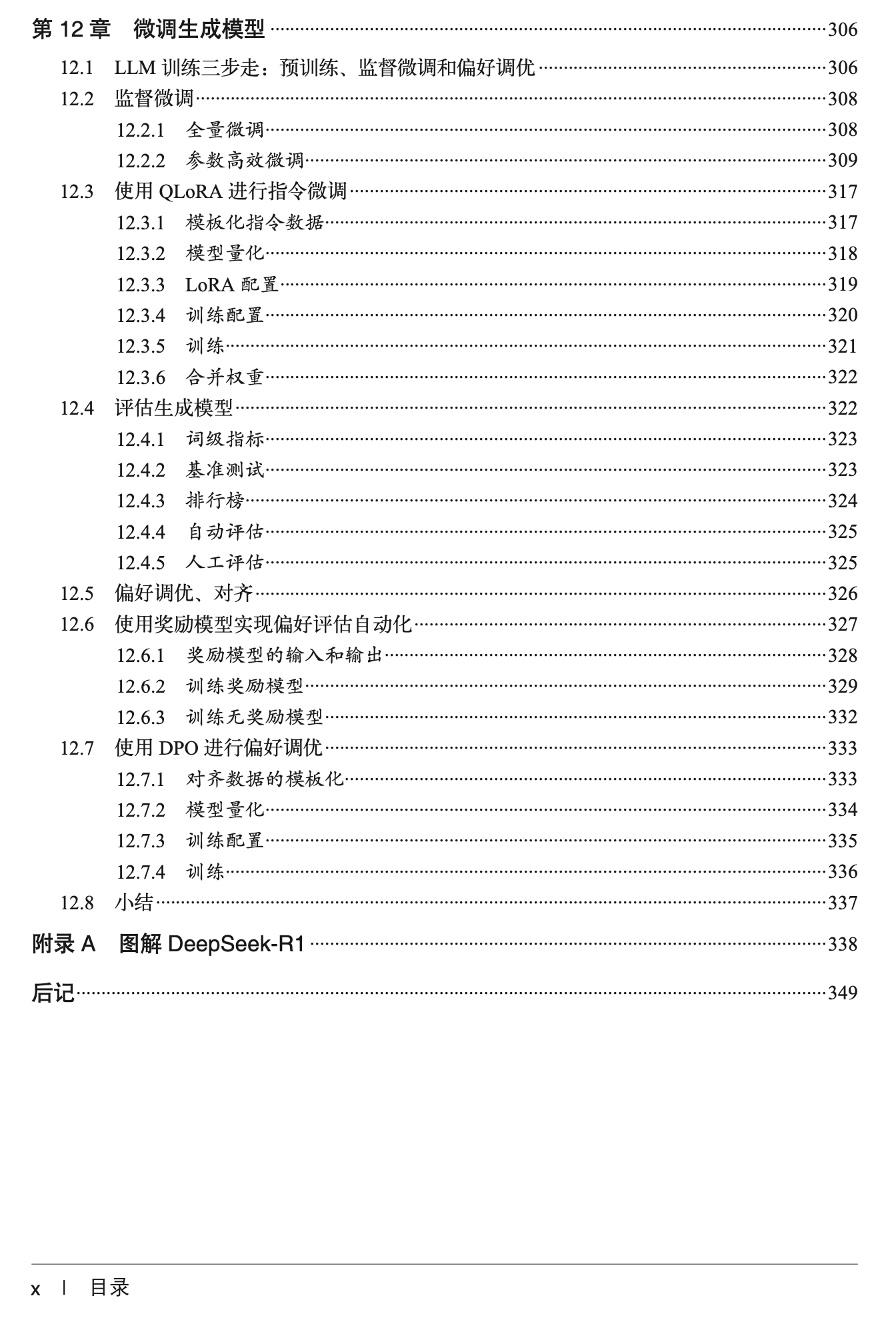

Table of Contents

About the Authors and About the Cover

Preface to the Chinese Edition