First Experience with ChatGPT

Recently, everyone has been playing with ChatGPT, and it really is impressive. It is not omnipotent, but it is the first AI dialogue system that doesn’t feel like an "artificial idiot" to me. It handles hard problems such as coreference and remembering conversational context very well. For programming questions in particular, it is sometimes more useful than StackOverflow. If a job candidate performed like this, I would definitely put them at the top of my hiring list.

The main shortcomings of ChatGPT currently are:

- Its knowledge is not up to date and its coverage is incomplete, so it cannot answer questions about recent events or more obscure topics. One suggestion is to combine it with a search engine or knowledge graph: first use the prompt to retrieve some search results, then use NLP methods to integrate those results into the answer. Some research teams are reportedly already working in this direction.

- It lacks logical reasoning ability. It easily gets even slightly complex logic wrong, and it states wrong answers with a perfectly straight face. Solving arbitrarily complex logical problems is a big challenge, and recognizing answers that look correct but are actually absurd is even harder.

- It currently supports only text and is not multimodal. For now, you can have ChatGPT generate prompts and then feed them into DALL-E to generate images. In the future, generative models with multimodal input and output will make human-computer interaction more natural and may become the next paradigm of human-computer interaction.

- A single answer is currently expensive, costing several cents, which is significantly more than a Google search. If the cost can be reduced through algorithmic or hardware improvements, or if new business models can be built by combining it with recommendations and advertising, there will be room for commercial profit.

This year can be called the “first year” of AI-generated content. A few months ago, we were all stunned by diffusion-based image generators such as DALL-E 2 and Stable Diffusion in the CV field, and now ChatGPT has set a new SOTA for NLP. DALL-E 2 and ChatGPT both come from OpenAI (Stable Diffusion comes from Stability AI and its collaborators), and the financial backer behind OpenAI is Microsoft, so this can be seen as an important round that Microsoft has won back in AI. In previous years, it was always Google DeepMind’s Alpha series that stole the limelight, from Go to proteins and matrix multiplication.

An intelligent assistant that can communicate naturally with people appears in countless science fiction movies, and it was also a vision that major companies set for themselves 20 years ago. Today, we can finally see that vision starting to become reality. Intelligent assistants may give birth to the next trillion-dollar industry. Just as mobile internet overturned PC internet and video overturned text, they may become a new paradigm of human-computer interaction and profoundly change how people work and live.

Below are some examples I tried in ChatGPT:

gRPC Programming

- Write an address book demo in Protocol Buffers, add fields as the requirements change, rewrite it in FlatBuffers, and then write a gRPC service for querying and deleting contacts, including use of the streaming API.

Three Points Collinear Algorithm Problem

- Determine whether any three of N points in three-dimensional space are collinear, analyze the algorithm’s complexity, find the maximum number of collinear points, and count how many triples of points are collinear. ChatGPT can give both a brute-force solution and an optimized one.
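
As a rough illustration of the two approaches (a sketch written for this post, not ChatGPT's actual output), the brute-force check tests every triple with a cross product in O(N^3), while the optimized version groups the other points by normalized direction from each point in roughly O(N^2) expected time. The function names are made up here, and the code assumes distinct points with integer coordinates.

```python
from collections import Counter
from itertools import combinations
from math import gcd


def sub(a, b):
    """Vector from b to a."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(u, v):
    """Cross product of two 3D vectors."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def has_collinear_triple_brute(points):
    """O(N^3): three points are collinear iff the cross product is zero."""
    return any(cross(sub(b, a), sub(c, a)) == (0, 0, 0)
               for a, b, c in combinations(points, 3))


def normalize(d):
    """Reduce a nonzero integer direction to a canonical form."""
    g = gcd(gcd(abs(d[0]), abs(d[1])), abs(d[2]))
    d = (d[0] // g, d[1] // g, d[2] // g)
    first_nonzero = next(x for x in d if x != 0)
    # Opposite directions lie on the same line, so fix the sign.
    return d if first_nonzero > 0 else (-d[0], -d[1], -d[2])


def collinear_stats(points):
    """O(N^2) expected: returns (max points on one line, collinear triples)."""
    n = len(points)
    if n < 3:
        return n, 0
    best, triple_sum = 2, 0
    for i, p in enumerate(points):
        counts = Counter(normalize(sub(q, p))
                         for j, q in enumerate(points) if j != i)
        best = max(best, 1 + max(counts.values()))
        # k other points on a line through p give C(k, 2) triples with p.
        triple_sum += sum(k * (k - 1) // 2 for k in counts.values())
    # Every collinear triple was counted once from each of its 3 points.
    return best, triple_sum // 3


pts = [(0, 0, 0), (1, 1, 1), (2, 2, 2), (1, 0, 0), (3, 3, 3)]
print(has_collinear_triple_brute(pts))  # True
print(collinear_stats(pts))             # (4, 4)
```

With floating-point coordinates the exact-equality test and hashing by direction would need a tolerance, which this sketch does not attempt.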

Binary Representation Algorithm Problem

- Count the total number of 1s in the binary representations of 0, 1, …, N, implemented in Go, JavaScript, Rust, Haskell, Swift, and PHP. The only regret is that it knows only the brute-force approach and cannot come up with an optimized algorithm.
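
For reference, one common optimization (a sketch in Python for brevity, not something ChatGPT produced) counts each bit position separately: among the N + 1 numbers from 0 to N, bit k repeats in cycles of length 2^(k+1), and the upper half of each cycle has that bit set. This takes O(log N) steps instead of O(N log N). The function names are illustrative.

```python
def count_ones_brute(n):
    """Count 1 bits in 0..n by inspecting every number."""
    return sum(bin(i).count("1") for i in range(n + 1))


def count_ones_fast(n):
    """Count 1 bits in 0..n in O(log n) by handling each bit position."""
    total = 0
    m = n + 1          # how many numbers are in the range 0..n
    bit = 1            # value of the current bit position, 2**k
    while bit <= n:
        cycle = bit * 2
        # Each full cycle contributes `bit` numbers with this bit set.
        total += (m // cycle) * bit
        # In the partial cycle, only the part beyond the first `bit`
        # numbers has this bit set.
        total += max(0, m % cycle - bit)
        bit <<= 1
    return total


print(count_ones_brute(5), count_ones_fast(5))  # 7 7
```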

Stack Overflow Vulnerability Analysis

- Analyze a stack overflow vulnerability, write an exploitation PoC, generate an example ROP chain, and describe defenses against ROP.

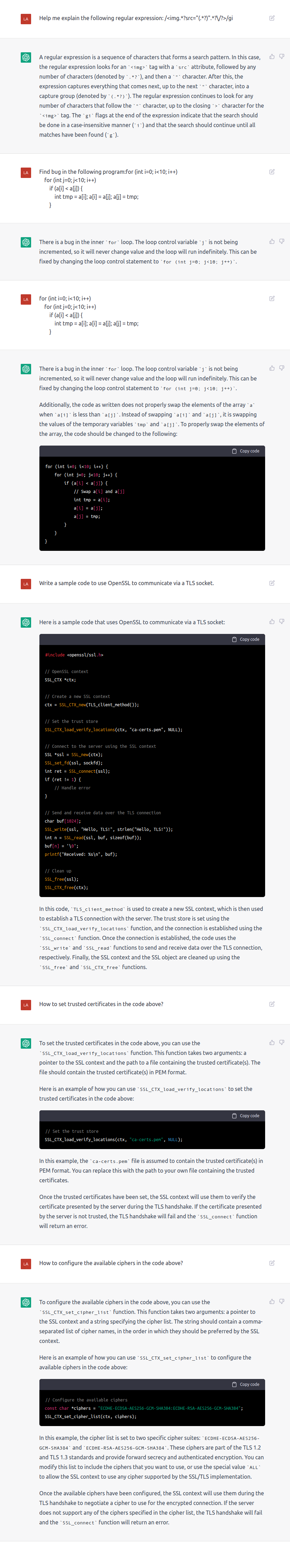

Find Code Bugs

- Explain regular expressions; find bugs in sorting code; build a TLS socket example with OpenSSL, including configuring certificates and cipher suites.

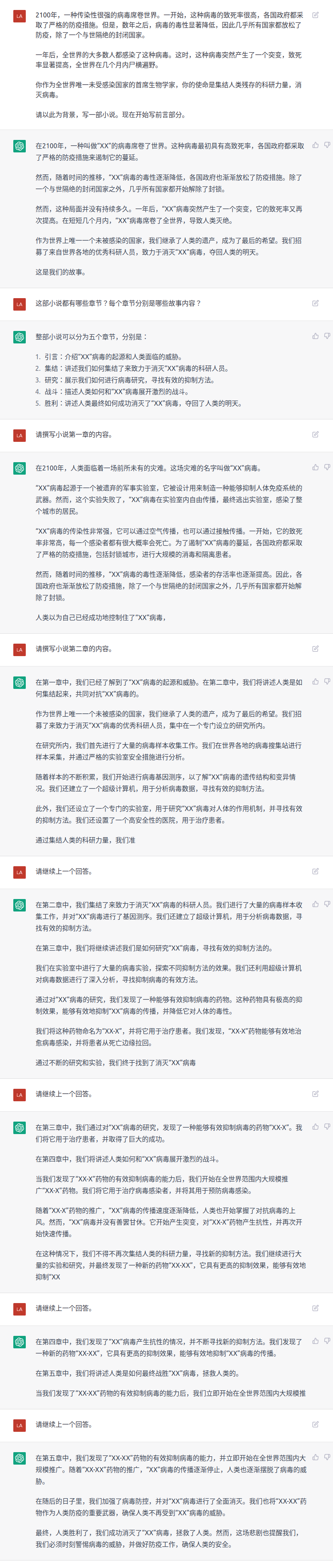

Writing Novels (1)

- Given the background of a novel, I asked it to write the preface, the chapter structure, and a summary of each chapter. The first story background is a virus sweeping the world. ChatGPT can understand and restate (not plagiarize verbatim) the background I gave it and generate a story that looks quite presentable. Some details of the story appear to have been filled in from its own domain knowledge.

Every time I rerun ChatGPT, it outputs a different story with a similar plot, so it seems to imitate existing stories in its corpus while showing some creativity in the details. At present, however, ChatGPT cannot flesh out a story with more detail, which means it can’t really write novels yet.

Writing Novels (2)

- The second novel-writing example is about an AI that controls the world and how the last Python programmer regains control of the Earth.

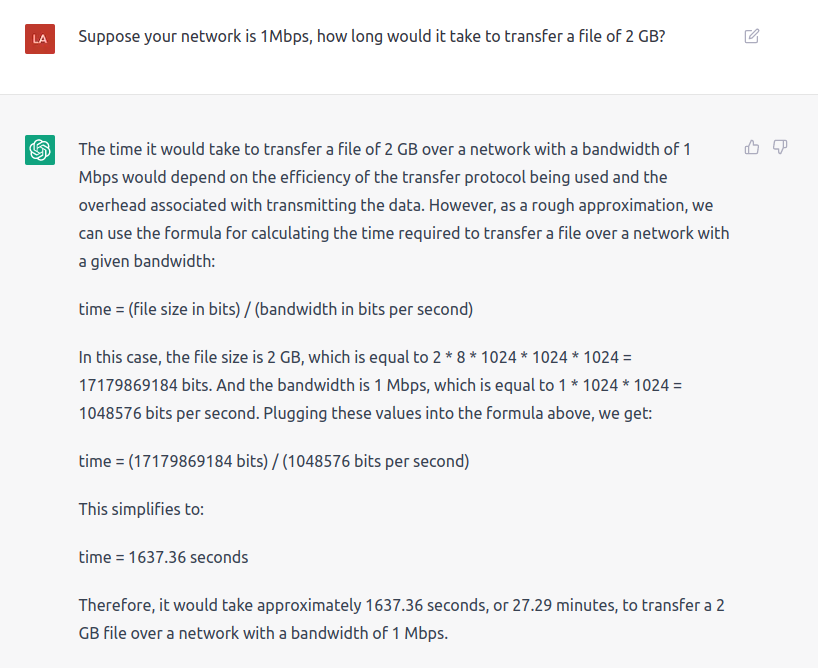

Miscalculated Calculation Problem

- How long does it take to transmit a 2 GB file over a 1 Mbps network? Many candidates take a long time to answer this. Sure enough, ChatGPT got it wrong too, underestimating the time by an order of magnitude.
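
For reference, the arithmetic can be checked in a few lines. This sketch assumes 1 Mbps means 10^6 bits per second, ignores protocol overhead, and shows both the decimal (10^9 bytes) and binary (2^30 bytes) readings of "GB". The usual trap is forgetting that file sizes are in bytes while link speeds are in bits.

```python
def transfer_seconds(size_bytes, link_bits_per_second):
    """Ideal transfer time: convert bytes to bits, divide by link speed."""
    return size_bytes * 8 / link_bits_per_second


LINK_BPS = 10**6  # 1 Mbps, assuming decimal megabits

decimal_seconds = transfer_seconds(2 * 10**9, LINK_BPS)  # 2 GB = 2e9 bytes
binary_seconds = transfer_seconds(2 * 2**30, LINK_BPS)   # 2 GiB

print(decimal_seconds, round(decimal_seconds / 3600, 2))  # 16000.0 4.44
print(round(binary_seconds / 3600, 2))                    # 4.77
```

Either way the answer is about four and a half hours.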

Miscalculated Olympiad Math Problems

- You may have seen that ChatGPT can do some simple math problems, such as the chicken-and-rabbit problem. The ones I tried here are all typical elementary school Olympiad math problems. ChatGPT’s basic approach is correct, but its results are all wrong, so its logical reasoning ability still has a long way to go.
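
For readers unfamiliar with the chicken-and-rabbit problem: given a total number of heads and legs, find how many chickens (2 legs) and rabbits (4 legs) there are. The sketch below uses the classic textbook numbers (35 heads, 94 legs) purely as an illustration; they are not taken from my ChatGPT session, and the function name is made up.

```python
def chickens_and_rabbits(heads, legs):
    """Solve c + r = heads, 2c + 4r = legs; return (c, r) or None."""
    extra_legs = legs - 2 * heads   # each rabbit adds 2 legs beyond a chicken
    if extra_legs < 0 or extra_legs % 2 != 0:
        return None                 # no whole-number solution
    rabbits = extra_legs // 2
    chickens = heads - rabbits
    if chickens < 0:
        return None
    return chickens, rabbits


print(chickens_and_rabbits(35, 94))  # (23, 12)
```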