Open Source Technology Event LinuxCon Debuts in China, Gathering Industry Giants to Focus on Industry Trends

(Reposted from Microsoft Research Asia)

On June 19-20, 2017, the open source technology event LinuxCon + ContainerCon + CloudOpen (LC3) was held in China for the first time. The packed two-day agenda included 17 keynote speeches, 88 technical talks across 8 parallel sessions, and technical exhibitions and hands-on labs from companies such as Microsoft. The event drew international and domestic internet and telecom giants along with thousands of industry professionals, including Linux founder Linus Torvalds, to discuss industry trends.

SDN/NFV: Two Pillars Building the Future Network

Discussion of future networks at LC3 centered on two keywords: SDN (Software Defined Networking) and NFV (Network Function Virtualization). Traditional networks are a “black box”: management is inflexible, the supported network scale is limited, and network state is hard to observe and debug. With the rise of cloud computing, SDN and NFV aim to open up this black box. SDN’s southbound interface unifies the diverse APIs of network devices, while its northbound interface provides a global view of the network, which makes centralized operations easier. NFV implements network functions such as firewalls, load balancers, and virtual network tunnels in software, making them more flexible.

At the conference, cloud computing and 5G giants pointed out a new reason to use SDN and NFV: centralized scheduling of heterogeneous network demands. Whether in cloud services or in 5G telecom networks for the Internet of Things, customers and applications have diverse demands: some need high bandwidth, some need low latency, and some need high stability. The infrastructure of cloud platforms and 5G networks must therefore support multiple virtual networks on a single physical network and provide different Quality of Service (QoS) guarantees for each. Only software-defined network management, network functions, and network scheduling can respond flexibly to these heterogeneous demands.
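
As a rough illustration of carving several virtual networks with different guarantees out of one physical link, the sketch below admits virtual networks only while their guaranteed bandwidth still fits. The class, the function, and the numbers are hypothetical and do not come from any talk at the conference; real schedulers also account for latency and stability.

```python
from dataclasses import dataclass


@dataclass
class VirtualNetwork:
    name: str
    min_bandwidth_gbps: float   # bandwidth this virtual network must always get


def admit(link_capacity_gbps, requests):
    """Admit requests in order while their guaranteed bandwidth fits on the link."""
    admitted, reserved = [], 0.0
    for vnet in requests:
        if reserved + vnet.min_bandwidth_gbps <= link_capacity_gbps:
            admitted.append(vnet.name)
            reserved += vnet.min_bandwidth_gbps
    return admitted, reserved


requests = [
    VirtualNetwork("video", 6.0),
    VirtualNetwork("iot-control", 0.5),
    VirtualNetwork("backup", 5.0),      # does not fit on the remaining capacity
]
admitted, reserved = admit(10.0, requests)
print(admitted, reserved)
```

A centralized controller is what makes this kind of check possible: it sees every reservation on the link, which individual devices do not.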

Open Network Ecosystem

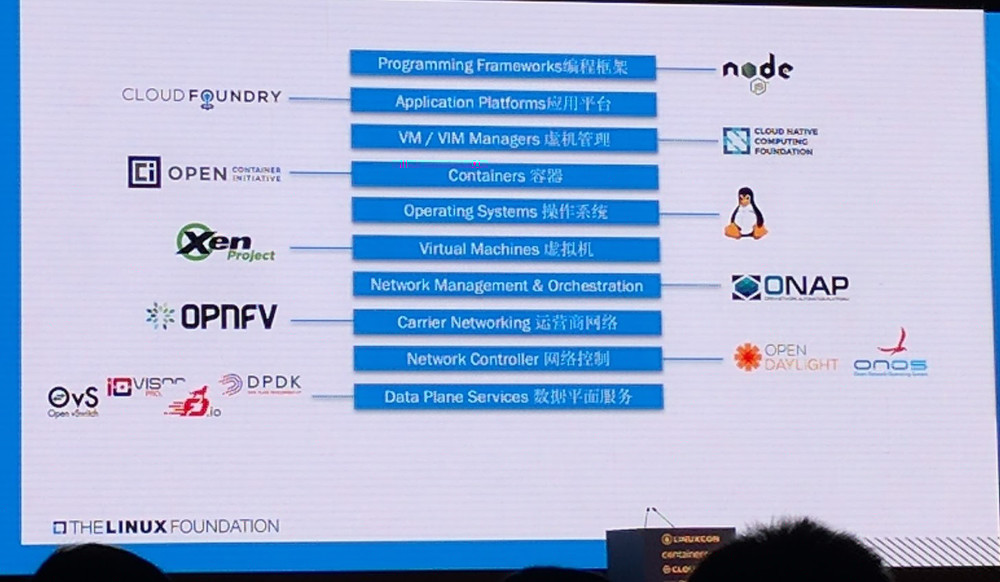

As SDN and NFV are widely adopted in the industry, ecosystems and sharing are becoming increasingly important, and open source projects for network management and network functions, such as ONAP, ODL (OpenDaylight), and OPNFV, are attracting attention from many companies.

Network speeds are increasing far faster than the processing capability of the Linux network protocol stack, and the gap is becoming more and more obvious; the stack’s latency is also unstable. Several talks mentioned using technologies such as Intel DPDK and eBPF to improve the throughput and latency stability of the data plane. The Open vSwitch development team specifically mentioned using P4 as a unified SDN programming language across CPUs and programmable switches, to ease the burden of developing OVS features in C. The DPDK development team presented a cryptodev framework based on fd.io that uses hardware accelerators such as Intel QuickAssist to speed up packet encryption and decryption, and used asynchronous and vector packet processing to build a single-machine IPSec gateway running at 40 Gbps line rate. Azure uses SR-IOV and FPGA acceleration to give a single virtual machine 25 Gbps of network throughput and latency 10 times lower than that of software virtual switches.

The performance of SDN controllers in large-scale virtual networks is a common concern in the industry. For example, an Alibaba Cloud data center has more than 100,000 servers, each reporting a heartbeat once per second. If the data center loses power and then recovers, how long does it take to initialize the configuration of 100,000 switches? Virtual machine migrations also happen constantly, and all of them are handled by the SDN controller, which leads to high load. Alibaba Cloud’s solution is to cache configuration: each server self-learns network configuration using an ARP-like method, which reduces the load on the SDN controller. The controller itself is accelerated with UDP and a custom high-performance TCP protocol stack.
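
The caching idea can be sketched as follows. This is a minimal illustration under assumed names (`ConfigCache`, `fake_controller`, the TTL value), not Alibaba Cloud’s implementation: a server answers lookups from locally learned entries and contacts the controller only on a miss or after an entry expires.

```python
import time


class ConfigCache:
    """Per-server cache of learned network configuration with expiry."""

    def __init__(self, fetch_from_controller, ttl_seconds=60.0, clock=time.monotonic):
        self._fetch = fetch_from_controller   # hypothetical controller lookup
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}                    # key -> (value, expiry time)

    def learn(self, key, value):
        # Record a mapping observed from traffic, much as ARP learns sender addresses.
        self._entries[key] = (value, self._clock() + self._ttl)

    def lookup(self, key):
        entry = self._entries.get(key)
        if entry is not None and entry[1] > self._clock():
            return entry[0]                   # cache hit: controller is not contacted
        value = self._fetch(key)              # miss or expired: ask the controller once
        self.learn(key, value)
        return value


controller_calls = []

def fake_controller(vm_ip):
    # Stand-in for the real SDN controller; records every request it receives.
    controller_calls.append(vm_ip)
    return "host-" + vm_ip.split(".")[-1]

cache = ConfigCache(fake_controller)
cache.lookup("10.0.0.7")            # miss: goes to the controller
cache.lookup("10.0.0.7")            # hit: answered locally
cache.learn("10.0.0.9", "host-9")   # learned from traffic
cache.lookup("10.0.0.9")            # hit: the controller is never asked
print(controller_calls)
```

With 100,000 servers, every lookup answered locally is one less request the controller has to serve, which matters most during mass events such as a power recovery.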

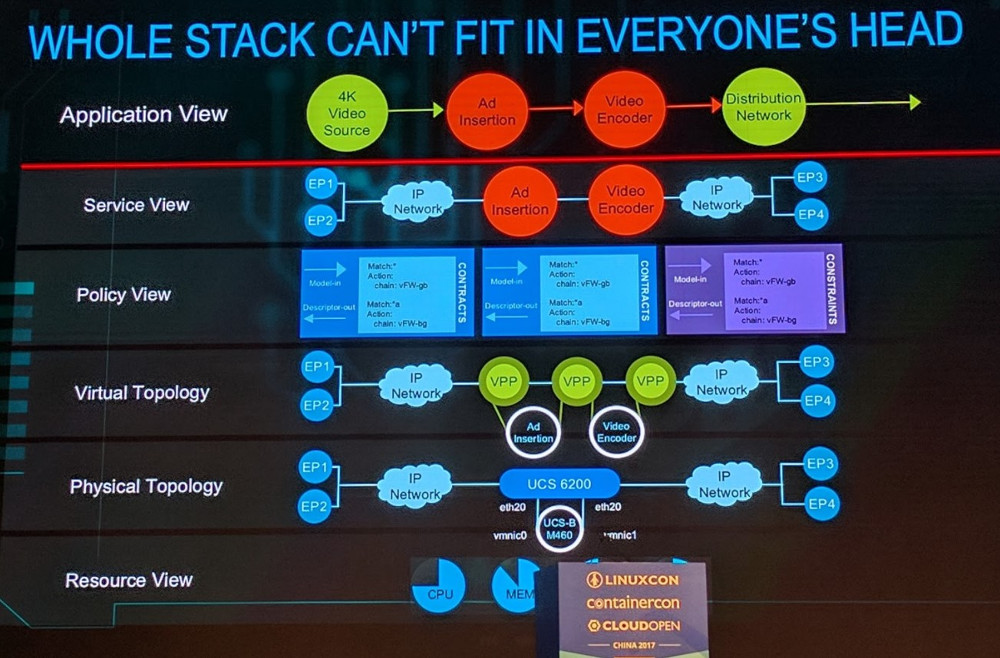

Virtual networks have many layers; abstraction and encapsulation are needed to understand them

Traditionally, SDN controllers are responsible only for configuration and provisioning. Cisco proposed adding real-time data analysis (such as hardware-supported packet path tracing, statistics, and sampling), end-to-end resource management, and identity-based policy support, so that developers can specify application requirements directly without worrying about the structure of the virtual and physical networks. Alibaba Cloud also emphasized the ability to debug and operate virtual networks: it relies on the SDN controller to turn packet capture into a software-driven API, in which the upper layer supplies user and virtual machine information and the lower layer automatically colors and mirrors the relevant packets.

Software definition is practiced not only in networking but also in storage. Huawei’s OpenSDS replaces the traditional administrator-oriented command-line configuration API with a unified, simple, and meaningful YAML configuration file, turns manual configuration that depends on storage expertise into policy-based automated orchestration, and lets applications take advantage of differentiated advanced storage features on the cloud platform.

Microservices: The Most Flexible Choice for Development and Management

Traditional script-based software installation and deployment can easily lead to complex dependency problems and inconsistent runtime environments. Modern services should be split into a combination of multiple microservices. Container technology, represented by Docker, is currently the most widely used carrier for microservices.

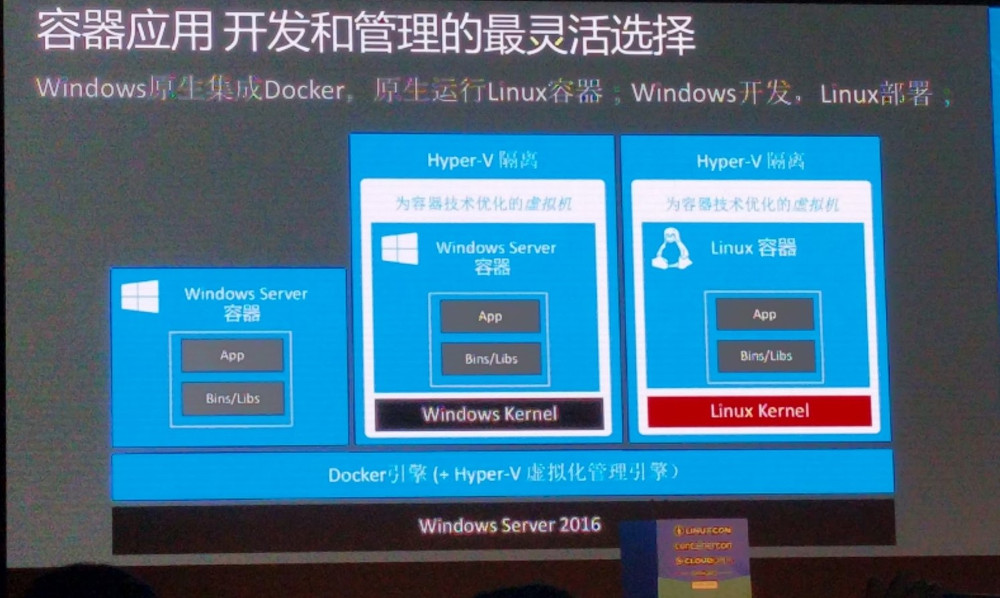

Three types of container deployment supported by Windows

A container is a tool for packaging, deploying, and distributing software. Containers can run in Linux kernel namespaces, on customized operating systems such as CoreOS and Atomic, or in paravirtualized or fully virtualized virtual machines. Windows also supports three types of container deployment: native Linux containers based on the Linux subsystem, Linux containers based on Hyper-V virtual machines, and Windows containers based on Hyper-V virtual machines. Because hardware-assisted virtualization technologies such as EPT and SR-IOV have matured, the overhead of VM-based containers is acceptable, and they provide better isolation.

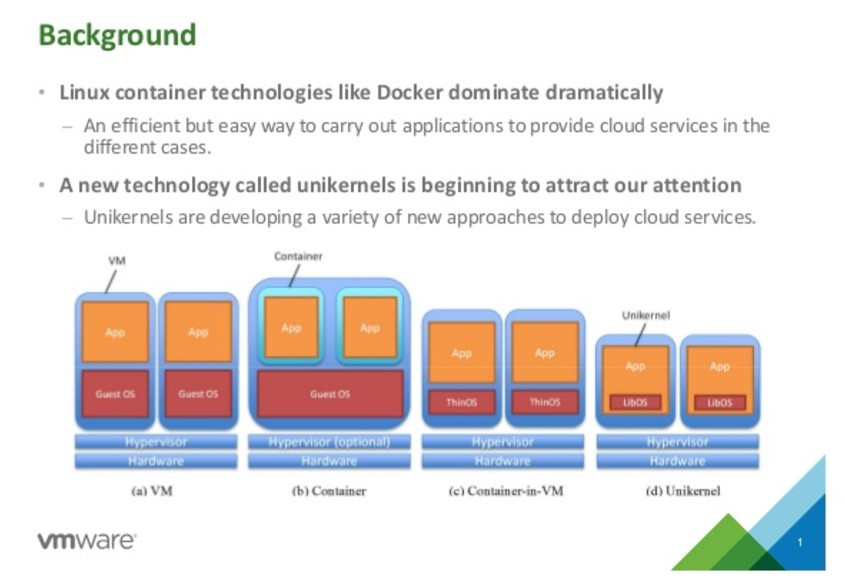

Comparison of virtual machines, containers, VM containers, Unikernel

Besides containers, a unikernel is another efficient way to deploy microservices. Since the hypervisor already provides resource management, scheduling, and isolation, why does a virtual machine that runs only one task need a kernel of its own? Instead, the application in the virtual machine can access the hypervisor directly through a set of libraries. MirageOS, jointly developed by the University of Cambridge and the Docker community, is a library OS written in OCaml. It offers a clean-slate, type-safe API and supports the Xen and KVM hypervisors on x86 and ARM architectures. A unikernel is more efficient for simpler microservices; for microservices with more complex dependencies, LinuxKit can be used to slim down existing containers.

Container technology stack

The main performance challenge of microservices is that they are much finer-grained than traditional virtual machines: containers are numerous and short-lived. Red Hat cited New Relic statistics showing that 46% of containers live for less than an hour and 25% for less than 5 minutes. Google containerizes most of its internal services and manages them with Kubernetes, creating 2 billion new containers every week, an average of more than 3,000 per second. Each container needs an IP address, which poses scalability challenges for the container orchestration system (orchestrator) and the virtual network management system. In addition, on the same number of physical servers there are an order of magnitude more containers than virtual machines, which makes monitoring and log analysis harder.
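
The per-second figure follows directly from the weekly one. A quick check, using only the 2 billion per week number quoted above:

```python
SECONDS_PER_WEEK = 7 * 24 * 60 * 60        # 604,800 seconds

containers_per_week = 2_000_000_000        # figure quoted in the talk
containers_per_second = containers_per_week / SECONDS_PER_WEEK

print(f"{containers_per_second:.0f} new containers per second on average")
```

The average comes to roughly 3,300 per second, which is consistent with "more than 3,000"; peak rates would be higher than the average.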

Once traditional services are split into microservices and each microservice is deployed as a container, every physical machine runs a large number of containers that communicate frequently, so container networking and context switching between containers become performance bottlenecks. Red Hat proposed pre-creating containers and maintaining a container pool to amortize the overhead of container creation and destruction. On the network side, the overlay network that interconnects containers needs hardware acceleration for tunnel protocols such as MACVLAN and IPVLAN and for encryption and decryption with IPSec, MACSec, and similar protocols. In virtual machine networks, SR-IOV is usually used to expose a hardware-accelerated VF (virtual function) of the physical network card to each virtual machine. However, containers are usually far more numerous than virtual machines, and the network card cannot provide enough VFs for them. The Linux bridge that Docker uses by default cannot meet the throughput and latency stability requirements, so the industry often uses high-performance data planes such as DPDK to handle communication between containers.
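
The container pool idea can be sketched in a few lines. This is an illustrative sketch only, not Red Hat’s implementation; `ContainerPool` and `fake_create` are hypothetical names, and a real pool would also need to reset a container’s state before handing it out again.

```python
from collections import deque
from itertools import count


class ContainerPool:
    """Keeps a stock of pre-created containers so requests skip creation latency."""

    def __init__(self, create, size):
        self._create = create      # hypothetical factory returning a new container handle
        self._size = size
        self._idle = deque(create() for _ in range(size))

    def acquire(self):
        # Hand out a warm container if one is available; otherwise create on demand.
        if self._idle:
            return self._idle.popleft()
        return self._create()

    def release(self, container):
        # Keep the container for reuse, but never hold more than `size` idle ones.
        if len(self._idle) < self._size:
            self._idle.append(container)

    def idle_count(self):
        return len(self._idle)


_ids = count(1)

def fake_create():
    # Stand-in for a real (slow) container start.
    return f"container-{next(_ids)}"

pool = ContainerPool(fake_create, size=3)
first = pool.acquire()     # served from the pool, no creation on the request path
pool.release(first)        # returned to the pool instead of being destroyed
print(first, pool.idle_count())
```

The creation cost is still paid, but up front and once per pooled container rather than on every request, which is what amortizing the overhead means here.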

Various network access models

Applications in containers can access the network through interfaces such as DPDK, sockets, or a custom protocol stack; container traffic can be interconnected and aggregated at the overlay layer by OVS, a Linux bridge, SR-IOV passthrough, and so on; and physical network cards come with a variety of drivers. Each network access model makes a different trade-off among performance, flexibility, and compatibility. Huawei’s cloud network laboratory proposed the iCAN container network framework, which simplifies programming of container network data paths, control policies, and quality-of-service requirements, and supports multiple container network orchestrators such as flannel and OpenStack.

Container technology aims at reliable deployment of microservices, but the compilation process from microservice source code to binary must be reliable too; this is the goal of reproducible builds. Reproducible builds use techniques such as input checksums, ordered compilation steps, pinned versions of the build environment, elimination of uninitialized memory, normalized timestamps, and cleaned file attributes to ensure that the generated software package is byte-for-byte deterministic. Distributions such as Debian have already adopted reproducible builds.
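
A few of these techniques can be shown on a small scale with Python’s standard `tarfile` module. The sketch below is an illustration rather than how Debian builds packages: it sorts the inputs, fixes timestamps and file attributes, and compares checksums of two archives built from the same content. The helper names and file contents are made up for the example.

```python
import hashlib
import io
import tarfile


def build_archive(files):
    """Pack {name: bytes} into a tar archive whose bytes depend only on the inputs."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w", format=tarfile.USTAR_FORMAT) as tar:
        for name in sorted(files):           # fixed ordering of inputs
            data = files[name]
            info = tarfile.TarInfo(name)
            info.size = len(data)
            info.mtime = 0                   # normalized timestamp
            info.mode = 0o644                # normalized permissions
            info.uid = info.gid = 0          # normalized ownership
            info.uname = info.gname = ""
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def digest(blob):
    return hashlib.sha256(blob).hexdigest()


sources = {"b.txt": b"world\n", "a.txt": b"hello\n"}
reordered = {"a.txt": b"hello\n", "b.txt": b"world\n"}

# Same content supplied in a different order still yields identical bytes.
same = digest(build_archive(sources)) == digest(build_archive(reordered))
print(same)
```

If the real modification time or the current user’s ID were written into each entry instead, two builds of identical sources could differ, and the checksum comparison would no longer prove anything about the content.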

GPU Virtualization: Sharing, Security, Efficiency

Linus Torvalds (left) and Dirk Hohndel (right) in conversation

The highlight of LC3 was the conversation between Linus Torvalds, the creator of Linux and Git and a spiritual leader of open source software, and Dirk Hohndel, VP at VMware. One of Dirk’s questions was what project Linus would take on if he were starting out today. Linus answered that by the time he created Linux, programming computer hardware had already become much easier than it was in his childhood. He joked that he is no hardware expert because he always breaks hardware. Today, if he were interested in chip design, he might work with FPGAs, since their development cost is now much lower, or with a Raspberry Pi, which can do some cool little things.

In the wave of combining cloud and machine learning, GPU virtualization and the scheduling of GPU containers bring new technical challenges. A GPU can be bound to a virtual machine through PCIe pass-through, and cloud services such as Azure use this technique to give virtual machines efficient GPU access. When multiple virtual machines need to share a GPU, vSphere and Xen support splitting a physical GPU’s cores and allocating them to multiple virtual machines, but performance is lower when access is forwarded through a virtual device in the hypervisor. Intel’s GVT and NVIDIA’s GPUvm technology can virtualize a GPU into multiple vGPUs, which can be allocated to different virtual machines to achieve spatial multiplexing of the physical GPU. To achieve time-division multiplexing, Intel proposed GPU namespaces and control groups: the graphics driver in the kernel that hosts the containers schedules each container’s computing tasks onto the GPU according to priority and time slice.
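
Scheduling by priority and time slice can be illustrated with a toy model. The sketch below is not Intel’s design: it assumes strict priorities (a lower number is more urgent), round-robin among containers of equal priority, and abstract work units; the function and task names are hypothetical.

```python
import heapq
from itertools import count


def schedule(tasks, time_slice):
    """Return the order in which (container, run_time) slices are dispatched.

    tasks: list of (container_name, priority, work); lower priority number runs first.
    """
    order = count()   # tie-breaker that gives round-robin within one priority
    queue = [(prio, next(order), name, work) for name, prio, work in tasks]
    heapq.heapify(queue)
    timeline = []
    while queue:
        prio, _, name, remaining = heapq.heappop(queue)
        run = min(time_slice, remaining)
        timeline.append((name, run))
        if remaining > run:
            # Unfinished work rejoins the queue behind peers of the same priority.
            heapq.heappush(queue, (prio, next(order), name, remaining - run))
    return timeline


timeline = schedule(
    [("train", 1, 5), ("infer", 0, 3), ("batch", 1, 2)],
    time_slice=2,
)
print(timeline)
```

In this model the high-priority `infer` container finishes first, after which `train` and `batch` alternate in slices of at most two units. A production scheduler would also need preemption and fairness limits so that low-priority containers are not starved.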

Intel GVT-g GPU virtualization technology

GPU virtualization lets one GPU be shared among multiple small computing problems (one-to-many). Some large-scale computing problems instead require multiple GPUs, which calls for scheduling interconnected GPU virtual machines or containers (many-to-one). GPUs can communicate through NVLink, PCIe, or GPUDirect RDMA, which is much more efficient than going through the CPU’s network protocol stack. This raises new challenges: in a virtual network environment, who performs tunnel encapsulation and access control for GPUDirect RDMA packets, and how should the requirements of GPU communication be reflected in the scheduling algorithms for virtual machines and containers?

Microsoft: Dancing with Open Source

Open source software supported by Azure

Cloud computing platforms are also breaking down company boundaries and embracing open source and open technologies. Microsoft mentioned in its keynote that more than 60% of the virtual machines in the China region of the Azure public cloud run Linux, as do 30% of virtual machines worldwide, and the proportion is increasing year by year. Azure supports open source across the stack, from operating systems and container orchestration services to databases, programming languages, operations frameworks, and applications. SQL Server, PowerShell, and other products that previously ran only on Windows now also support Linux. At the same time, Windows is becoming friendlier to developers: the Linux subsystem lets Linux development tools run natively on Windows, Visual Studio integrates with Git, and Hyper-V supports Docker containers.