Overview of Virtualization Technology

Note: On November 21, 2014, I shared some thoughts on virtualization technology at the Alibaba Tech Club’s Virtualization Technology Exchange and the “USTC Cloud 3.0” launch event. This post is an organized and expanded version of that talk. (Long read, proceed with caution.)

Everyone is familiar with virtualization; most of us have used virtual machine software such as VMware or VirtualBox. Some people think that virtualization only became popular in recent years with the rise of cloud computing, and that ten years ago it was just a toy for desktop users who wanted to try other operating systems. Not really. As long as multiple tasks run on a computer at the same time, there is a demand for task isolation, and virtualization is the technology that makes each task appear to own the entire computer while isolating tasks from one another. Virtualization began to develop as early as the 1960s, when computers were still huge.

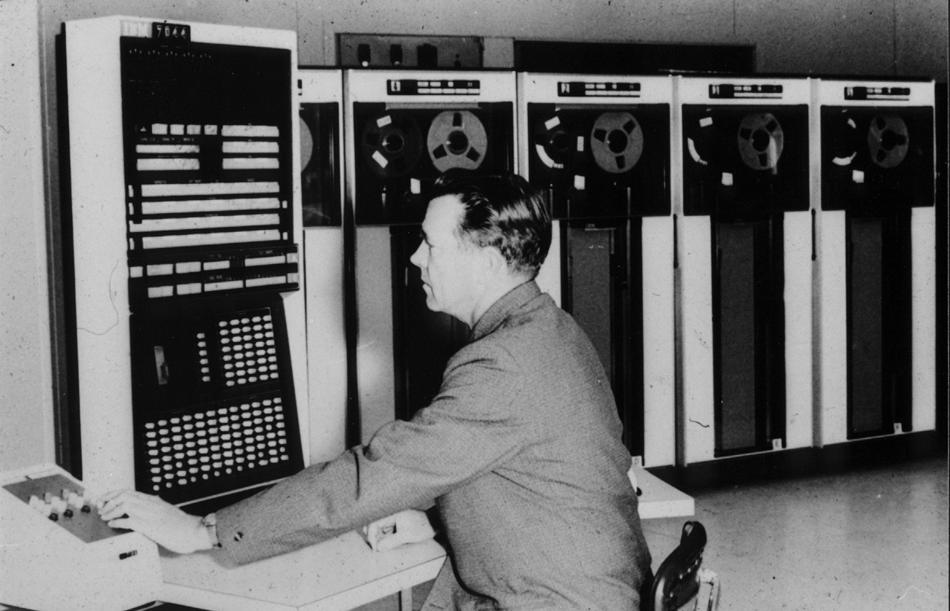

IBM 7044


Dark History: Hardware Virtualization

The IBM M44/44X of 1964 is considered the world’s first system to support virtualization. It used dedicated hardware and software to virtualize multiple IBM 7044 mainframes, a popular model at the time, on a single physical machine. Its virtualization method was very primitive: as in a time-sharing system, during each time slice one virtual IBM 7044 had all the hardware resources to itself.

It is worth mentioning that this prototype system for research not only opened the era of virtualization technology, but also proposed an important concept of “paging” (because each virtual machine needs to use virtual addresses, which requires a layer of virtual address to physical address mapping).

In an era when the concept of a “process” had not yet been invented, multitasking operating systems and virtualization were in fact inseparable, because a “virtual machine” was simply a task. There was no dominant architecture like Intel x86 at the time; each mainframe family went its own way, and compatibility with other architectures was not a concern. This “task-level” or “process-level” virtualization lives on conceptually to this day in operating system-level virtualization such as LXC and OpenVZ.

This technology that mainly relies on customized hardware to achieve virtualization is historically known as “hardware virtualization”. In this era where everything has to be “software-defined”, mainframes are declining, and most hardware-dependent virtualization has entered the history museum. The virtualization technology we see today is mostly software-based and hardware-assisted. The division shown in the figure below has no strict boundaries, and a virtualization solution may use multiple technologies in the figure below at the same time, so don’t mind these details~

From Simulated Execution to Binary Translation

In the mainframe era, each major manufacturer had its own architecture and instruction set. Why didn’t software appear that translated between instruction sets? After all, Cisco got its start by bridging different network devices and protocols. (As the story goes, the couple who founded Cisco wanted to send love letters over computer networks, but the networks were incompatible, so they built a router that spoke multiple protocols.)

Cisco’s first router


Translating between network protocols and translating between instruction sets are both mechanical, lengthy, and tedious tasks. It takes real talent and care to get them right and to cover all the corner cases. The extra trouble with instruction sets, compared to network protocols, is that instruction boundaries (where one instruction ends and the next begins) are not known in advance, that code may perform privileged operations (such as rebooting), and that dynamically generated data can be executed as code (in the von Neumann architecture, data and code share one linear memory space). Therefore, fully static translation between instruction sets before the binary runs is generally not possible.

The simplest and crudest solution is “simulated execution”: allocate a large array as the memory of the “virtual machine”, take the instruction set manual, write a switch statement with countless cases, decode the instruction about to be executed, and simulate it according to the manual. This certainly works, but the efficiency is poor: the virtual machine is at least an order of magnitude slower than the physical machine. Interpreters for “dynamically typed languages” such as Python, PHP, and Ruby mostly execute their compiled intermediate code in this way, which is one reason they are hard to make fast. The well-known x86 emulator Bochs uses simulated execution; it is slow, but it has good compatibility and is less prone to security vulnerabilities.
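As a minimal sketch of simulated execution, the loop below interprets a made-up instruction set. The opcodes (LOAD, ADD, SUB, JNZ, HALT), the tuple encoding, and the `run` function are all hypothetical and chosen only for illustration; a real emulator such as Bochs decodes actual x86 machine code and models far more state.

```python
# Toy "simulated execution": a fetch-decode-execute loop over a made-up
# instruction set. The opcodes are hypothetical, not x86.
LOAD, ADD, SUB, JNZ, HALT = range(5)

def run(program, num_regs=4):
    """Interpret `program`, a list of (opcode, a, b) tuples; return the registers."""
    regs = [0] * num_regs
    pc = 0  # program counter of the virtual machine
    while True:
        op, a, b = program[pc]   # fetch and decode
        pc += 1
        if op == LOAD:           # regs[a] = immediate value b
            regs[a] = b
        elif op == ADD:          # regs[a] += regs[b]
            regs[a] += regs[b]
        elif op == SUB:          # regs[a] -= regs[b]
            regs[a] -= regs[b]
        elif op == JNZ:          # jump to address b if regs[a] != 0
            if regs[a] != 0:
                pc = b
        elif op == HALT:
            return regs
        else:
            raise ValueError(f"unknown opcode {op}")

# Sum 5 + 4 + 3 + 2 + 1: r0 is the accumulator, r1 the counter, r2 the constant 1.
program = [
    (LOAD, 0, 0),
    (LOAD, 1, 5),
    (LOAD, 2, 1),
    (ADD, 0, 1),    # address 3: r0 += r1
    (SUB, 1, 2),    # r1 -= 1
    (JNZ, 1, 3),    # loop back to address 3 while r1 != 0
    (HALT, 0, 0),
]
result = run(program)
```

Every guest instruction costs one trip through the `if`/`elif` chain, which is where the order-of-magnitude slowdown comes from.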

Instruction translation is hard to do, but that is no reason to give up on it altogether. If a for loop runs 100 million times and consists only of operations like addition and subtraction, translating it into machine code and executing it directly will certainly be faster than simulated execution. Put the word “dynamic” in front of binary translation: while the program runs, the parts that can be translated are translated into machine code of the target architecture for direct execution (and cached for reuse); when untranslatable code is reached, control falls back to the simulator, which simulates the privileged instructions and then goes on to translate the code that follows. The JIT (Just-In-Time) compilation used by Java, .NET, JavaScript, and others today follows a similar approach.
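The translate-and-cache idea can be sketched on a toy scale. In the example below, a made-up instruction set (hypothetical opcodes LOAD, ADD, SUB, JNZ, HALT) is translated one basic block at a time into a Python function, which stands in for native machine code, and the result is kept in a translation cache keyed by the block's start address. The function names are illustrative assumptions; this is not how QEMU is structured internally.

```python
# Toy dynamic translation: translate each basic block once, cache it, reuse it.
LOAD, ADD, SUB, JNZ, HALT = range(5)

def translate_block(program, start):
    """Translate the basic block beginning at `start` into a Python function.

    The generated function mutates `regs` and returns the next program
    counter, or None if the block ends in HALT.
    """
    lines = ["def block(regs):"]
    pc = start
    while True:
        op, a, b = program[pc]
        pc += 1
        if op == LOAD:
            lines.append(f"    regs[{a}] = {b}")
        elif op == ADD:
            lines.append(f"    regs[{a}] += regs[{b}]")
        elif op == SUB:
            lines.append(f"    regs[{a}] -= regs[{b}]")
        elif op == JNZ:   # a branch ends the basic block
            lines.append(f"    return {b} if regs[{a}] != 0 else {pc}")
            break
        elif op == HALT:
            lines.append("    return None")
            break
        else:
            raise ValueError(f"unknown opcode {op}")
    namespace = {}
    exec("\n".join(lines), namespace)   # "emit" the translated code
    return namespace["block"]

def run_translated(program, num_regs=4):
    """Run `program`; return (registers, number of blocks translated)."""
    regs = [0] * num_regs
    cache = {}            # translation cache: start address -> function
    translations = 0
    pc = 0
    while pc is not None:
        block = cache.get(pc)
        if block is None:                 # cache miss: translate now
            block = cache[pc] = translate_block(program, pc)
            translations += 1
        pc = block(regs)                  # run translated code directly
    return regs, translations

program = [
    (LOAD, 0, 0), (LOAD, 1, 5), (LOAD, 2, 1),
    (ADD, 0, 1),          # address 3: loop body starts here
    (SUB, 1, 2),
    (JNZ, 1, 3),
    (HALT, 0, 0),
]
regs, translations = run_translated(program)
```

The loop body at address 3 executes several times but is translated only once; the per-instruction decoding cost is paid at translation time rather than on every iteration.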

Some people may ask: if I am only virtualizing the same architecture, such as a 64-bit x86 system on a 64-bit x86 system, do I still need instruction translation? Yes. For example, the virtual machine may read the privileged registers GDTR, LDTR, IDTR, and TR (with the SGDT, SLDT, SIDT, and STR instructions). On the original x86 these reads do not trigger a processor exception (so the virtual machine manager cannot capture them), yet the values of these registers need to be “faked” for the virtual machine. This requires that, before such an instruction is executed, it be replaced with a call into the virtual machine manager.

Unfortunately, in the era of mainframes, the genius Fabrice Bellard who could do dynamic binary translation well was just born (1972).

Fabrice Bellard


Fabrice Bellard’s QEMU (Quick EMUlator) is currently the most popular virtualization software using dynamic binary translation technology. It can simulate x86, x86_64, ARM, MIPS, SPARC, PowerPC and other processor architectures, and run the operating systems on these architectures without modification. When we enjoy the fun of audio and video, when we smoothly run virtual machine systems of various architectures, we should not forget the creator of ffmpeg and qemu, the great god Fabrice Bellard.

Take the Intel x86 architecture we are most familiar with as an example. It has four privilege levels, 0 to 3. Generally, the operating system kernel (privileged code) runs in ring 0 (the highest privilege level), and user processes (non-privileged code) run in ring 3 (the lowest). (Even if you are root, your process still runs in ring 3! Only inside the kernel is it ring 0. With supreme power comes great responsibility: kernel programming has many restrictions. Just venting.)

With a virtual machine, the guest kernel (Guest OS) runs in ring 1, and the host operating system (VMM, Virtual Machine Monitor) runs in ring 0. For example, when a Linux virtual machine is installed on Windows, the Windows kernel runs in ring 0, the virtualized Linux kernel runs in ring 1, and the applications in the Linux system run in ring 3.

When the virtual machine system executes a privileged instruction, the virtual machine manager (VMM) captures it immediately (ring 0 outranks ring 1, after all), simulates the execution of the privileged instruction, and then returns to the virtual machine system. To improve the performance of system calls and interrupt handling, dynamic binary translation is sometimes used to replace these privileged instructions, before they run, with calls to the virtual machine manager API. If all privileged instructions are simulated seamlessly, the virtual machine system behaves as if it were running on a physical machine and cannot tell that it is inside a virtual machine. (In reality, of course, there are still some tell-tale flaws.)
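The capture-and-simulate cycle can be illustrated with a toy model. Here a Python exception plays the role of the processor fault raised when deprivileged guest code executes a privileged instruction, and a small VMM class applies the effect to per-guest virtual CPU state instead of the real hardware. The instruction names and the classes `PrivilegedInstructionTrap` and `ToyVMM` are hypothetical.

```python
# Toy trap-and-emulate: privileged guest instructions fault into the VMM,
# which emulates them against virtual CPU state and then resumes the guest.
PRIVILEGED = {"cli", "sti", "write_cr3"}

class PrivilegedInstructionTrap(Exception):
    """Stands in for the fault raised when ring 1 code runs a privileged instruction."""
    def __init__(self, name, operand):
        super().__init__(name)
        self.name = name
        self.operand = operand

def guest_step(instr, regs):
    """Execute one guest instruction "directly"; privileged ones trap."""
    name, operand = instr
    if name in PRIVILEGED:
        raise PrivilegedInstructionTrap(name, operand)
    if name == "inc":
        regs[operand] += 1
    else:
        raise ValueError(f"unknown instruction {name}")

class ToyVMM:
    def __init__(self):
        self.guest_regs = {"ax": 0}
        # Virtual copies of privileged state; the real CPU state is untouched.
        self.virtual_cpu = {"interrupts_enabled": True, "cr3": 0}
        self.traps = 0

    def emulate(self, trap):
        if trap.name == "cli":
            self.virtual_cpu["interrupts_enabled"] = False
        elif trap.name == "sti":
            self.virtual_cpu["interrupts_enabled"] = True
        elif trap.name == "write_cr3":
            self.virtual_cpu["cr3"] = trap.operand

    def run(self, guest_code):
        for instr in guest_code:
            try:
                guest_step(instr, self.guest_regs)
            except PrivilegedInstructionTrap as trap:
                self.traps += 1        # each trap is a costly detour
                self.emulate(trap)     # then execution returns to the guest

vmm = ToyVMM()
vmm.run([("cli", None), ("inc", "ax"), ("write_cr3", 0x5000),
         ("inc", "ax"), ("sti", None)])
```

The `traps` counter makes the cost visible: ordinary instructions run without VMM involvement, while each privileged one pays for a round trip.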

Asking the Guest OS and the CPU for Help

Although dynamic binary translation is much faster than simulated execution, it still falls well short of physical machine performance, because every privileged instruction has to take a detour through the virtual machine manager (which simulates it). To speed up the virtual machine, people came up with two methods:

- Let the virtual machine operating system help, the so-called “paravirtualization” or OS-assisted virtualization

- Let the CPU help, the so-called “hardware-assisted virtualization”

These two methods are not mutually exclusive. Many modern virtualization solutions, such as Xen, VMware, use both methods at the same time.

Paravirtualization

Since the difficulty and performance bottleneck of dynamic binary translation lie in simulating all those privileged instructions, can we modify the kernel of the virtual machine system so that those privileged instructions become easier to handle? After all, in most cases we do not need to deliberately “hide” the virtualization layer from the virtual machine; we need to provide the necessary isolation between virtual machines without causing too much performance overhead.

The prefix of Paravirtualization is para-, which means “with” or “alongside”. That is, the virtual machine system and the virtualization layer (host system) are no longer strictly superior and inferior, but a relationship of mutual trust and cooperation. The virtualization layer needs to trust the virtual machine system to a certain extent. In the x86 architecture, both the Virtualization Layer and the kernel of the virtual machine system (Guest OS) run in ring 0.

The kernel of the virtual machine system needs to be specially modified to change the privileged instructions into calls to the virtualization layer API. In modern operating systems, since these architecture-related privileged operations are all encapsulated (for example, the arch/ directory in the Linux kernel source code), this modification of the virtual machine kernel source code is simpler than binary translation that needs to consider various corner cases.

Compared with full virtualization using binary translation, paravirtualization sacrifices universality for performance, because any operating system can run on a full virtualization platform without modification, and each paravirtualized operating system kernel has to be manually modified.

Hardware-Assisted Virtualization

The same function, when implemented with dedicated hardware, is almost always faster than when implemented in software. This is almost a golden rule. When it comes to virtualization, if Bill can’t handle it, he naturally asks Andy for help. (Bill is Bill Gates, co-founder of Microsoft, and Andy is Andy Grove, long-time CEO of Intel.)

The concept of hardware assisting software in implementing virtualization is not new. As early as 1974, the famous paper “Formal Requirements for Virtualizable Third Generation Architectures” by Popek and Goldberg proposed three basic conditions for a virtualizable architecture:

- Equivalence: the virtual machine manager provides a virtual environment essentially identical to the real machine;

- Efficiency: a program running in the virtual machine is not much slower than on the physical machine, even in the worst case;

- Resource control: the virtual machine manager can fully control all system resources. That is, a program in the virtual machine cannot access resources not allocated to it, and in some cases the virtual machine manager can reclaim resources already allocated to the virtual machine.

The early x86 instruction set did not meet these conditions, so the virtual machine manager needed to do complex dynamic binary translation. Since the main overhead of binary translation is “capturing” the privileged instructions of the virtual machine system, what the CPU can do is help with the “capturing”. Intel’s solution is called VT-x, which keeps the state of each virtual machine in a Virtual Machine Control Structure (VMCS) and was launched in the winter of 2005. AMD did not want to be left behind and subsequently launched the similar AMD-V, which uses a Virtual Machine Control Block (VMCB).

Intel added a “Root Mode” for the exclusive use of the virtual machine manager (VMM) on top of the original four privilege levels. This is like the fearless Monkey King (ring 0) cannot escape from the palm of the Buddha (Root Mode). In this way, although the virtual machine system runs in ring 0, its executed privileged instructions will still be automatically captured (triggering an exception) by the CPU, falling into the Root Mode of the virtual machine manager, and then returning to the virtual machine system using the VMLAUNCH or VMRESUME instructions.

To facilitate the use of API calls to replace CPU exception capture in paravirtualization, Intel also provides a “system call” from the virtual machine (Non-root Mode) to the virtual machine manager (Root Mode): the VMCALL instruction.

It looks great, doesn’t it? In fact, on the first CPUs to support hardware-assisted virtualization, this method did not necessarily perform better than dynamic binary translation, because in hardware-assisted mode every privileged instruction requires a switch to Root Mode, processing there, and a switch back. This mode switch is like switching between real mode and protected mode: many registers must be initialized and the context saved and restored, not to mention the impact on the TLB and cache. However, Intel and AMD engineers have not been idle: on newer CPUs, the overhead of hardware-assisted virtualization is lower than that of dynamic binary translation.

Finally, let’s talk about something practical: in most BIOS, there is a switch option for hardware-assisted virtualization. Only by enabling options like Intel Virtualization Technology can the virtual machine manager use hardware acceleration. So when using a virtual machine, don’t forget to check the BIOS.

CPU Virtualization is not Everything

After talking so much, it gives everyone an illusion: as long as the CPU instructions are virtualized, everything will be fine. The CPU is indeed the brain of the computer, but other components of the computer should not be ignored. These components include memory and hard drives that are with the CPU day and night, graphics cards, network cards, and various peripherals.

Memory Virtualization

When talking about the ancestor of virtualization, IBM M44/44X, it was mentioned that it proposed the concept of “paging”. That is, each task (virtual machine) seems to monopolize all memory space, and the paging mechanism is responsible for mapping the memory addresses of different tasks to physical memory. If there is not enough physical memory, the operating system will swap the memory of infrequently used tasks to external storage such as disks, and load it back when the infrequently used task needs to be executed (of course, this mechanism was invented later). In this way, the developer of the program does not need to consider how large the physical memory space is, nor does it need to consider whether the memory addresses of different tasks will conflict.

The computers we use now all have a paging mechanism. The application program (user mode process) sees a vast virtual memory (Virtual Memory), as if the entire machine is monopolized by itself; the operating system is responsible for setting the mapping relationship from the user mode process’s virtual memory to physical memory (as shown in the VM1 box in the figure below); the MMU (Memory Management Unit) in the CPU is responsible for querying the mapping relationship (the so-called page table) during the running of the user mode program, and translating the virtual address in the instruction into a physical address.

With the introduction of virtual machines, things have become a bit more complicated. Virtual machines need to be isolated from each other, and the operating system of the virtual machine cannot directly see the physical memory. The red part in the above figure, namely “machine memory” (MA), is managed by the virtualization layer, and the “physical memory” (PA) seen by the virtual machine is actually virtualized, forming a two-level address mapping relationship.

Before Intel’s Nehalem architecture (thanks to Jonathan for the correction), the memory management unit (MMU) only knew how to perform memory addressing according to the classic segmentation and paging mechanism, and did not know the existence of the virtualization layer. The virtualization layer needs to “compress” the two-level address mapping into a one-level mapping, as shown by the red arrow in the above figure. The method of the virtualization layer is:

- When switching to a virtual machine, use that virtual machine’s “shadow page table”, which maps virtual addresses (VA) directly to machine addresses (MA), as the page table of the physical machine;

- If the virtual machine operating system tries to modify the CR3 register that points to the page table (for example, when switching page tables between processes), the operation is intercepted (through dynamic binary translation, or in hardware-assisted virtualization schemes by triggering an exception and falling into the virtualization layer) and CR3 is pointed at the corresponding “shadow page table” instead;

- If the virtual machine operating system tries to modify the content of the page table, such as mapping a new physical memory (PA) to a process’s virtual memory (VA), the virtualization layer needs to intercept it, allocate space for the physical address (PA) in the machine memory (MA), and map the virtual memory (VA) in the “shadow page table” to the machine memory (MA);

- If a virtual machine accesses a page and it is swapped out to external storage, it will trigger a page fault, and the virtualization layer is responsible for distributing the page fault to the operating system of the virtual machine.
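The steps above can be sketched with dictionaries standing in for page tables. This is a toy model under several assumptions: single-level tables, one machine page handed out per guest-physical page on first use, and hypothetical hook names (`on_guest_cr3_write`, `on_guest_pte_write`) for the points where the virtualization layer intercepts the guest.

```python
# Toy shadow page table: the VMM collapses VA -> PA (guest) and PA -> MA (VMM)
# into a single VA -> MA table that the real MMU could walk.
PAGE_SIZE = 4096

class ShadowPager:
    def __init__(self):
        self.p2m = {}                    # guest-physical page -> machine page
        self.shadow = {}                 # guest page-table root -> {VA page: MA page}
        self.active_root = None
        self.next_machine_page = 0x100   # arbitrary start of free machine pages

    def _machine_page_for(self, ppage):
        """Allocate machine memory for a guest-physical page on first use."""
        if ppage not in self.p2m:
            self.p2m[ppage] = self.next_machine_page
            self.next_machine_page += 1
        return self.p2m[ppage]

    def on_guest_cr3_write(self, root):
        """Guest switched page tables: activate the matching shadow table."""
        self.shadow.setdefault(root, {})
        self.active_root = root

    def on_guest_pte_write(self, root, vpage, ppage):
        """Guest mapped VA page -> PA page: record VA page -> MA page instead."""
        self.shadow.setdefault(root, {})[vpage] = self._machine_page_for(ppage)

    def translate(self, vaddr):
        """What the hardware MMU would compute using the active shadow table."""
        vpage, offset = divmod(vaddr, PAGE_SIZE)
        table = self.shadow[self.active_root]
        if vpage not in table:
            # A real VMM would forward this page fault to the guest OS.
            raise LookupError(f"page fault at {vaddr:#x}")
        return table[vpage] * PAGE_SIZE + offset

pager = ShadowPager()
pager.on_guest_cr3_write(0x1000)
pager.on_guest_pte_write(0x1000, vpage=0x10, ppage=0x2)
machine_addr = pager.translate(0x10 * PAGE_SIZE + 0x34)
```

Every guest page-table update goes through `on_guest_pte_write`, which is exactly the detour through the virtualization layer that makes this scheme expensive.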

Every operation on the page table takes a detour through the virtualization layer, which is no small overhead. As part of hardware-assisted virtualization, Intel introduced EPT (Extended Page Tables) starting with the Nehalem architecture, and AMD introduced NPT (Nested Page Tables), allowing the memory management unit (MMU) to support Second Level Address Translation (SLAT): a second set of page tables is provided for the virtualization layer; the MMU first looks up the original page table with the virtual address (VA) to get the physical address (PA), and then looks up the new page table to get the machine address (MA). A “shadow page table” is no longer required.

Some people may worry that the added level of mapping relationship will slow down memory access speed. In fact, whether or not second-level memory translation (SLAT) is enabled, the page table cache (Translation Lookaside Buffer, TLB) will store the mapping from virtual address (VA) to machine address (MA). If the hit rate of TLB is high, the added level of memory translation will not significantly affect memory access performance.
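A small model makes the TLB argument concrete. The sketch below assumes flat, single-level tables (real hardware walks multi-level tables, and the guest's own page-table pages also need the second-stage lookup); the class name `SlatMmu` and its fields are hypothetical.

```python
# Toy two-stage translation with a TLB that caches the combined VA -> MA result.
PAGE_SIZE = 4096

class SlatMmu:
    def __init__(self, guest_pt, nested_pt):
        self.guest_pt = guest_pt     # VA page -> PA page, maintained by the guest OS
        self.nested_pt = nested_pt   # PA page -> MA page, maintained by the VMM
        self.tlb = {}                # VA page -> MA page
        self.walks = 0               # number of full two-stage walks performed

    def translate(self, vaddr):
        vpage, offset = divmod(vaddr, PAGE_SIZE)
        mpage = self.tlb.get(vpage)
        if mpage is None:                     # TLB miss: do both lookups
            self.walks += 1
            ppage = self.guest_pt[vpage]      # stage 1: VA -> PA
            mpage = self.nested_pt[ppage]     # stage 2: PA -> MA
            self.tlb[vpage] = mpage           # cache the end-to-end mapping
        return mpage * PAGE_SIZE + offset

mmu = SlatMmu(guest_pt={0x10: 0x2}, nested_pt={0x2: 0x100})
first = mmu.translate(0x10 * PAGE_SIZE + 0x8)
second = mmu.translate(0x10 * PAGE_SIZE + 0x40)   # same page: served from the TLB
```

Only the first access to a page pays for the extra level; later accesses hit the cached VA-to-MA entry, which is why a high TLB hit rate hides most of the cost.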

Device Virtualization

Components other than CPU and memory are collectively referred to as peripherals. The communication between the CPU and peripherals is I/O. Each virtual machine needs a hard disk, network, and even graphics card, mouse, keyboard, optical drive, etc. If you have used a virtual machine, you should be familiar with these configurations.

Virtual device configuration in Hyper-V


There are generally three ways of device virtualization:

- Virtual device, shared use

- Direct assignment, exclusive access

- With the help of physical devices, virtualize multiple “small devices”

Let’s take the network card as an example to see what the above three device virtualization methods are like:

- The most classic method: For each virtual machine, a network card that is independent of the physical device is virtualized. When the virtual machine accesses the network card, it will fall into the virtual machine manager (VMM). This virtual network card seems to have A/B two sides, A side is inside the virtual machine, and B side is in the virtual machine manager (host). The data packets sent from side A will be sent to side B, and the data packets sent from side B will be sent to side A. There is a virtual switch in the virtual machine manager, which forwards between the B side of each virtual machine and the physical network card. Obviously, the virtual switch implemented purely in software is the performance bottleneck of the system.

- The most extravagant method: Assign a real physical network card to each virtual machine, and map the PCI-E address space of the network card to the virtual machine. When the virtual machine accesses the PCI-E address space of this network card, if the CPU supports I/O virtualization, the I/O MMU in the CPU will map the physical address (PA) to the machine address (MA); if the CPU does not support I/O virtualization, it will trigger an exception and fall into the virtual machine manager, and the virtual machine manager software completes the translation from the physical address to the machine address, and issues the real PCI-E request. When the CPU supports I/O virtualization, the virtual machine accesses the network card without going through the virtualization layer, and the performance is relatively high, but this requires each virtual machine to monopolize a physical network card, and only the rich can afford it.

- The most fashionable method: The physical network card supports virtualization, can virtualize multiple Virtual Functions (VF), the virtualization manager can assign each Virtual Function to a virtual machine, the virtual machine uses a customized driver program, and can see an independent PCI-E device. When the virtual machine accesses the network card, it also directly translates the address through the CPU’s I/O MMU, without going through the virtualization layer. The physical network card has a simple switch inside, which can forward between Virtual Functions and the connected network cable. This method can achieve high performance with a single network card, but it requires a higher-end network card, requires the CPU to support I/O virtualization, and requires the use of customized driver programs in the virtual machine system.
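For the first (pure software) method, the virtual switch inside the virtual machine manager can be sketched as a simple MAC-learning switch. The learning and flooding behavior is an assumption about how such a switch would typically work, not something stated above, and the class and port names are hypothetical.

```python
# Toy software switch: ports are the "B sides" of virtual NICs plus an uplink
# to the physical NIC. Frames are (src_mac, dst_mac, payload) tuples.
class VirtualSwitch:
    def __init__(self):
        self.mac_table = {}   # learned MAC address -> port name
        self.ports = {}       # port name -> list of frames delivered to it

    def add_port(self, name):
        self.ports[name] = []

    def receive(self, in_port, src_mac, dst_mac, payload):
        """Handle a frame arriving on `in_port`; return the ports it was sent to."""
        self.mac_table[src_mac] = in_port            # learn where the sender lives
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress port.
            targets = [p for p in self.ports if p != in_port]
        elif out_port == in_port:
            targets = []                             # destination is on the same port
        else:
            targets = [out_port]
        for port in targets:
            self.ports[port].append((src_mac, dst_mac, payload))
        return targets

switch = VirtualSwitch()
for name in ("vm1", "vm2", "uplink"):
    switch.add_port(name)

flooded = switch.receive("vm1", "aa:aa", "bb:bb", "hello")   # bb:bb not yet known
reply = switch.receive("vm2", "bb:bb", "aa:aa", "hi back")   # aa:aa was learned
direct = switch.receive("vm1", "aa:aa", "bb:bb", "again")    # bb:bb now known
```

Every frame passes through this software path on the host CPU, which is why the text calls the software switch the bottleneck and why the other two methods move forwarding into hardware.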

The I/O MMU is a hardware component that allows peripherals and virtual machines to communicate directly, bypassing the Virtual Machine Manager (VMM), and improving I/O performance. Intel calls it VT-d, and AMD calls it AMD-Vi. It can translate physical addresses (PA) in device registers and DMA requests into machine addresses (MA), and it can also distribute interrupts generated by devices to virtual machines.

It’s practical time again: like hardware-assisted virtualization, the I/O MMU also has a switch in the BIOS, don’t forget to turn it on~

Device virtualization also has “full virtualization” and “paravirtualization”. If the driver in the virtual machine system can be modified, it can directly call the API of the Virtual Machine Manager (VMM), reducing the overhead of simulating hardware devices. This behavior of modifying the driver in the virtual machine to improve performance belongs to paravirtualization.

By installing additional drivers in the virtual machine system, some interactions between the host and the virtual machine (such as sharing the clipboard, dragging and dropping files) can be achieved. These drivers will load hooks in the virtual machine system and use the API provided by the virtualization manager to interact with the host system.

Installing additional drivers in the virtual machine


To summarize, you can go for a cup of tea and savor:

- According to the implementation of the virtualization manager, it is divided into hardware virtualization and software virtualization, and hardware virtualization has exited the historical stage;

- According to whether the virtual machine operating system needs to be modified, it is divided into full virtualization (Full Virtualization) and paravirtualization (Paravirtualization);

- According to how to handle privileged instructions, it is divided into simulation execution (very low efficiency), binary translation (QEMU), and hardware-assisted virtualization (KVM, etc.).

Operating System Level Virtualization

Often we do not need to run an arbitrary operating system in a virtual machine; we just want a certain degree of isolation between different tasks. With the virtualization technologies described so far, each virtual machine is an independent operating system with its own task scheduling, memory management, file system, and device drivers, and it runs a number of system services (disk buffer flushing, logging, scheduled tasks, an ssh server, time synchronization). All of these consume system resources (mainly memory), and the duplicated layers of task scheduling and device drivers in the virtual machine and the virtual machine manager add time overhead. Can virtual machines share one operating system kernel while still maintaining a certain degree of isolation?

This line of thinking is not new. To facilitate development and testing, the seventh edition of UNIX in 1979 introduced the chroot mechanism. chroot makes a process use a specified directory as its root directory. All its file system operations are confined to that directory, so it cannot harm the host system. Although chroot has well-known escape vulnerabilities and does not isolate resources such as processes and networks at all, it is still used to build and test software in a clean environment.

To become a real virtualization solution, file system isolation alone is not enough. The other two important aspects are:

- Isolation of namespaces such as processes, networks, IPC (inter-process communication), users, etc. The virtual machine can only see its own processes, can only use its own virtual network card, will not interfere with the outside when inter-process communication, and the UID/GID inside the virtual machine is independent of the outside.

- Resource limitation and auditing. The program in the virtual machine cannot “run away” and occupy all the CPU, memory, hard disk and other resources of the physical machine. It must be able to count how much resources the virtual machine occupies and be able to limit resources.

The above two things are what the BSD and Linux communities have been gradually doing since the start of the 21st century. In Linux, this isolation is provided by namespaces (PID, network, IPC, user, mount, and so on). When a process is created, new namespaces are requested through flags passed to the clone system call. Resource limitation and accounting are done by cgroups, whose API is exposed through the cgroup virtual file system (usually mounted under /sys/fs/cgroup).
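On a Linux system, both mechanisms can be observed from user space through /proc: /proc/<pid>/cgroup lists the cgroups a process belongs to (one `hierarchy-ID:controller-list:path` entry per line), and /proc/<pid>/ns holds one symbolic link per namespace. The sketch below parses the former and reads the latter. The function names and the sample text, including its cgroup paths, are illustrative assumptions.

```python
import os

def parse_proc_cgroup(text):
    """Parse the contents of /proc/<pid>/cgroup into a list of dicts."""
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # Split on the first two colons only; the path may contain colons.
        hierarchy_id, controllers, path = line.split(":", 2)
        entries.append({
            "hierarchy": int(hierarchy_id),
            "controllers": controllers.split(",") if controllers else [],
            "path": path,
        })
    return entries

def current_cgroups(path="/proc/self/cgroup"):
    """Read and parse the cgroup membership of the calling process (Linux only)."""
    with open(path) as f:
        return parse_proc_cgroup(f.read())

def namespace_ids(pid="self"):
    """Map namespace type to its identifier, e.g. {'pid': 'pid:[4026531836]'} (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    return {name: os.readlink(os.path.join(ns_dir, name))
            for name in os.listdir(ns_dir)}

# Illustrative input only; real paths depend on the system and container runtime.
sample = (
    "12:memory:/example/container1\n"
    "11:cpu,cpuacct:/example/container1\n"
    "0::/user.slice\n"
)
parsed = parse_proc_cgroup(sample)
```

Two processes share a namespace exactly when the corresponding links returned by `namespace_ids` are equal, so comparing the output for a process inside a container with one outside shows which namespaces were unshared.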

This kind of virtualization, where one or more processes run in a “virtual machine” that shares a kernel with the host, is called “operating system-level virtualization” or “task-level virtualization”. Since the kernel features that Linux Containers (LXC) relies on, user namespaces in particular, landed in the mainline kernel around Linux 3.8, operating system-level virtualization has also become known as “containers”. To distinguish it from virtualization where the virtual machine is a complete operating system, the isolated process (or process group) is usually called a “container” rather than a “virtual machine”. Since there is no extra operating system kernel layer, containers are lighter than virtual machines: they start faster and have lower memory and scheduling overhead. More importantly, access to disks and other I/O devices does not go through a virtualization layer, so there is almost no performance loss.

Operating system-level virtualization on Linux did not start with LXC, on the contrary, LXC is a typical “the waves behind the Yangtze River push the waves ahead”. Linux-Vserver, OpenVZ, Parallels Containers are all solutions to implement operating system-level virtualization in the Linux kernel. Although the younger generations have won more attention, as an elder, OpenVZ still has some more “enterprise-level” features than LXC:

- It can audit and limit the disk quota of each container, which is achieved through directory-level disk space auditing (this is also the main reason why freeshell uses OpenVZ instead of LXC);

- Supports checkpoint, live migration;

- The capacity of memory and disk seen in OpenVZ containers by commands like free -m, df -lh is its quota, while what is seen in LXC is the capacity of the physical machine;

- Supports swap space, the behavior of killing processes when OOM (Out Of Memory) occurs is the same as that of physical machines, while LXC will directly fail to allocate memory.

However, OpenVZ sticks to the RHEL route: RHEL 6 still uses the old 2.6.32 kernel, and RHEL 7 has only just been released and OpenVZ has not yet caught up. As a result, the OpenVZ kernel now looks very old; it cannot even run newer versions of systemd, let alone offer the cool new features of the 3.x kernels.

OpenVZ Architecture


Docker: A Good Steward for Containers

A good horse needs a good saddle, such a useful Linux container naturally needs a good steward. Where to get system images? How to version control images? Docker is the recently popular Linux container steward, it is said that even Microsoft has shown goodwill to Docker, intending to develop container support for Windows.

Docker was really born for system operations and maintenance: it greatly reduces the cost of installing and deploying software. Installing software is troublesome for two reasons:

- There are dependencies between software. For example, software on Linux may depend on the standard C library glibc, the cryptographic library OpenSSL, or the Java runtime environment; software on Windows may depend on the .NET Framework or Flash Player. If every piece of software carried all of its dependencies, it would be too bloated. How to find and install dependencies is a big question, and the answer is what distinguishes the various Linux distributions.

- There are conflicts between software. For example, program A depends on glibc 2.13 while program B depends on glibc 2.14; script A needs Python 3 while script B needs Python 2; Apache and Nginx, two web servers, both want to listen on port 80. Installing conflicting software on the same system tends to cause chaos, such as the early “DLL Hell” on Windows. The way to resolve software conflicts is isolation: allow multiple versions to coexist in the system and provide a way to find the matching version.

Let’s see how Docker solves these two problems:

- Pack all the dependencies and the runtime environment of the software into an image, instead of using complex scripts to “install” software in an unknown environment;

- A package containing all dependencies is bound to be large, so Docker images are layered: the image of an application is generally based on a base system image, and only the incremental part needs to be transmitted and stored;

- Docker uses container-based virtualization to run each piece of software in an independent container, avoiding conflicts between the file system paths and runtime resources of different software.

Among them, Docker’s hierarchical image structure depends on Linux’s AUFS (Another Union File System), AUFS can merge and mount the base directory A and the incremental directory B into a directory C, C can see the files in A and B at the same time (if there is a conflict, B’s will prevail), and the modifications to C will be written into B. When a Docker container is started, an incremental directory will be generated and merged and mounted with the Docker image as the base directory, and all write operations of the Docker container will be written into the incremental directory, without modifying the base directory. This achieves file system “version control” at a relatively low cost and reduces the distribution volume of software (only the incremental part needs to be distributed, the base image is what most people already have).
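The merge semantics described here (reads see both layers with the incremental layer winning, writes land only in the incremental layer) can be modeled with Python's `collections.ChainMap`, using dictionaries as stand-ins for directories. This is only an analogy for the lookup and write rules; the file names and contents are made up, and a real union file system also has to handle deletions and copying files up from the base layer.

```python
from collections import ChainMap

# Base directory A: the read-only image layer (contents are illustrative).
base_image = {
    "/bin/sh": "shell v1",
    "/etc/hosts": "127.0.0.1 localhost",
}

# Incremental directory B: created empty when the container starts.
container_layer = {}

# Merged directory C: lookups try B first, then fall back to A.
merged = ChainMap(container_layer, base_image)

# Writes through the merged view go to the first mapping (B) only.
merged["/etc/hosts"] = "127.0.0.1 localhost\n10.0.0.2 db"
merged["/app/run.sh"] = "echo hello"

visible_hosts = merged["/etc/hosts"]   # B's version shadows A's
visible_shell = merged["/bin/sh"]      # falls through to A
```

After these writes `base_image` is unchanged, so the same base layer can back any number of containers, and only `container_layer` needs to be stored or shipped as the increment.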

Relationship between Docker, LXC and kernel components

Docker’s virtualization is based on libcontainer (the picture above is a bit old; at that time Docker was based on LXC). Both libcontainer and LXC are in turn built on mechanisms provided by the Linux kernel, such as cgroups for resource accounting and limiting, chroot for file system isolation, and namespaces.

Interlude: How Freeshell Starts

We know that freeshell uses OpenVZ virtualization. People often ask whether they can change the kernel, and some ask where a freeshell system boots from. In fact, just like a regular Linux system, freeshell starts from /sbin/init inside the virtual machine (that is, process 1), and from there on it is the virtual machine system’s own startup process.

A corner of the Freeshell control panel

In fact, different types of virtualization technologies start booting the virtual machine system from different places:

- Starting from the emulated BIOS, with support for boot methods such as MBR, EFI, and PXE, as in QEMU and VMWare;

- Starting from the kernel, so that the virtual machine image does not contain a kernel, as in KVM and Xen;

- Starting from the init process: the virtual machine is a container that shares the kernel with the host and starts its system services following the operating system’s normal boot process, as in LXC and OpenVZ;

- Running only a specific application or service, also based on containers, as in Docker.

Cloud Operating System OpenStack

So far we have discussed specific technologies for implementing virtualization. But for end users to actually use virtual machines, there needs to be a platform that manages them, a so-called “cloud operating system”. OpenStack is currently the most popular cloud operating system.

OpenStack Cloud Operating System

OpenStack (or any serious cloud operating system) needs to virtualize the various resources in the cloud. Its core management component is called Nova.

- Computing: The virtualization technology discussed in this article is the virtualization of computing. OpenStack can use a variety of virtualization solutions, such as Xen, KVM, QEMU, and Docker. The management component Nova decides which physical machine to schedule a virtual machine onto based on the load of each physical node, and then calls the API of the chosen virtualization solution to create, delete, boot, or shut down the virtual machine.

- Storage: If virtual machine images could only be stored locally on compute nodes, this would hinder both data redundancy and virtual machine migration. Therefore, clouds generally adopt a logically centralized, physically distributed storage system that is independent of the compute nodes; that is, compute nodes generally access their data disks over the network.

- Network: Each customer needs their own virtual network. Keeping the virtual networks of different customers from interfering with each other on the shared physical network is the job of network virtualization. See my other blog post “Overview of Network Virtualization Techniques”.
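The scheduling decision mentioned under “Computing” can be illustrated with a minimal Python sketch. This is not Nova’s scheduler or API: the `Host` class, the `schedule` function, the node names, and the rule “pick the host with the lowest CPU usage that still fits the request” are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    total_cpus: int
    used_cpus: int
    total_ram_mb: int
    used_ram_mb: int

    def fits(self, cpus, ram_mb):
        # A host is a candidate only if it has enough free CPU and RAM.
        return (self.total_cpus - self.used_cpus >= cpus
                and self.total_ram_mb - self.used_ram_mb >= ram_mb)

    def load(self):
        # Fraction of CPUs in use; a real scheduler would weigh more metrics.
        return self.used_cpus / self.total_cpus


def schedule(hosts, cpus, ram_mb):
    """Pick the least-loaded host that fits the request and reserve resources on it."""
    candidates = [h for h in hosts if h.fits(cpus, ram_mb)]
    if not candidates:
        raise RuntimeError("no host has enough free resources")
    chosen = min(candidates, key=Host.load)
    chosen.used_cpus += cpus
    chosen.used_ram_mb += ram_mb
    return chosen


hosts = [
    Host("node1", total_cpus=16, used_cpus=12, total_ram_mb=65536, used_ram_mb=8192),
    Host("node2", total_cpus=16, used_cpus=4, total_ram_mb=65536, used_ram_mb=61440),
    Host("node3", total_cpus=16, used_cpus=8, total_ram_mb=65536, used_ram_mb=16384),
]

placed = schedule(hosts, cpus=2, ram_mb=8192)
print(placed.name)
```

Here node2 has the lowest CPU usage but not enough free memory, so the request goes to node3. After placement, a real system would call the API of the underlying virtualization solution on that node to create the virtual machine.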

In addition to the core virtualization manager Nova, OpenStack also has many components such as the virtual machine image manager Glance, object storage Swift, block storage Cinder, virtual network Neutron, identity authentication service Keystone, control panel Horizon, etc.

OpenStack Control Panel (Horizon)

The following two figures show the architecture of Nova using Docker and Xen as virtualization solutions respectively.

Docker is called by OpenStack Nova’s computing component

Using Xen as OpenStack’s computing virtualization solution

Conclusion

The need for task isolation gave birth to virtualization. We all want isolation to be as thorough as possible without losing too much performance. From emulated execution, which offers the strongest isolation but is too slow to be practical, to the dynamic binary translation and hardware-assisted virtualization used by modern full virtualization, to paravirtualization, which modifies the guest operating system, and on to operating system-level virtualization based on a shared kernel and containers, performance has always been the primary driving force behind each wave of virtualization technology. Still, when choosing a virtualization technology, we need to strike a balance between isolation and performance according to actual needs.

Long-established computing virtualization, together with the relatively new storage and network virtualization technologies, forms the cornerstone of the cloud operating system. As the saying goes, a nine-story terrace rises from a heap of earth. When we enjoy the seemingly inexhaustible computing resources of the cloud, if we can peel away the layers of packaging and understand the essence of computing, perhaps we will cherish those resources more, admire the grandeur and delicacy of the edifice of virtualization technology more, and hold in higher respect the computer masters who led humanity into the cloud era.

References

- Understanding Full Virtualization, Paravirtualization, and Hardware Assist, VMWare, http://www.vmware.com/files/pdf/VMware_paravirtualization.pdf

- A Talk on Virtualization Technology, IBM, http://www.ibm.com/developerworks/cn/linux/l-cn-vt/

- Formal requirements for virtualizable third generation architectures, CACM 1974

- Various network pictures (sorry for not citing one by one)