Google's Internet Ambitions

A few days ago I gave an internal technical talk, and my colleagues’ opinions on it varied widely, so I decided to open the discussion to everyone. This article covers Google’s network infrastructure projects, Google Fiber and Google Loon, as well as its exploration of network protocols with QUIC, and the ambition behind them: to turn the Internet into Google’s own data center.

Wired Network Infrastructure—Google Fiber

The goal of Google Fiber is to bring gigabit Internet access to ordinary households. At gigabit speed, downloading a 7 GB movie takes about one minute (if you are still using a mechanical hard drive, it may not be able to write the data fast enough to keep up). At present the project is piloted in only two US cities, Kansas City and Provo. Google Fiber offers three plans in these cities; taking Kansas City as an example [1]:

- Gigabit network + Google TV: $120/month

- Gigabit network: $70/month

- Free Internet: 5 Mbps download, 1 Mbps upload, no monthly fee, but a one-time $300 installation fee is required.

The third plan is slower than the service most US carriers offer, and most households already buy TV service from a cable operator. So for families that can afford it, comparing the three plans makes the second one (gigabit Internet at $70/month) stand out as the best value.

Two questions are worth examining here:

- Is it technically feasible to give everyone a gigabit connection?

- Can a $70/month fee recover what it costs Google to build a gigabit network?

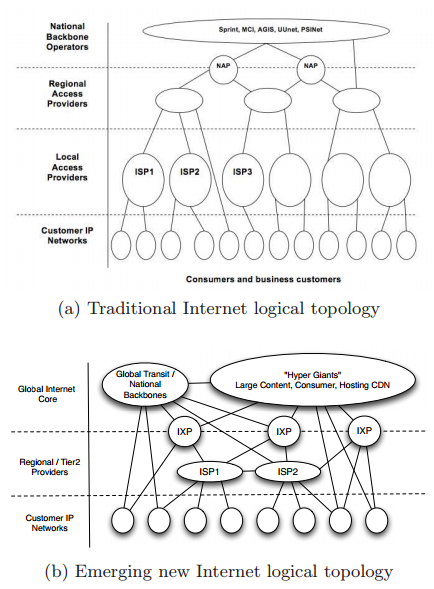

Flattening of Internet Topology

Many universities and companies have gigabit internal networks, which in theory allow download speeds of about 120 MB/s. The outside world is fascinating, but access to it is usually slower than the campus network. The cable itself is always fast; it is the operator that limits the speed. The limit exists partly to push you toward more expensive plans, but it is also a consequence of the tree-like topology of the public Internet. If all the traffic from the national education network to China Unicom has to pass through a single 10 Gbps fiber link, how could it be fast?
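The figures in this section are easy to check with a few lines of arithmetic. The sketch below is only illustrative: the function names are made up for this example, decimal units are assumed, and the number of users sharing the 10 Gbps link is a hypothetical value, not a measurement.

```python
def link_rate_mb_per_s(gbps: float) -> float:
    """Raw line rate in MB/s for a link of `gbps` Gbit/s (decimal units, 8 bits per byte)."""
    return gbps * 1000 / 8


def download_seconds(size_gb: float, gbps: float) -> float:
    """Ideal transfer time for `size_gb` gigabytes, ignoring protocol overhead."""
    return size_gb * 8 / gbps


def fair_share_mbps(bottleneck_gbps: float, active_users: int) -> float:
    """Per-user rate in Mbit/s if `active_users` split one bottleneck link evenly."""
    return bottleneck_gbps * 1000 / active_users


print(link_rate_mb_per_s(1))        # 125.0 MB/s before framing and protocol overhead
print(download_seconds(7, 1))       # 56.0 seconds for a 7 GB movie
print(fair_share_mbps(10, 10_000))  # 1.0 Mbit/s each (the user count is hypothetical)
```

The raw rate of 125 MB/s drops somewhat once framing and protocol headers are subtracted, which is consistent with the roughly 120 MB/s quoted above. The last line shows why a single shared trunk becomes the bottleneck no matter how fast each access link is.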

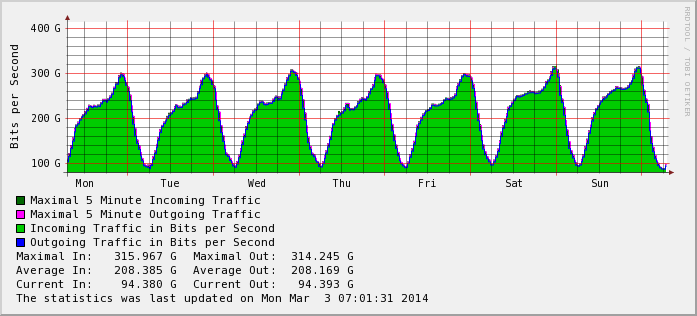

In fact, with the growth of Internet giants and CDNs, the topology of the Internet is quietly changing. A SIGCOMM 2010 paper [2] pointed out that with the rise of large content providers (as early as 2009, Google already accounted for 5% of all Internet traffic), the Internet topology is gradually flattening. The Internet increasingly relies on IXPs (Internet Exchange Points), which interconnect different ISPs (Internet Service Providers) and connect them to the large content providers. This “layered mesh” topology effectively relieves the bottleneck between ISPs. Many IXPs publish their statistics, for example the traffic graph of the Hong Kong IXP [3].

Turning Cities into Data Centers

However, these topology changes occur only in the core of the Internet. In the capillaries at its edge, the residential wired networks built by traditional operators are still trees. By contrast, today’s large data centers hold hundreds of thousands of servers, each of which can still have a 10 Gbps connection; even if connections are established between arbitrary pairs of machines, the total traffic can reach the 10 Tbps level (in practice, network connections always show some locality, so the traffic that can actually be supported is higher than this). If such a topology were applied to a city, a city-scale gigabit “LAN” would not be a myth. The cost of cabling and switching equipment would certainly rise, though, which is why Google Fiber is so expensive.

Why is Google more eager than traditional operators and other Internet companies to bring high-speed networks into the “last mile”?

- Technically, traditional operators do not build data centers, let alone do networking research. They simply buy switches and routers from well-known vendors and hire “certified engineers” to configure them.

- Google is the world’s largest content provider. For Google, the benefits of Google Fiber not only include users’ monthly Internet fees, but also the traffic costs saved for other products (such as YouTube).

- If the user’s network is fast enough, Google can move many local applications onto the Internet, make “downloads” a thing of the past, and further squeeze the room left for non-Internet companies.

- The inertia and laziness of traditional operators.

Therefore, the “gigabit access” promised by Google Fiber is not only technically feasible, but also profitable from the perspective of the entire company.

Mobile Network Infrastructure—Google Loon

Having covered wired networks, let’s turn to wireless. Whether it is an indoor wireless network (Wi-Fi) or a wide-area cellular network (3G), there are two concerns:

- The coverage of wireless networks is limited, and some remote places are not worth investing in building wireless base stations;

- A single access point can serve only a limited number of people; when too many connect, the network slows down or connections fail altogether.

Google Loon [4] targets a scenario similar to that of 3G. The balloons are solar powered, float in the stratosphere at an altitude of about 20 km (clouds and airplanes stay at around 10 km, so the balloons are not affected by them), drift with the wind, and form a huge wireless mesh network. The balloons do not drift randomly, however: using wind data from meteorological agencies, they move up and down between wind layers in a controlled way. Because the air in different layers moves at different speeds and in different directions, choosing the right layer keeps each balloon’s trajectory largely under control.

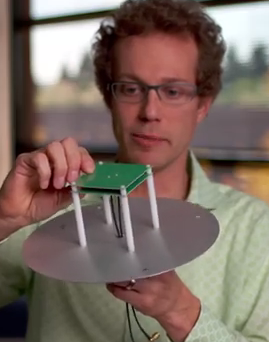

Each balloon has a diameter of about 15 meters, and its wireless signal covers a range of about 40 km. People on the ground use a dedicated antenna to receive signals from the balloon. The picture of the receiving antenna (from YouTube) shows the signal receiver in green and the reflector in silver metal. This structure ensures that no matter where the balloon is in the sky, its signal reaches the receiver either directly or by reflection.

The choice of a dedicated receiver instead of Wi-Fi is probably driven by efficiency as well as signal strength. A wireless network is a broadcast channel: if everyone speaks at once, no one can be heard clearly. The Wi-Fi protocol suits small self-organizing networks of fewer than 30 people. Each station listens until the channel is quiet and then waits a random time before asking to speak. If the random wait is short, the requests are likely to collide; if it is long, channel utilization drops. The fundamental reason that base-station-controlled protocols such as 3G can support more users is the coordination by the base station. Whether the scheme is frequency division, time division, or code division, the base station decides who speaks when, so collisions cannot occur and channel utilization improves.
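The trade-off in the random waiting time can be made concrete with a deliberately simplified model: every station picks one backoff slot uniformly at random from a window, and the earliest slot wins. This is a sketch of a single contention round, not the actual Wi-Fi backoff procedure (which, among other things, grows the window after collisions); the function names are chosen for this example only.

```python
def collision_probability(stations: int, window: int) -> float:
    """Probability that the earliest chosen slot is picked by two or more stations."""
    p_unique_winner = 0.0
    for slot in range(window):
        # One given station picks `slot` and every other station picks a later slot.
        later = (window - 1 - slot) / window
        p_unique_winner += stations * (1 / window) * later ** (stations - 1)
    return 1.0 - p_unique_winner


def mean_idle_slots(stations: int, window: int) -> float:
    """Expected number of idle slots before the first transmission (E[min slot])."""
    # E[min] = sum over k >= 1 of P(every station picks a slot >= k).
    return sum(((window - k) / window) ** stations for k in range(1, window))


if __name__ == "__main__":
    for window in (16, 64, 256, 1024):
        print(window,
              round(collision_probability(30, window), 3),
              round(mean_idle_slots(30, window), 1))
```

With 30 stations, a small window makes collisions likely, while a large window wastes more idle slots before anyone transmits. A base station that assigns slots explicitly avoids both costs.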

Each balloon has two antennas working in different frequency bands, one for communicating with ground users or base stations and one for communicating with other balloons. This is an important way for a wireless mesh network to stay efficient. With a single frequency band, balloon-to-balloon traffic and balloon-to-ground traffic compete for the same channel, so the efficiency of the network falls in inverse proportion to the number of hops, or even decays exponentially. This is why mesh networks built from single-band wireless routers rarely work efficiently, and Google Loon avoids the problem.
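The two decay patterns mentioned above can be written down as toy models. Both are assumptions made for illustration, not measurements of Loon or of any real mesh: the "inverse" model assumes all hops share one channel and take turns, and the "halving" model assumes each single-radio relay spends half its airtime receiving and half forwarding.

```python
def single_band_throughput(link_mbps: float, hops: int, model: str = "inverse") -> float:
    """End-to-end throughput over `hops` relays that all share one frequency band."""
    if hops < 1:
        raise ValueError("hops must be at least 1")
    if model == "inverse":
        # Only one hop can transmit at a time, so the hops split the airtime.
        return link_mbps / hops
    if model == "halving":
        # Each additional relay halves what it can pass on.
        return link_mbps / 2 ** (hops - 1)
    raise ValueError(f"unknown model: {model}")


for hops in (1, 2, 4, 8):
    print(hops,
          single_band_throughput(100, hops, "inverse"),
          single_band_throughput(100, hops, "halving"))
```

Putting the balloon-to-balloon links on their own band removes the competition between relaying and serving ground users, which is the point the paragraph above makes.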

Google Loon can naturally provide wide-area coverage of remote regions at a lower cost. Another use that few media reports mention is adjusting the number of access points dynamically, either to relieve the bandwidth shortage at large events or to provide an emergency network after a natural disaster. For example, if a big event is held on a vast grassland, building a large number of temporary 3G base stations is neither economical nor fast enough. Google Loon, however, can gather a large number of balloons over the area in advance to provide sufficient bandwidth. Google holds a patent describing how this can be done [5].

Accelerating the Evolution of Network Protocols - QUIC

Networking authority Scott Shenker said at the 2013 Open Networking Summit that network protocols are forever being patched and lack consistent theoretical principles. Operating systems and compilers rest on a complete body of theory, while networking is a patchwork of protocols. The main reason is that every change to a network protocol involves hardware and may involve multiple organizations, so when new needs arise, people can only add patches instead of designing a more sensible architecture. He therefore argued that SDN (Software Defined Networking) is the direction in which networking should develop, since software is always easier to modify than hardware.

Precisely because a data center is run by a single company that can do whatever it wants inside it, most innovation in wired network protocols currently happens in data centers. Google’s ambition, however, is not limited to making its own data centers more efficient; it wants to make the whole Internet more efficient. Google obviously cannot get every network equipment vendor and operator to cooperate, so it has to bypass them. Fortunately for Google, it is the world’s largest Internet content provider and controls the browser with the largest market share, Chrome, and the leading mobile operating system, Android, so it can hollow out part of the network protocol stack and innovate on protocols at the application layer.

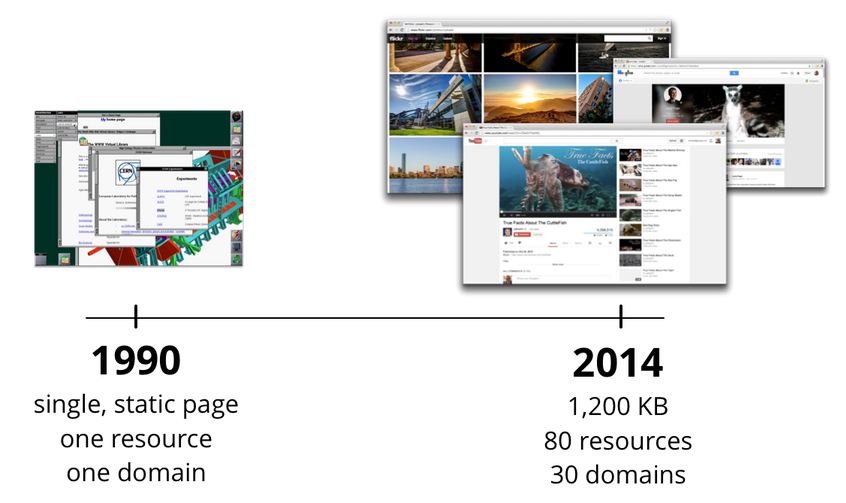

What’s wrong with the current HTTP + SSL/TLS + TCP + IP protocol stack? (Image source: [6])

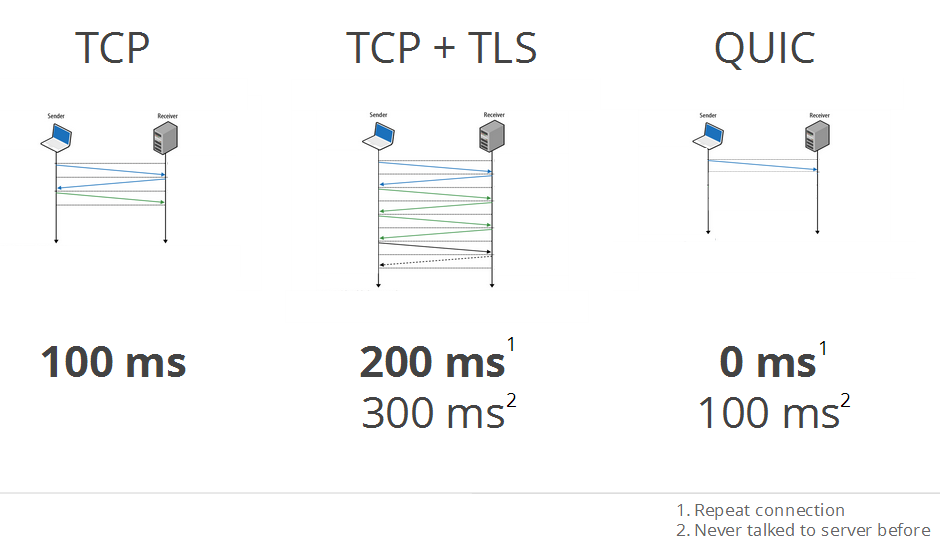

- HTTP does not support multiplexing. A modern web page often pulls in dozens of resources (JS, CSS, images); opening dozens of TCP connections for them makes the handshake overhead too high, while fetching them serially over a single TCP connection makes the wait too long.

- SSL/TLS has to perform a handshake for each TCP connection separately, and each handshake takes two round trips, which comes on top of the TCP handshake and makes matters worse.

- The congestion control algorithm in TCP is a general-purpose compromise and cannot fully exploit the characteristics of a particular network. On a high-bandwidth, high-latency network (a “long fat pipe”), for example, TCP needs many round trips of exponential growth before the window is large enough, and as soon as a packet is lost the window is halved. If the characteristics of the network are known in advance, congestion control can be far more precise, and bandwidth can even be reserved for real-time applications such as video.

- The IP protocol uses IP addresses to locate hosts. That works well in wired networks, but in wireless networks users move and may keep switching between networks, so the IP address used for routing keeps changing too. Reconnecting automatically after each switch is only a stopgap, and it obviously cannot use two wireless networks (Wi-Fi and 3G) at the same time.
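The congestion-control item in the list above can be put into numbers. The sketch below assumes an idealized slow start that doubles the window every round trip from an initial window of 10 segments, 1460-byte segments, and a classic Reno-style recovery that adds one segment per round trip after the window is halved. These parameters are illustrative assumptions; real TCP stacks differ.

```python
import math


def bdp_segments(bandwidth_bps: float, rtt_s: float, mss_bytes: int = 1460) -> int:
    """Window (in segments) needed to keep the pipe full: bandwidth x delay / MSS."""
    return math.ceil(bandwidth_bps * rtt_s / 8 / mss_bytes)


def slow_start_rtts(target_segments: int, initial_window: int = 10) -> int:
    """Round trips of doubling needed before the window reaches `target_segments`."""
    window, rtts = initial_window, 0
    while window < target_segments:
        window *= 2
        rtts += 1
    return rtts


def reno_recovery_rtts(window_segments: int) -> int:
    """Round trips to climb back after a halving, adding one segment per round trip."""
    return window_segments - window_segments // 2


target = bdp_segments(1e9, 0.1)    # 1 Gbit/s link with a 100 ms round-trip time
print(target)                      # 8562 segments
print(slow_start_rtts(target))     # 10 round trips, about 1 second at 100 ms
print(reno_recovery_rtts(target))  # 4281 round trips, about 7 minutes at 100 ms
```

Under these assumptions a single lost packet costs minutes of reduced throughput on a long fat pipe, which is why a protocol that knows more about the network can do much better.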

QUIC (Quick UDP Internet Connections) is built on UDP and bypasses TCP, which means it does away with the notion of a “connection” tied to IP addresses. In its place, the header of every QUIC packet carries a session ID (called the connection ID in the QUIC specification) that identifies the connection, the same principle as a browser cookie. Because a QUIC connection is identified by the session ID, packets can reach the QUIC server over any route, so QUIC can in principle use several wireless networks (Wi-Fi, 3G) at once and switch between them seamlessly. This is similar in principle to Multipath TCP.
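The idea of identifying a connection by an ID in the packet rather than by addresses can be sketched as a lookup table on the server. This is a minimal illustration, not QUIC's wire format or API: the class names, the tuple used for addresses, and the payloads are all hypothetical, and a real implementation would also authenticate packets and validate new paths.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    session_id: int
    received: list = field(default_factory=list)  # payloads in arrival order
    paths: set = field(default_factory=set)       # source addresses seen so far


class Demultiplexer:
    """Routes packets to sessions by session ID, ignoring the source address."""

    def __init__(self) -> None:
        self.sessions: dict = {}

    def on_packet(self, src_addr: tuple, session_id: int, payload: bytes) -> Session:
        session = self.sessions.setdefault(session_id, Session(session_id))
        session.paths.add(src_addr)       # a new address does not create a new session
        session.received.append(payload)
        return session


demux = Demultiplexer()
demux.on_packet(("192.0.2.10", 5000), 42, b"GET /a")     # sent over Wi-Fi
demux.on_packet(("198.51.100.7", 6000), 42, b"GET /b")   # same session, now over 3G
print(len(demux.sessions), len(demux.sessions[42].paths))  # 1 2
```

A TCP server would key the same table on the address and port pairs, so the second packet would look like a stranger and the connection would have to be re-established.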

A QUIC connection is multiplexed, which means that multiple HTTP requests to the same website can be in flight at the same time over one QUIC connection. The whole QUIC connection needs only one handshake, which saves the overhead of SSL/TLS + TCP, where every connection opened for HTTP requests costs two to three round trips of handshaking. (As shown in the figure below; the picture is from [6].)

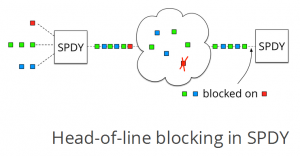

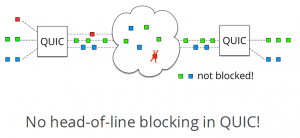
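A rough round-trip count shows what multiplexing and a single handshake save. The model below is a simplification with stated assumptions: each request and its response fit in one round trip, bandwidth is unlimited, TLS costs two round trips as described earlier, and the multiplexed handshake is assumed to cost one round trip. The function names are made up for this example.

```python
from typing import Tuple


def tcp_tls_setup_rtts(tls_rtts: int = 2) -> int:
    """Round trips before the first request: one for TCP plus `tls_rtts` for TLS."""
    return 1 + tls_rtts


def serial_one_connection(resources: int, setup: int) -> int:
    """One connection, one request per round trip: page latency in round trips."""
    return setup + resources


def parallel_connections(resources: int, setup: int) -> Tuple[int, int]:
    """One connection per resource: (latency, total handshake round trips)."""
    return setup + 1, resources * setup


def multiplexed(handshake_rtts: int = 1) -> Tuple[int, int]:
    """One multiplexed connection: (latency, total handshake round trips)."""
    return handshake_rtts + 1, handshake_rtts


setup = tcp_tls_setup_rtts()
print(serial_one_connection(30, setup))  # 33 round trips of waiting
print(parallel_connections(30, setup))   # (4, 90): fast, but 90 handshake round trips
print(multiplexed())                     # (2, 1)
```

For a page with 30 resources, the serial case pays in latency and the parallel case pays in handshake traffic, while a single multiplexed connection avoids both costs under these assumptions.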

In QUIC, every HTTP request is numbered, and the packets within each HTTP “connection” carry their own sequence numbers, so the packets of different HTTP “connections” can be told apart and handled with separate retransmission and congestion control strategies. This solves the head-of-line blocking problem of the TCP-based SPDY protocol (a multiplexing protocol for HTTP proposed by Google, which is to be included in the HTTP/2.0 standard): when the packet at the head of the queue is lost, every packet behind it in the queue is blocked, whether or not it is related. In QUIC, a lost packet blocks only the HTTP request it belongs to.
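The difference between the two delivery rules can be shown with a small simulation. It is a sketch of the principle only: packets are modeled as (sequence number, stream name) pairs, retransmission is left out, and the function names are hypothetical.

```python
def deliver_single_queue(packets, lost):
    """TCP-like rule: nothing after the first lost packet is delivered."""
    delivered = []
    for seq, stream in packets:
        if seq in lost:
            break                      # everything behind the loss waits
        delivered.append((seq, stream))
    return delivered


def deliver_per_stream(packets, lost):
    """QUIC-like rule: a loss blocks only the stream it belongs to."""
    blocked = set()
    delivered = []
    for seq, stream in packets:
        if seq in lost:
            blocked.add(stream)        # later packets of this stream must wait
        elif stream not in blocked:
            delivered.append((seq, stream))
    return delivered


packets = [(0, "A"), (1, "B"), (2, "A"), (3, "C"), (4, "B"), (5, "C")]
print(deliver_single_queue(packets, lost={1}))  # [(0, 'A')]
print(deliver_per_stream(packets, lost={1}))    # [(0, 'A'), (2, 'A'), (3, 'C'), (5, 'C')]
```

Losing one packet of request B stalls all three requests in the single-queue case, but only B in the per-stream case.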

The longer-term significance of QUIC is that it accelerates the evolution of network protocols. In today’s networks, ideas such as bandwidth estimation, rate limiting with packet pacing, and sending redundant check packets to tolerate loss are hard to deploy because they require changes to the kernel protocol stack. QUIC sidesteps the tough problem of changing TCP by moving TCP’s work into the application layer. A new protocol version can be used as soon as the browser is upgraded, or even just a browser plugin installed, which shortens the evolution cycle of network protocols from “years” to “weeks”.

Internet == Google Data Center?

Google Fiber brings gigabit access to households, Google Loon extends coverage to the places that gigabit access cannot reach, and QUIC optimizes network transmission and accelerates the evolution of network protocols. Together they form a complete chain that controls every link from the user’s device to Google’s data center; put another way, Google is absorbing the user’s terminal into its own data center. Google Fiber and Loon will of course face policy pressure and opposition from operators, but if Google overcomes the resistance and completes these projects, it will become a more powerful monopolist in the Internet era than the WinTel alliance was in the PC era. This time Google does both the hardware and the software, whereas Microsoft did software and Intel did hardware, so there was at least a division of labor.

A data center is by nature a large engineering project that combines hardware and software. Hundreds of thousands of machines need a sensible network topology, customized network protocol stacks in the operating system, customized control paths in the switching equipment, and a reliable central controller that collects global state and schedules network traffic. If the “center” is stable enough, centralized control is always more efficient than distributed control, a fact that libertarians may be unwilling to admit. Google’s ambition is to turn the Internet into its own data center and to act as the “central controller” itself. Whether it can achieve this goal remains to be seen.

References

- [1] Google Fiber plans and pricing: https://support.google.com/fiber/answer/2657118?hl=en

- [2] Internet Inter-Domain Traffic, SIGCOMM 2010

- [3] http://www.hkix.net/hkix/stat/aggt/hkix-aggregate.html

- [4] http://www.google.com/loon/

- [5] Balloon Clumping to Provide Bandwidth Requested in Advance, US Patent

- [6] QUIC @ Google Developers Live, February 2014