How Internet Videos are Delivered to Every Household

A few days ago at a student gathering, someone asked: why can many people watch TV at the same time without stuttering, while live video on the internet often stutters? The answer is that television is broadcast (essentially the same way as radio), while internet video is delivered over point-to-point IP networks. For each additional viewer, the server has to send another copy of the data.

So how exactly does internet video reach every household? I've borrowed some popular-science material from a tutorial at SIGCOMM 2013, the top academic conference in the communications field, to share with everyone.

The Evolution of Internet Video

First Stage: 1992 ~ 2004

This was the era when internet video was just getting started. Video was used mainly inside organizations, so research focused on the network itself. Techniques such as bandwidth reservation and optimized packet scheduling were used to keep video smooth.

For scenarios like multi-party video conferencing, researchers recognized early on that each additional viewer requires an additional copy of the data, and IP Multicast was invented to address this. The video server sends each packet only once, addressed to a multicast group. Routers copy the packet wherever the paths to group members diverge, so every receiver gets its own copy without the server sending it repeatedly.
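A toy model makes the difference concrete. The sketch below assumes the network is a tree rooted at the video server. This is a simplification: real multicast routing protocols build such distribution trees themselves. The sketch counts how many copies of one packet cross the links under unicast and under multicast. The topology and node names are hypothetical.

```python
# Toy model: the network is a tree rooted at the video server, and every
# (child, parent) pair is one link. Names and topology are hypothetical.

def path_to_root(node, parent):
    """Return the links on the path from node up to the root (the server)."""
    links = []
    while node in parent:
        links.append((node, parent[node]))
        node = parent[node]
    return links


def unicast_link_copies(receivers, parent):
    """Unicast: the server sends a separate copy to each receiver,
    so every link on a receiver's path carries that receiver's copy."""
    return sum(len(path_to_root(r, parent)) for r in receivers)


def multicast_link_copies(receivers, parent):
    """Multicast: routers replicate the packet where paths diverge,
    so each link carries one copy however many receivers sit below it."""
    used_links = set()
    for r in receivers:
        used_links.update(path_to_root(r, parent))
    return len(used_links)


# Server -> router R -> three viewers.
parent = {"R": "server", "v1": "R", "v2": "R", "v3": "R"}
viewers = ["v1", "v2", "v3"]
print(unicast_link_copies(viewers, parent))    # 6 (3 of them on the server's uplink)
print(multicast_link_copies(viewers, parent))  # 4 (only 1 on the server's uplink)
```

The saving grows with the audience: under unicast, the server's uplink carries one copy per viewer. Under multicast, it carries one copy in total.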

The layered structure of video was born in this era:

- Network Abstraction Layer: the video transport protocol or file format. Examples include RTP/IP for real-time network video, MP4 for file storage, MMS for streaming media, and MPEG-2 for broadcasting. A video file's extension reflects this layer.

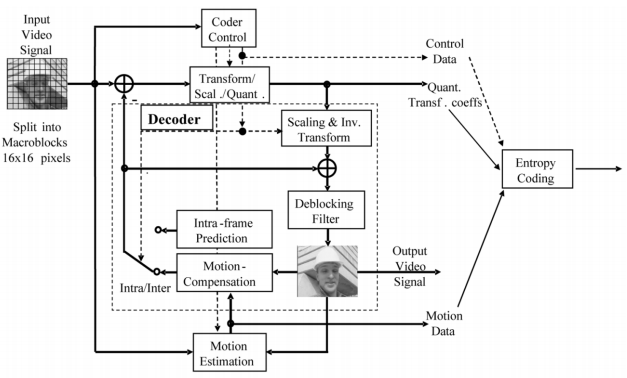

- Video Coding Layer: this layer determines how the video stream is encoded and compressed. This complex process is the famous codec, such as MPEG-2, MPEG-4 ASP, H.264/AVC, and H.265/HEVC. Generally, the newer the coding standard, the higher the compression ratio at the same quality, and the more computing resources encoding requires. As shown in the figure below, the standard specifies only how to decode, not how to encode. Audio and video streams are generally encoded independently.

h264-1
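A quick back-of-the-envelope calculation shows why compression matters. The sketch assumes 1080p video at 30 frames per second with 8-bit YUV 4:2:0 sampling, which averages 12 bits per pixel. The 5 Mbps encoded bitrate is a hypothetical figure chosen for illustration, not a property of any particular codec.

```python
def raw_bitrate_bps(width, height, fps, bits_per_pixel=12):
    """Uncompressed bitrate; 12 bits/pixel assumes 8-bit YUV 4:2:0 sampling."""
    return width * height * bits_per_pixel * fps


raw = raw_bitrate_bps(1920, 1080, 30)
print(raw / 1e6)               # 746.496 (Mbps, uncompressed)

encoded_bps = 5e6              # hypothetical encoded bitrate: 5 Mbps
print(round(raw / encoded_bps, 1))  # 149.3, the compression ratio the codec must achieve
```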

In this era, the PC market was just taking off, and various companies launched their own video standards to claim the untapped market. The Web was still used purely for browsing pages, and video was confined to client-side players. Videos were distributed as complete files, using P2P technology such as BitTorrent.

Second Stage: 2005 ~ 2011

The founding of YouTube in 2005 marked the beginning of the online video era. More and more traditional TV stations began broadcasting on the internet, and user-generated videos broke into territory once reserved for TV media.

In this era, Flash was the de facto standard for internet video. It worked across operating systems and browsers and used Adobe's proprietary RTMP protocol. Flash also kept improving. For example, Flash Player 9.0 with ActionScript 3.0 (2006) introduced a new virtual machine and JIT compiler, making Flash code run more than 10 times faster.

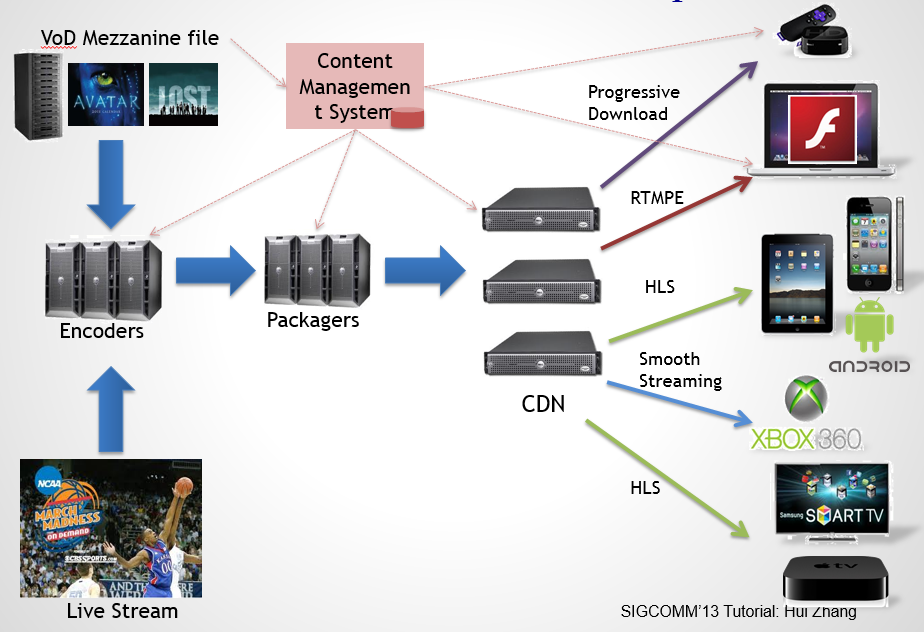

CDN (Content Delivery Network) technology was also maturing, so a single server no longer had to send video data to every client. Instead, the video could be pushed to CDN nodes, which then served nearby clients. This reduced the load on any single machine and, more importantly, made videos load faster for users.

Third Stage: 2011 ~ Present

In 2010, the mobile internet wave hit. Neither the iPhone nor the iPad supports Flash, and in 2012 Adobe announced that it would stop supporting Flash on Android.

Internet video now has to serve four different screens: PCs, smartphones, tablets, and smart TVs. User behavior and video applications differ from screen to screen. On top of that come multiple operating systems: Windows, Mac, GNU/Linux, Android, iOS, Windows Phone, and more.

The Evolution of Video Distribution Protocols

First Generation: HTTP Download

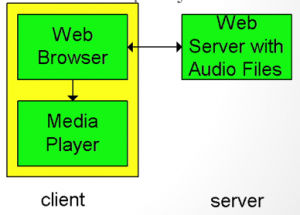

The simplest solution is for the browser to download the entire video and then hand it over to a player. This cannot support live streaming, and viewers must wait a long time before a video starts. So the scheme was improved:

- The browser downloads a meta file

- The browser launches the corresponding player and hands over the meta file

- The player establishes a TCP connection with the server to download the video file, playing while downloading

The following example code plays the video while it downloads:

```html
<video poster="http://www.html5rocks.com/en/tutorials/video/basics/star.png" controls>
  <source src="http://www.html5rocks.com/en/tutorials/video/basics/Chrome_ImF.ogv"
          type='video/ogg; codecs="theora, vorbis"' />
</video>
```

However, plain HTTP downloads as fast as it can. For example, suppose my connection is 10 Mbps and the video's bitrate is only 1 Mbps. The buffer then keeps growing, and if I stop watching early, everything downloaded beyond that point was fetched for nothing. This wastes system resources and network bandwidth.
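The arithmetic behind this is simple. Below is a minimal sketch using the 10 Mbps / 1 Mbps numbers from the example. It assumes a constant download speed, a constant video bitrate, and a video long enough that the download has not yet finished.

```python
def buffered_seconds(download_mbps, bitrate_mbps, elapsed_s):
    """Seconds of video sitting in the buffer after elapsed_s seconds,
    assuming playback started immediately and never stalled."""
    downloaded_video_s = download_mbps * elapsed_s / bitrate_mbps
    return downloaded_video_s - elapsed_s


def wasted_megabits(download_mbps, bitrate_mbps, watched_s):
    """Data downloaded but never played if the viewer quits after watched_s."""
    return (download_mbps - bitrate_mbps) * watched_s


print(buffered_seconds(10, 1, 60))  # 540.0 seconds of video already buffered
print(wasted_megabits(10, 1, 60))   # 540 megabits fetched for nothing if the viewer leaves now
```

After just one minute, nine minutes of video are already buffered. That is why pacing the download to the playback rate, or close to it, matters.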

Furthermore, HTTP downloads a large file at a fixed bit rate, which cannot be dynamically adjusted according to network conditions. If the network conditions are poor, I would rather watch standard definition than endure buffering to watch high definition.

Second Generation: Proprietary Streaming Protocols

Dedicated streaming protocols, represented by RTSP (standardized by the IETF) and Adobe's proprietary RTMP, run directly over TCP or UDP rather than HTTP. Typically there is a control connection and a data connection (as in FTP). The control connection natively supports streaming operations such as start, reset, fast-forward, and pause, so there is no need to emulate them with HTTP Range requests.
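To make the "control connection" idea concrete, here is a sketch that builds RTSP control messages as text. The message layout follows the RTSP/1.0 format: a request line, a `CSeq` header, an optional `Session` header, and a blank line. The server URL and session id are hypothetical. RTSP is used because it is a readable text protocol; RTMP's binary chunk format is not shown.

```python
def rtsp_request(method, url, cseq, session=None, headers=None):
    """Build a minimal RTSP/1.0 request: request line, CSeq, optional
    Session and extra headers, terminated by an empty line (CRLF endings)."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    if session is not None:
        lines.append(f"Session: {session}")
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    return "\r\n".join(lines) + "\r\n\r\n"


url = "rtsp://media.example.com/movie"  # hypothetical stream URL
session = "12345678"                    # hypothetical id returned by an earlier SETUP

# Seek: ask the server to play from 60 s of normal play time (npt) onward.
play = rtsp_request("PLAY", url, 4, session, {"Range": "npt=60-"})
pause = rtsp_request("PAUSE", url, 5, session)
print(play)
print(pause)

# For comparison, a plain HTTP player seeks by requesting a byte range and
# must work out the byte offset for 60 s itself (1500000 is a made-up offset).
http_seek = ("GET /movie.mp4 HTTP/1.1\r\nHost: media.example.com\r\n"
             "Range: bytes=1500000-\r\n\r\n")
```

With RTSP, the server interprets "play from 60 s" itself and keeps track of the session. With HTTP, the client must translate time into bytes.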

On the browser side, the architecture is the same as the improved first-generation scheme. The server, however, keeps per-connection state.

However, licenses for proprietary streaming servers are expensive. In addition, the non-standard ports these protocols use (for example, 554 for RTSP and 1935 for RTMP) are easily blocked by firewalls.

Third Generation: HTTP Streaming

Compared with the second generation, HTTP streaming moves back to standard HTTP on port 80. The most important difference is that the client maintains the session state and control logic, so the server does not need to keep any state. This is essentially the classic "thin client" versus "fat client" debate. Growing user numbers make server performance an ever bigger bottleneck, while clients have spare computing power. As a result, many web applications have moved work from the server to the client, which also makes operations more responsive.

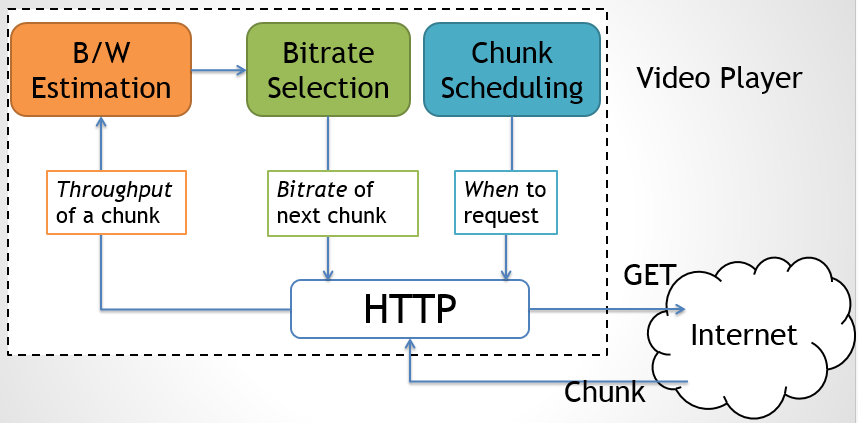

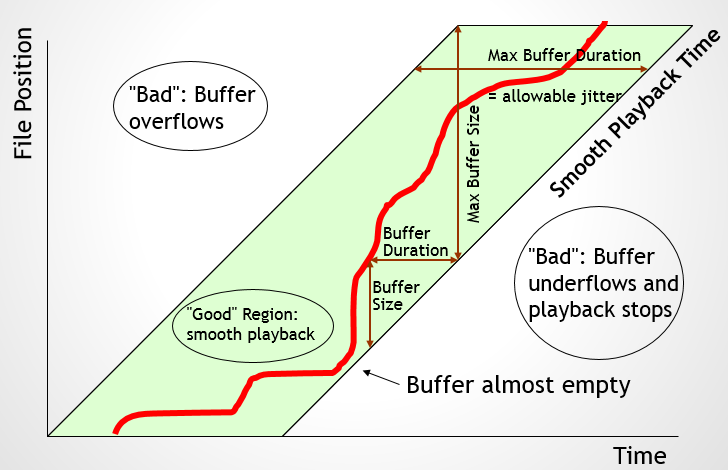

The server pre-encodes the video at several bit rates and splits each version into blocks. Each block starts with a key frame, so blocks can be decoded independently, which lets the client switch bit rates at any block boundary. The client picks a bit rate through a "feedback loop" in three steps:

- It estimates the available bandwidth from how fast the previous block downloaded (the orange block in the upper left corner of the figure).
- It selects the highest bit rate the current bandwidth can sustain (green).
- It downloads the next block (blue) at that bit rate.
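A minimal sketch of this feedback loop follows. It assumes a hypothetical five-rung bitrate ladder and a safety margin of 80%. Real players use various, often more elaborate, bandwidth estimators, and the margin here is an illustrative assumption rather than part of any standard.

```python
# Hypothetical bitrate ladder (kbps): the versions the server pre-encoded.
LADDER_KBPS = [300, 750, 1500, 3000, 6000]


def estimate_kbps(block_bytes, download_seconds):
    """Throughput measured while downloading the previous block."""
    return block_bytes * 8 / 1000 / download_seconds


def choose_bitrate(throughput_kbps, ladder=LADDER_KBPS, safety=0.8):
    """Highest rung that fits within a safety fraction of the estimate;
    falls back to the lowest rung if none fits."""
    budget = throughput_kbps * safety
    feasible = [rate for rate in ladder if rate <= budget]
    return max(feasible) if feasible else min(ladder)


def feedback_loop(measurements):
    """measurements: (block_bytes, seconds) for each downloaded block.
    Returns the bitrate chosen for the block that follows each one."""
    return [choose_bitrate(estimate_kbps(b, s)) for b, s in measurements]


# A 1,000,000-byte block that took 2 s suggests 4000 kbps; 80% of that is
# 3200 kbps, so the next block is fetched at 3000 kbps.
print(feedback_loop([(1_000_000, 2.0), (1_000_000, 8.0), (250_000, 0.1)]))
# [3000, 750, 6000]
```

Each client runs this loop on its own, using only the timing of whole blocks. That limitation comes up again when looking at why videos buffer.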

Why Videos Buffer

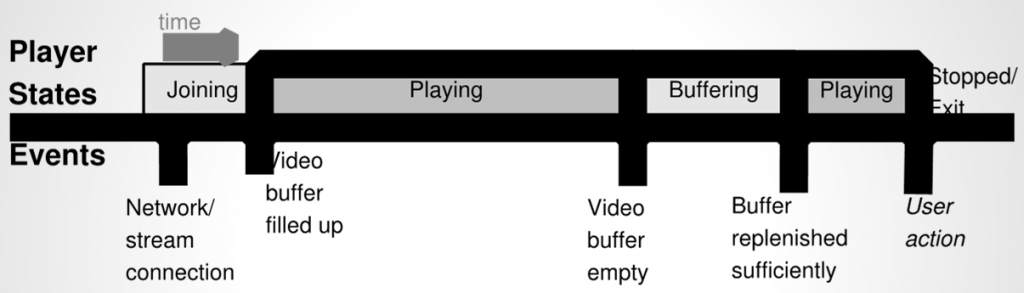

Everyone knows that internet video "buffers". When you start watching, the player first buffers a certain amount of content, then keeps downloading new content while playing. If playback consumes content faster than the download delivers it, the buffer eventually runs dry. Playback then pauses until enough content has been buffered again, and then resumes.
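This pause-and-resume behavior can be simulated in a few lines. The sketch is a simplification with several assumptions:

- Time advances in one-second steps.
- The download rate is given as seconds of video received per wall-clock second.
- The startup and resume thresholds (2 seconds each) are hypothetical values.

```python
def simulate_playback(arrivals, startup_s=2.0, resume_s=2.0):
    """arrivals[i] = seconds of video downloaded during wall-clock second i.
    Returns (number_of_stalls, whole seconds spent waiting after stalls).
    The initial startup wait is not counted as a stall."""
    buffer_s = 0.0
    playing = False
    started = False
    stalls = 0
    stalled_seconds = 0
    for arrived in arrivals:
        buffer_s += arrived
        if not playing:
            threshold = resume_s if started else startup_s
            if buffer_s >= threshold:
                playing = True
                started = True
            else:
                if started:
                    stalled_seconds += 1  # waiting to refill after a stall
                continue
        # Playing: consume up to one second of buffered video.
        played = min(1.0, buffer_s)
        buffer_s -= played
        if played < 1.0:
            playing = False  # buffer ran dry mid-second: a stall begins
            stalls += 1
    return stalls, stalled_seconds


# Download keeps up for 3 s, then delivers only 0.5 s of video per second.
print(simulate_playback([1, 1, 1] + [0.5] * 6))  # (1, 3)
print(simulate_playback([2] * 10))               # (0, 0)
```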

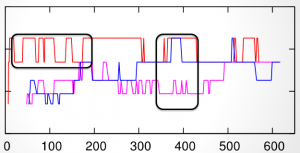

As mentioned above, the client adjusts its bit rate dynamically through the "feedback loop". Unfortunately, experiments show that this feedback does not make the bit rates of competing clients converge to a stable, even share. Instead, they oscillate, as in the figure below. The horizontal axis is time, the vertical axis is bit rate, and the three lines are three clients; the black box marks a period of severe oscillation.

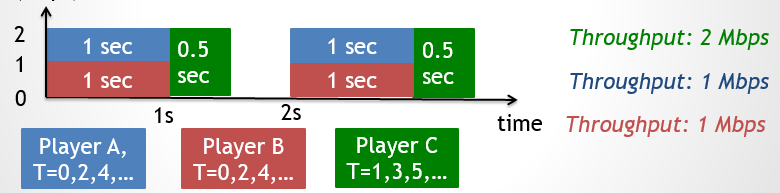

Another problem is fairness between clients. Ideally, clients should get similar bit rates no matter when they start downloading. But consider the following situation: clients A and B start downloading at the same time, while client C starts at a different time. Suppose A and B always measure a download speed of 1 Mbps, while C always measures 2 Mbps. This could happen, for instance, if A's and B's block downloads overlap and split the link, while C's downloads fall into the gaps between them. Following the "feedback loop" algorithm, A and B will then always see a lower bit rate than C.
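The A/B/C scenario can be reproduced with a small fluid simulation. The sketch makes the following assumptions:

- There is a hypothetical 2 Mbps bottleneck link.
- Each block is 2 Mbit.
- Concurrent downloads split the link evenly.
- C starts its download just as A and B finish theirs.

Exact fractions avoid floating-point drift; `link_mbps` must be positive or the loop never ends.

```python
from fractions import Fraction


def measured_throughput(link_mbps, block_mbit, start_ms):
    """Fluid simulation, 1 ms per step: active downloads split the link evenly.
    start_ms maps client name -> start time of its block download (ms).
    Returns the throughput (Mbps) each client measures for its block.
    Note: 1 Mbps = 1 kilobit per millisecond."""
    size_kbit = Fraction(block_mbit) * 1000
    remaining = {c: size_kbit for c in start_ms}
    finish = {}
    tick = 0
    while len(finish) < len(start_ms):
        active = [c for c in start_ms if start_ms[c] <= tick and c not in finish]
        for c in active:
            remaining[c] -= Fraction(link_mbps) / len(active)
            if remaining[c] <= 0:
                finish[c] = tick + 1
        tick += 1
    return {c: float(size_kbit / (finish[c] - start_ms[c])) for c in start_ms}


# A and B download together; C's download falls into the gap after them.
print(measured_throughput(2, 2, {"A": 0, "B": 0, "C": 2000}))
# {'A': 1.0, 'B': 1.0, 'C': 2.0}
```

All three clients share the same link, yet C measures twice the throughput of A and B. A feedback loop fed these numbers will keep C at a higher bit rate.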

There are two root causes. First, because the client works at the level of HTTP, it only knows how long each video block took to download as a whole; it has no way of seeing the delay of individual packets. Second, each client "does its own thing" without any central coordination. So stateless HTTP-based streaming is not a panacea; it involves trade-offs.

Measuring the Quality of Video Distribution

Traditionally, video distribution quality was evaluated by computing the video's signal-to-noise ratio and sending out large numbers of questionnaires asking viewers for scores. Researchers then checked how well the two sets of data correlated. In the Internet era, questionnaires are outdated, and more objective indicators are used instead, such as how long viewers keep watching (if quality is poor, users quit halfway). Academic metrics such as signal-to-noise ratio have also given way to metrics users can actually perceive, such as buffering time and average bit rate.

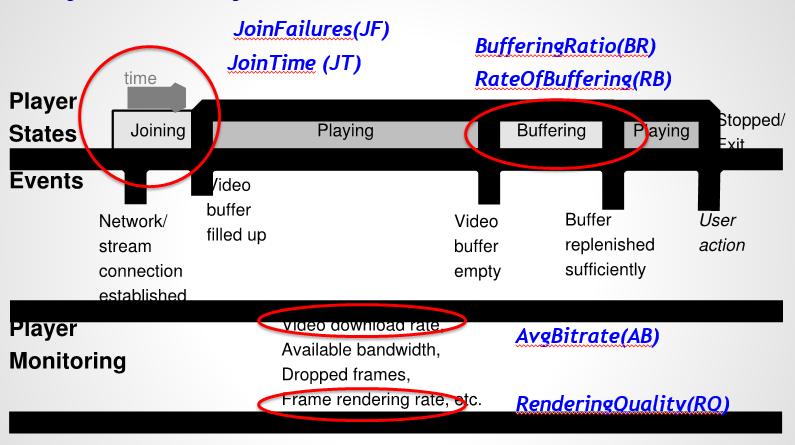

In fact, the players on mainstream video websites quietly report playback statistics, including:

- the number of failed playback starts;
- the startup buffering time;
- the buffering ratio (the fraction of playback time spent buffering);
- the rate of buffering events;
- the average bit rate;
- the rendered frame rate.
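Here is a sketch of how such metrics might be computed from one session's summary. The `SessionLog` fields are a hypothetical record format, not any website's actual telemetry schema. The average bit rate is a simple mean that assumes all blocks have the same duration.

```python
from dataclasses import dataclass, field


@dataclass
class SessionLog:
    """Hypothetical per-session summary a player might report."""
    join_failed: bool
    join_time_s: float = 0.0       # startup buffering before the first frame
    play_time_s: float = 0.0       # time spent actually playing
    rebuffer_time_s: float = 0.0   # time stalled after playback began
    rebuffer_events: int = 0
    block_bitrates_kbps: list = field(default_factory=list)  # one per played block


def qoe_metrics(s):
    if s.join_failed:
        return {"join_failed": True}
    session_s = s.play_time_s + s.rebuffer_time_s
    return {
        "join_failed": False,
        "join_time_s": s.join_time_s,
        # Fraction of the session (after startup) spent buffering.
        "buffering_ratio": s.rebuffer_time_s / session_s,
        "buffering_events_per_min": s.rebuffer_events / (session_s / 60),
        # Simple mean, assuming all blocks have the same duration.
        "avg_bitrate_kbps": sum(s.block_bitrates_kbps) / len(s.block_bitrates_kbps),
    }


log = SessionLog(join_failed=False, join_time_s=1.8, play_time_s=570,
                 rebuffer_time_s=30, rebuffer_events=3,
                 block_bitrates_kbps=[1500, 1500, 3000, 3000])
print(qoe_metrics(log))  # buffering_ratio 0.05, 0.3 events/min, 2250.0 kbps average
```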

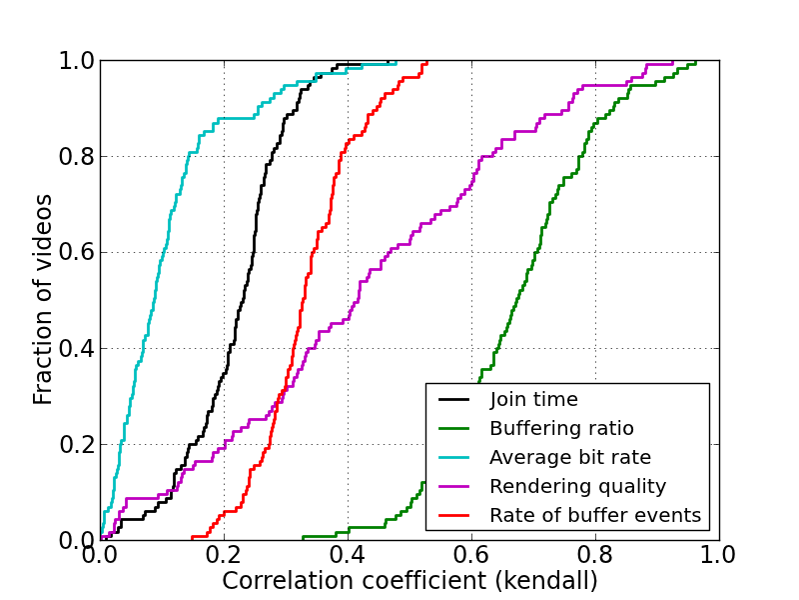

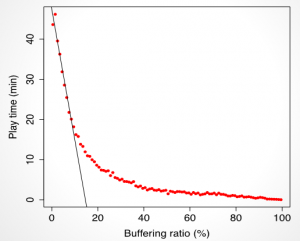

Plotting the fraction of the video that was watched (vertical axis) against each metric (horizontal axis) gives the curves shown in the figure:

Obviously, the buffering ratio has the greatest impact on user experience, which is more important than the start of playback time, bit rate, display frame rate, etc. Another study shows that for every 1% increase in the buffering ratio, the user’s playback time will decrease by 3 minutes.

Of course, correlation does not imply causation. Is it possible that a combination of factors is more important than any single factor? Is there a correlation between these factors? Methods such as information gain can be used to delve deeper. (This reminds me of the course on probability theory and mathematical statistics…)
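As a sketch of the information-gain idea, the example below measures how much knowing a metric reduces uncertainty about whether a viewer quits early. The session data is hypothetical, and real analyses would first have to discretize continuous metrics such as the buffering ratio into bins.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    return -sum((k / n) * math.log2(k / n) for k in Counter(labels).values())


def information_gain(feature, labels):
    """Entropy of labels minus their average entropy after splitting on feature."""
    n = len(labels)
    groups = {}
    for value, label in zip(feature, labels):
        groups.setdefault(value, []).append(label)
    conditional = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - conditional


# Hypothetical sessions: did the viewer quit early?
outcome   = ["quit", "quit", "stayed", "stayed"]
buffering = ["high", "high", "low", "low"]   # perfectly separates the outcomes
bitrate   = ["high", "low", "high", "low"]   # tells us nothing in this data
print(information_gain(buffering, outcome))  # 1.0
print(information_gain(bitrate, outcome))    # 0.0
```

To study combinations of factors, the same function can be applied to a combined feature, such as `list(zip(buffering, bitrate))`.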

Which CDN Node Should Be Used?

Where interconnection between network operators is extremely poor, CDNs are especially important. Intuitively, downloading from nearby beats downloading from far away. The question is this: large video websites deploy CDN nodes in many locations and on many ISPs, so how do they determine which node is "nearby"?

For administrative purposes, the Internet divides IP addresses among tens of thousands of autonomous systems (AS), each with a unique number (ASN). An autonomous system usually belongs to a single organization; for example, the University of Science and Technology is an autonomous system. If two clients are in the same autonomous system, they are almost certainly on the same operator.
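Mapping a client IP to its ASN is a longest-prefix match against a table of announced address blocks. In practice, such a table is derived from BGP routing data or an IP-to-ASN database. The sketch below uses a tiny hypothetical table built from documentation address ranges (RFC 5737) and documentation ASNs (RFC 5398), together with Python's standard `ipaddress` module.

```python
import ipaddress

# Hypothetical prefix table: documentation prefixes and documentation ASNs.
PREFIXES = {
    "198.51.100.0/24": 64496,
    "198.51.100.128/25": 64497,   # a more specific prefix inside the /24
    "203.0.113.0/24": 64498,
}
TABLE = [(ipaddress.ip_network(p), asn) for p, asn in PREFIXES.items()]


def lookup_asn(ip):
    """Longest-prefix match: the most specific prefix containing ip wins."""
    addr = ipaddress.ip_address(ip)
    matches = [(net.prefixlen, asn) for net, asn in TABLE if addr in net]
    return max(matches)[1] if matches else None


def same_as(ip_a, ip_b):
    """True if both addresses map to the same (known) autonomous system."""
    asn = lookup_asn(ip_a)
    return asn is not None and asn == lookup_asn(ip_b)


print(lookup_asn("198.51.100.10"))             # 64496
print(lookup_asn("198.51.100.200"))            # 64497 (the /25 is more specific)
print(same_as("203.0.113.5", "203.0.113.77"))  # True
print(lookup_asn("192.0.2.1"))                 # None
```

A linear scan is fine for a sketch; a production lookup over hundreds of thousands of prefixes would use a trie or a dedicated library.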

The ASN alone is not enough, because a single autonomous system may span the whole country, and clients in different places should use the CDN node geographically closest to them. So a GeoIP database is also used to divide users into target market areas (Designated Market Areas, DMA).

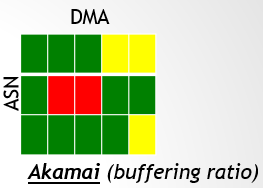

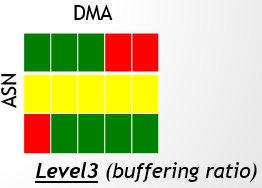

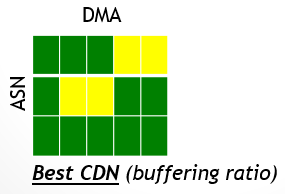

For each (ASN, DMA, CDN) triplet, compute metrics such as the buffering ratio, startup time, and failure rate. Plotting them gives the following figures (each series corresponds to one CDN node):

For each (ASN, DMA) pair, pick the best-performing CDN node, and you get the desired CDN assignment plan. The effect of time is not considered here, though. Some areas have different network conditions during the day and at night, and a finer-grained analysis can account for that.
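The selection step can be sketched as a group-by followed by an argmin. The sketch makes several assumptions:

- The session records, DMA names, and CDN names are hypothetical.
- Nodes are ranked by a single metric, the mean buffering ratio.
- A real system would combine several metrics and require a minimum number of sessions per group.

Time-of-day effects could be handled by adding an hour field to the grouping key.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical per-session records: (asn, dma, cdn, buffering_ratio)
records = [
    (64496, "DMA-1", "cdn-a", 0.02),
    (64496, "DMA-1", "cdn-a", 0.04),
    (64496, "DMA-1", "cdn-b", 0.01),
    (64496, "DMA-2", "cdn-a", 0.03),
    (64496, "DMA-2", "cdn-b", 0.09),
]


def best_cdn_per_group(records):
    """For each (asn, dma), return the CDN with the lowest mean buffering ratio."""
    by_triplet = defaultdict(list)
    for asn, dma, cdn, ratio in records:
        by_triplet[(asn, dma, cdn)].append(ratio)
    best = {}
    for (asn, dma, cdn), ratios in by_triplet.items():
        score = mean(ratios)
        key = (asn, dma)
        if key not in best or score < best[key][1]:
            best[key] = (cdn, score)
    return {key: cdn for key, (cdn, _) in best.items()}


print(best_cdn_per_group(records))
# {(64496, 'DMA-1'): 'cdn-b', (64496, 'DMA-2'): 'cdn-a'}
```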

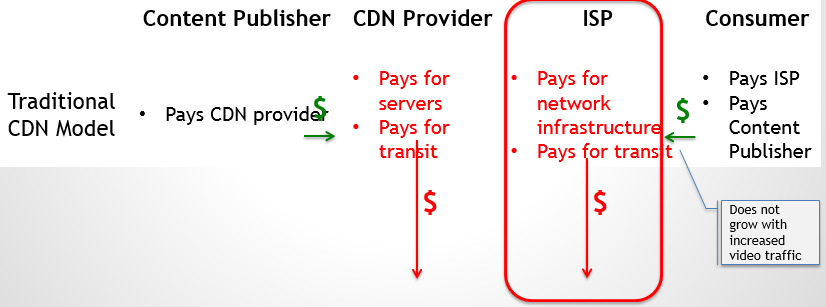

The Ecosystem of Internet Video

A few months ago, several carriers jointly proposed charging for WeChat, which caused a storm of public opinion. In fact, we should look at this issue from the perspective of an ecosystem, because no link in the chain can keep running at a loss. Internet video, for example, is an ecosystem made up of content rights holders, platform providers, CDN providers, network operators, end users, advertisers, and other parties.

As video occupies an increasingly large proportion of Internet traffic (it has now reached more than half and is still growing), the pressure of Internet video on the entire Internet infrastructure can no longer be ignored. The co-evolution of all parties in the ecosystem will be the inevitable trend of Internet video development.

This article has reviewed the evolution of Internet video and of video distribution protocols. It has also discussed the causes of video stuttering and possible remedies, and how to choose CDN nodes. We also see increasingly diverse client platforms, a growing video market, and a series of problems that the existing network architecture poses for video distribution. I am not an expert in this field and my knowledge is limited, so criticism and in-depth discussion are welcome.

References

- Internet Video: Past, Present and Future. http://conferences.sigcomm.org/sigcomm/2013/ttliv.php

- Overview of the H.264/AVC video coding standard. http://ip.hhi.de/imagecom_G1/assets/pdfs/csvt_overview_0305.pdf